Research Statement

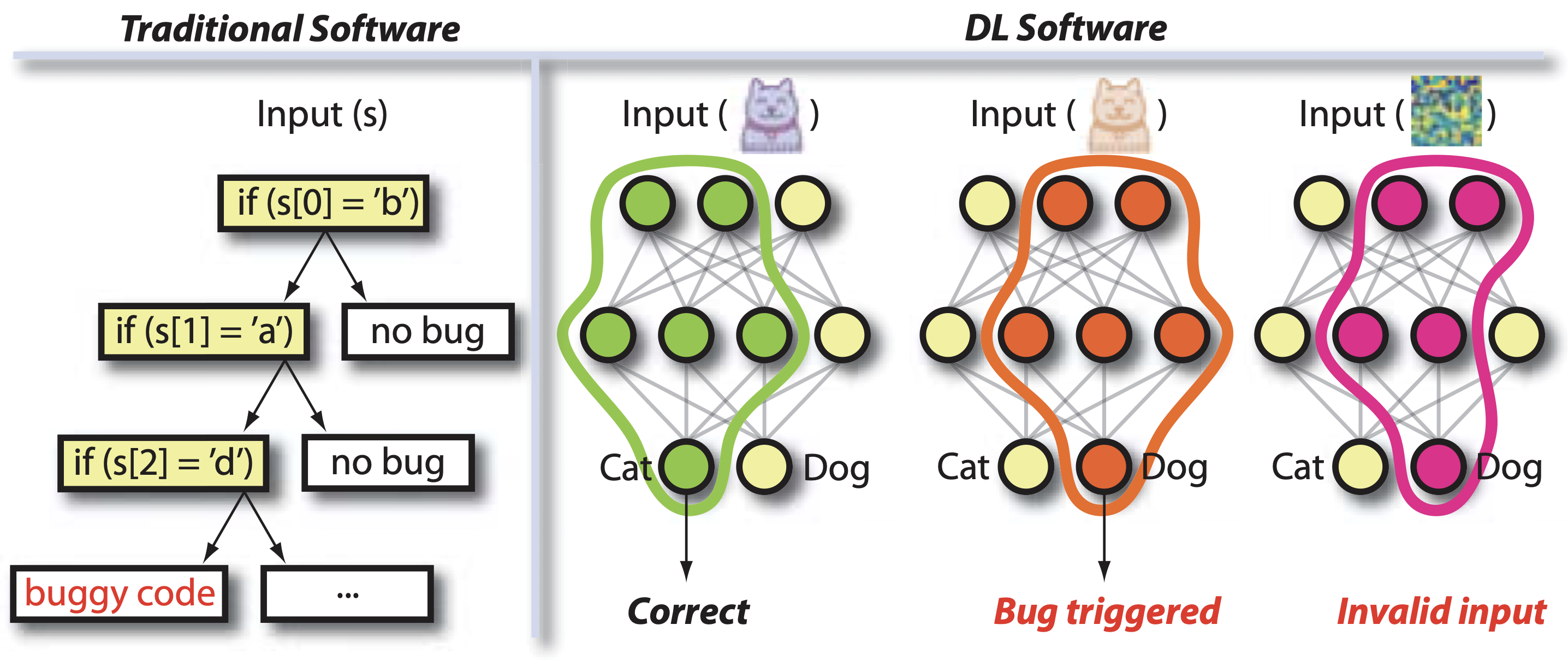

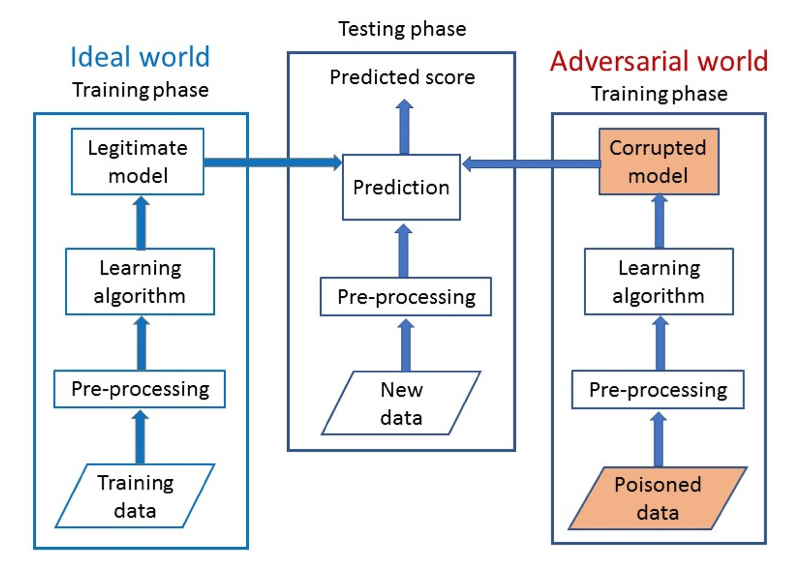

Despite great advances in machine learning, especially deep learning, current learning systems have severe limitations. A learner that performs well when it is trained and tested on the same or similar data distributions can still fail in new scenarios, and it can be fooled and misled by attacks at inference time (adversarial examples) or at training time (data poisoning attacks). As learning systems become pervasive, safeguarding their security and privacy is critical.
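
To make the inference-time threat concrete, here is a minimal sketch of a one-step gradient-sign evasion attack (in the style of the fast gradient sign method) against a toy logistic-regression classifier. The weights, the input, the budget `eps`, and all function names are hypothetical and chosen only for illustration; this is not the physical road-sign attack from our work, only the basic idea that a small, bounded change to the input can flip a prediction.

```python
import math
from typing import List

def score(w: List[float], b: float, x: List[float]) -> float:
    """Decision value w.x + b of a linear classifier."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b

def predict(w: List[float], b: float, x: List[float]) -> int:
    """Predicted label in {-1, +1}."""
    return 1 if score(w, b, x) >= 0 else -1

def sigmoid(t: float) -> float:
    """Numerically stable logistic function."""
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    z = math.exp(t)
    return z / (1.0 + z)

def loss_grad_wrt_input(w: List[float], b: float, x: List[float], y: int) -> List[float]:
    """Gradient of the logistic loss log(1 + exp(-y * (w.x + b))) with respect to x."""
    margin = y * score(w, b, x)
    # Chain rule: dL/dmargin = -sigmoid(-margin) and dmargin/dx = y * w.
    coeff = -y * sigmoid(-margin)
    return [coeff * wi for wi in w]

def gradient_sign_attack(w: List[float], b: float, x: List[float], y: int,
                         eps: float) -> List[float]:
    """Shift every feature by eps in the direction that increases the loss (L-infinity budget)."""
    grad = loss_grad_wrt_input(w, b, x, y)
    return [xi + eps * ((g > 0) - (g < 0)) for xi, g in zip(x, grad)]

# Hypothetical toy model and input.
w, b = [1.0, -2.0], 0.0
x, y = [0.5, 0.1], 1
x_adv = gradient_sign_attack(w, b, x, y, eps=0.2)
print(predict(w, b, x), predict(w, b, x_adv))  # prints: 1 -1
```

For a linear model the gradient sign is simply the sign of `-y * w`, so the attack shifts each feature against the weight that supports the true label; for deep networks the same step uses the backpropagated gradient instead.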

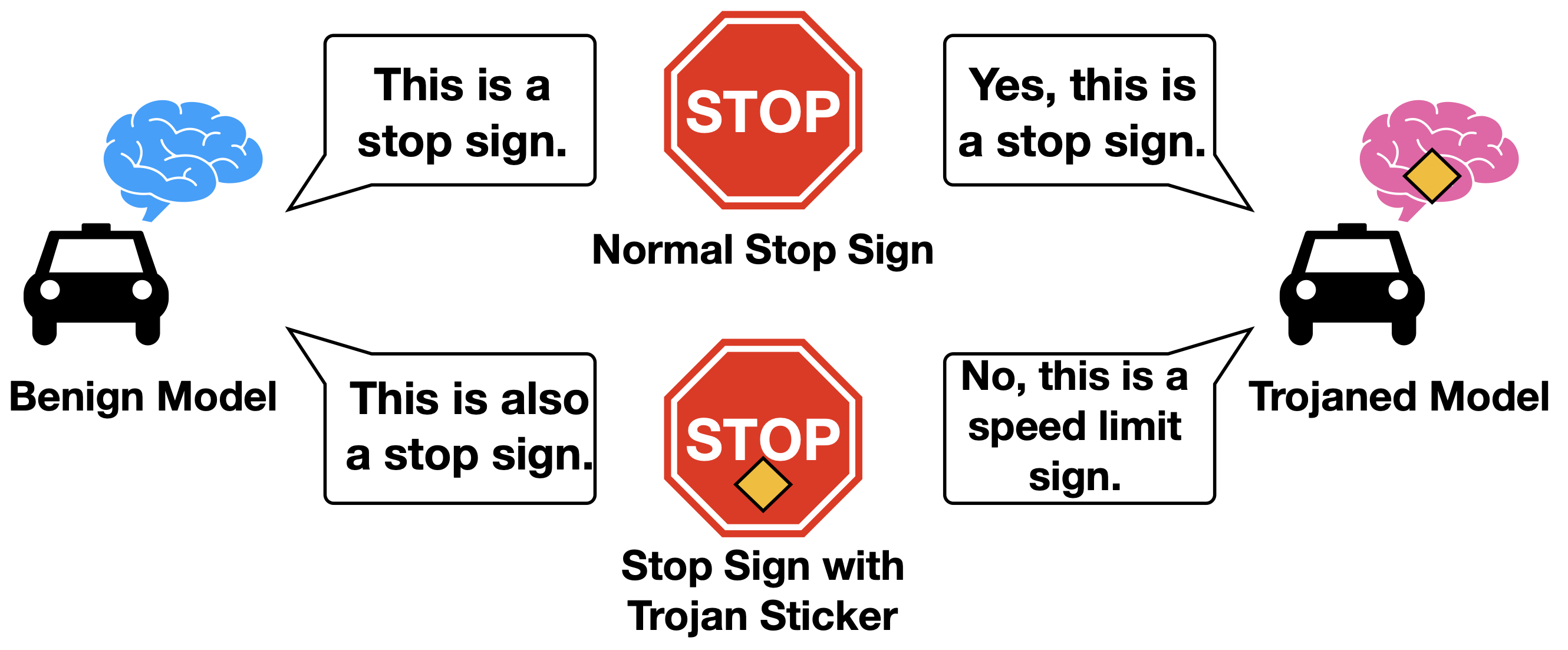

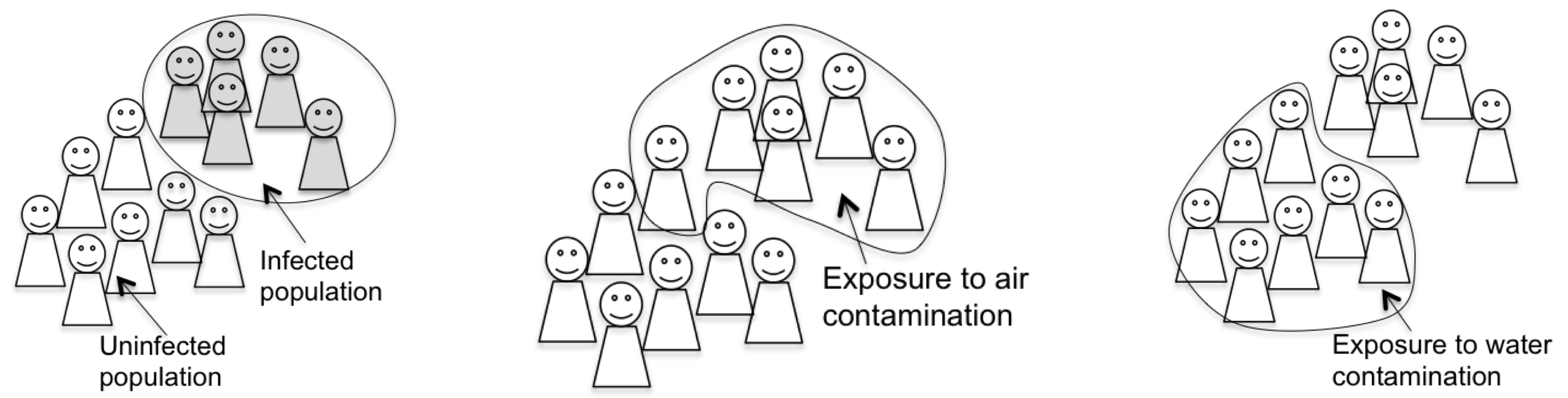

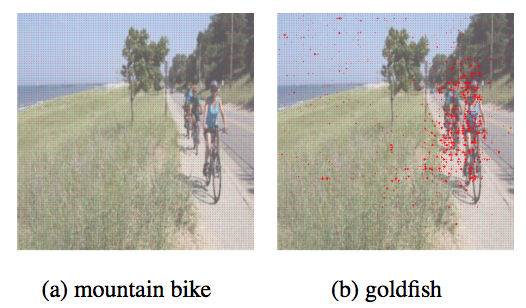

In particular, recent studies have shown that current learning systems are vulnerable to evasion attacks. For example, our work has shown that placing printed color stickers on road signs is enough to fool a learner with such physical perturbations. A model may also be trained on a poisoned dataset, causing it to make incorrect predictions in certain scenarios. Our recent work has demonstrated that attackers can use a poisoned dataset to embed “backdoors” in learners used in real-world applications such as face recognition systems. Further work on ML model vulnerabilities is listed under Threat model exploration below.
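
The backdoor idea can be sketched in a few lines: the attacker stamps a fixed trigger pattern onto a small fraction of training inputs and relabels them to a chosen target class, so that a model trained on the result learns to associate the trigger with that class. This is a generic, minimal sketch under our own assumptions (inputs are flattened feature lists, the trigger overwrites fixed positions, and the poisoning rate, toy data, and all names are hypothetical); it is not the specific procedure used in the face-recognition experiments mentioned above.

```python
import random
from typing import Dict, List, Set, Tuple

Sample = Tuple[List[float], int]  # (flattened input, label)

def stamp_trigger(x: List[float], trigger: Dict[int, float]) -> List[float]:
    """Return a copy of x with the trigger features overwritten (e.g. a small pixel patch)."""
    stamped = list(x)
    for idx, value in trigger.items():
        stamped[idx] = value
    return stamped

def poison_dataset(data: List[Sample], trigger: Dict[int, float], target_label: int,
                   rate: float, seed: int = 0) -> Tuple[List[Sample], Set[int]]:
    """Stamp the trigger on a random fraction `rate` of samples and relabel them.

    The input dataset is not modified; returns the poisoned copy and the poisoned indices.
    """
    rng = random.Random(seed)
    n_poison = int(round(rate * len(data)))
    chosen = set(rng.sample(range(len(data)), n_poison))
    poisoned: List[Sample] = []
    for i, (x, y) in enumerate(data):
        if i in chosen:
            poisoned.append((stamp_trigger(x, trigger), target_label))
        else:
            poisoned.append((list(x), y))
    return poisoned, chosen

# Hypothetical toy data: 20 "images" of 3x3 = 9 pixels with labels 0..2.
clean = [([0.0] * 9, i % 3) for i in range(20)]
trigger = {8: 1.0}  # bottom-right pixel set to white
poisoned, idx = poison_dataset(clean, trigger, target_label=0, rate=0.1)
print(len(idx), [poisoned[i][1] for i in sorted(idx)])  # prints: 2 [0, 0]
```

A model trained on `poisoned` would then be checked on clean inputs (where it should behave normally) and on triggered inputs (where the attacker hopes it predicts `target_label`); the training step itself is omitted here.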

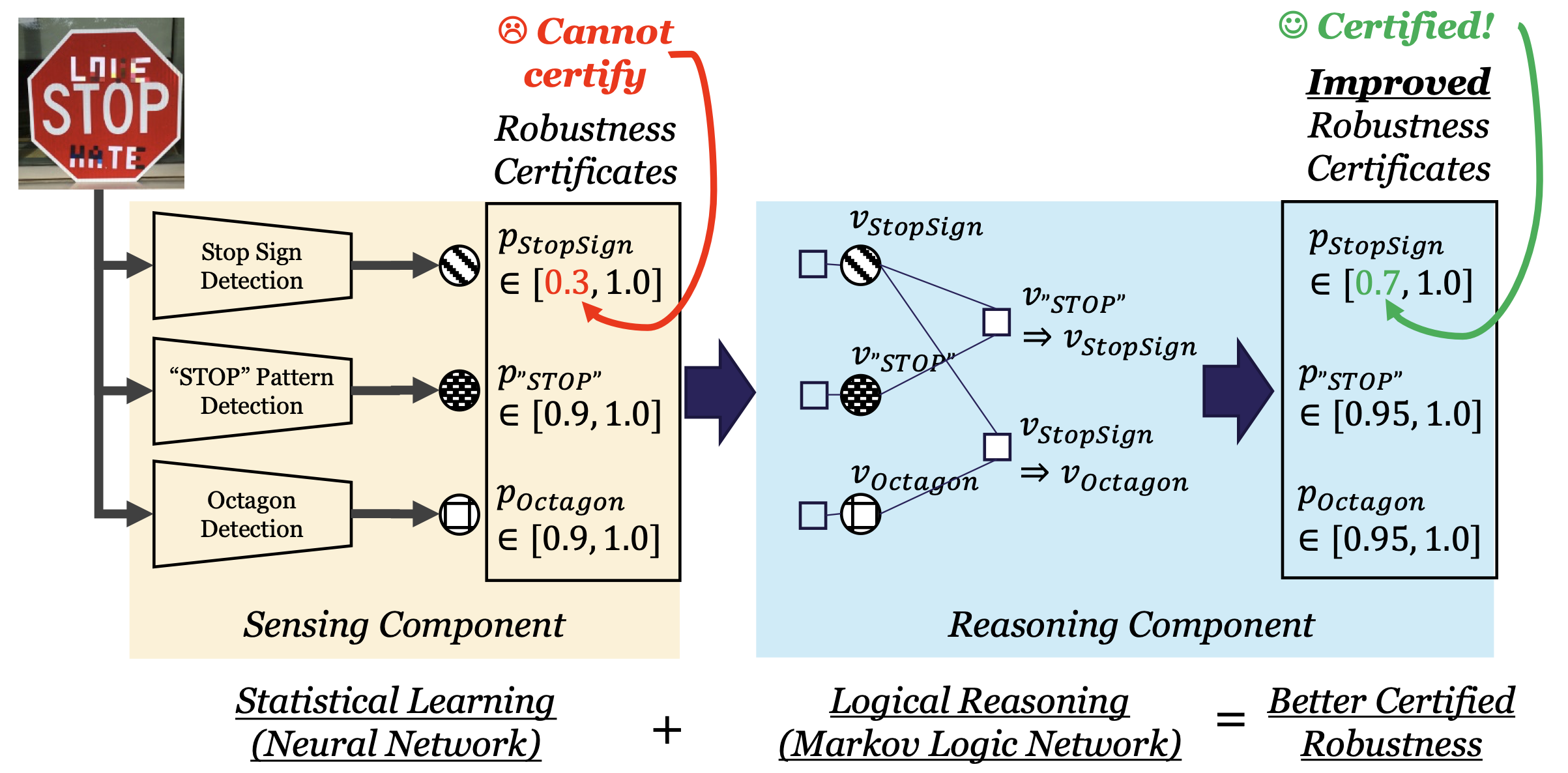

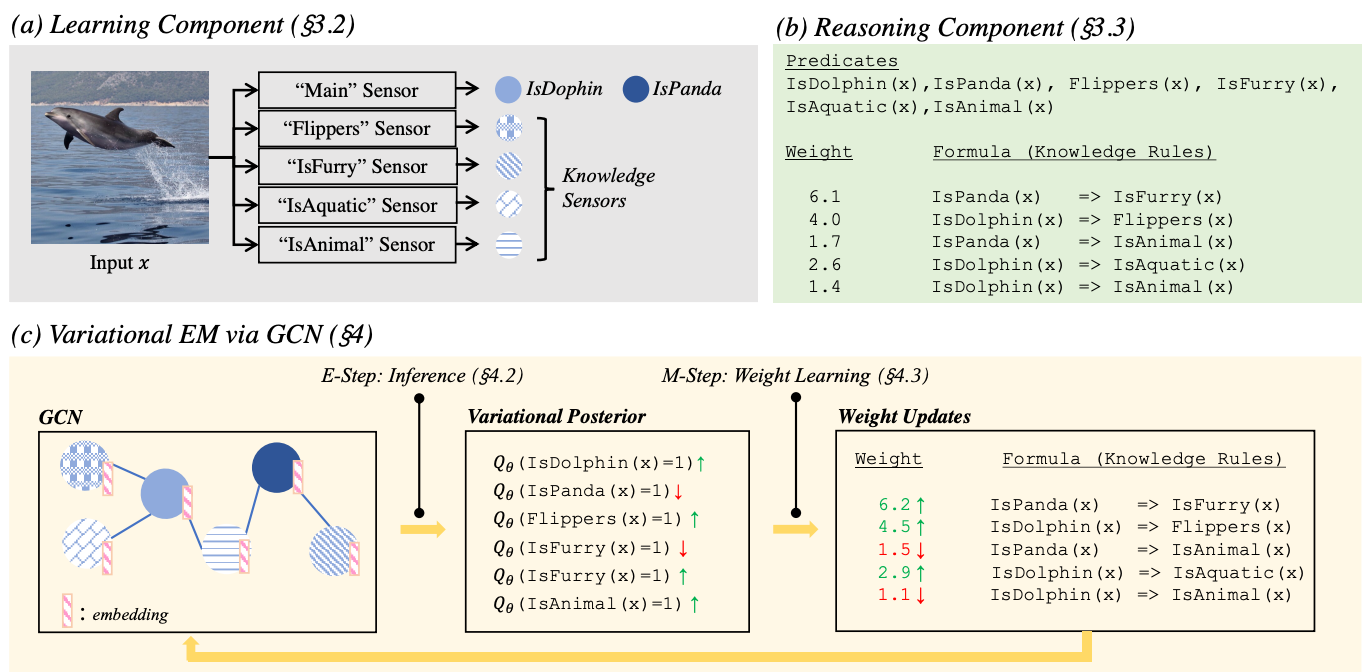

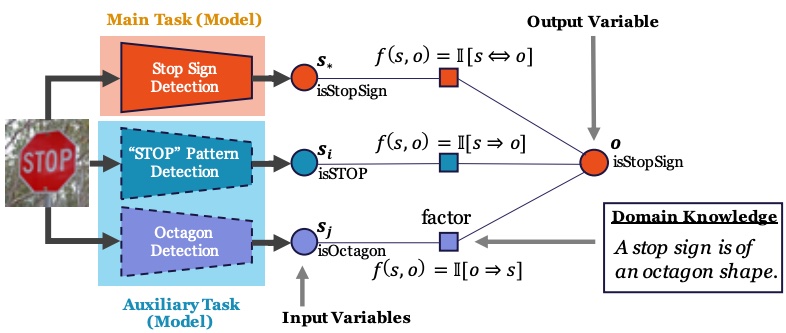

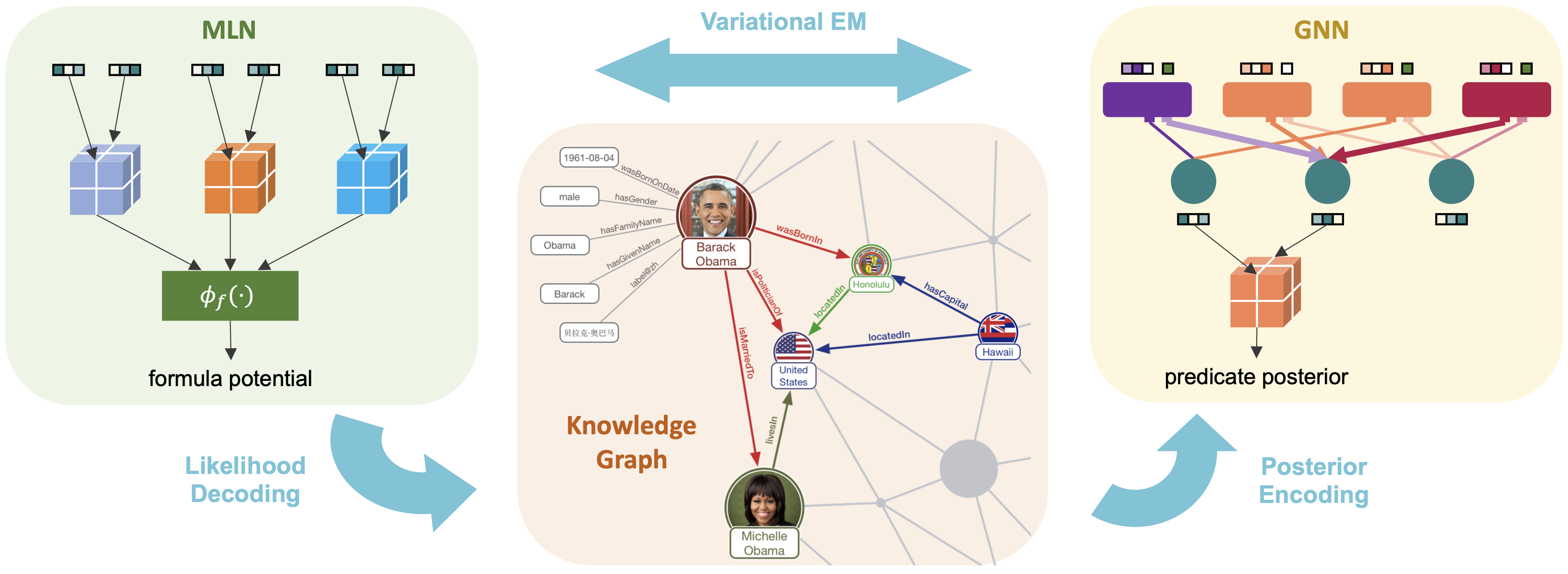

Several defenses against these threats have been proposed, but they are not resilient against intelligent adversaries that adapt dynamically to the deployed defenses. Thus, how to make machine learning models robust against such advanced adversaries remains largely an open question. Our research aims to provide robustness guarantees for different ML models through game-theoretic analysis, certification methods for different ML paradigms, and learning-with-reasoning ML pipelines that improve robustness certification.
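
As one concrete example of a robustness certificate, the sketch below follows the Gaussian randomized-smoothing bound of Cohen, Rosenfeld, and Kolter (2019), which the smoothing-based papers listed below build on: if the smoothed classifier's top class has probability at least `p_lower > 1/2` under Gaussian noise with standard deviation `sigma`, its prediction cannot change within an L2 radius of `sigma * Phi^{-1}(p_lower)`. Two simplifications are our own assumptions: the lower confidence bound uses Hoeffding's inequality (standard library only) instead of the Clopper-Pearson interval used by Cohen et al., which is looser but still valid, and the base classifier is a hypothetical one-dimensional threshold. Function and parameter names are illustrative.

```python
import math
import random
from collections import Counter
from statistics import NormalDist
from typing import Callable, List, Optional, Tuple

Classifier = Callable[[List[float]], int]

def noisy_counts(f: Classifier, x: List[float], sigma: float, m: int,
                 rng: random.Random) -> Counter:
    """Count base-classifier predictions on m Gaussian-perturbed copies of x."""
    counts: Counter = Counter()
    for _ in range(m):
        counts[f([xi + rng.gauss(0.0, sigma) for xi in x])] += 1
    return counts

def certify(f: Classifier, x: List[float], sigma: float, n0: int, n: int,
            alpha: float, seed: int = 0) -> Tuple[Optional[int], float]:
    """Return (class, certified L2 radius), or (None, 0.0) to abstain.

    The certificate holds with probability at least 1 - alpha over the sampling noise.
    """
    rng = random.Random(seed)
    # Step 1: guess the top class from a separate, small batch of samples.
    guess = noisy_counts(f, x, sigma, n0, rng).most_common(1)[0][0]
    # Step 2: count how often the guessed class wins on fresh samples.
    hits = noisy_counts(f, x, sigma, n, rng)[guess]
    # One-sided Hoeffding lower confidence bound on P[f(x + noise) = guess].
    p_lower = hits / n - math.sqrt(math.log(1.0 / alpha) / (2.0 * n))
    if p_lower <= 0.5:
        return None, 0.0
    return guess, sigma * NormalDist().inv_cdf(p_lower)

def base(z: List[float]) -> int:
    """Hypothetical base classifier: class 1 iff the first feature is non-negative."""
    return 1 if z[0] >= 0 else 0

label, radius = certify(base, [3.0], sigma=0.5, n0=100, n=2000, alpha=0.001)
print(label, round(radius, 2))
```

Larger `n` tightens the bound, and larger `sigma` can certify larger radii at the cost of base-classifier accuracy under noise. Keeping the class-selection samples separate from the estimation samples is what makes the `1 - alpha` guarantee valid.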

Recent Publications

- Game-theoretic modeling & Certifiably robust ML
- Other robust ML paradigms (e.g., ensemble, FL, RL)
- Threat model exploration

Game-theoretic modeling & Certifiably robust ML

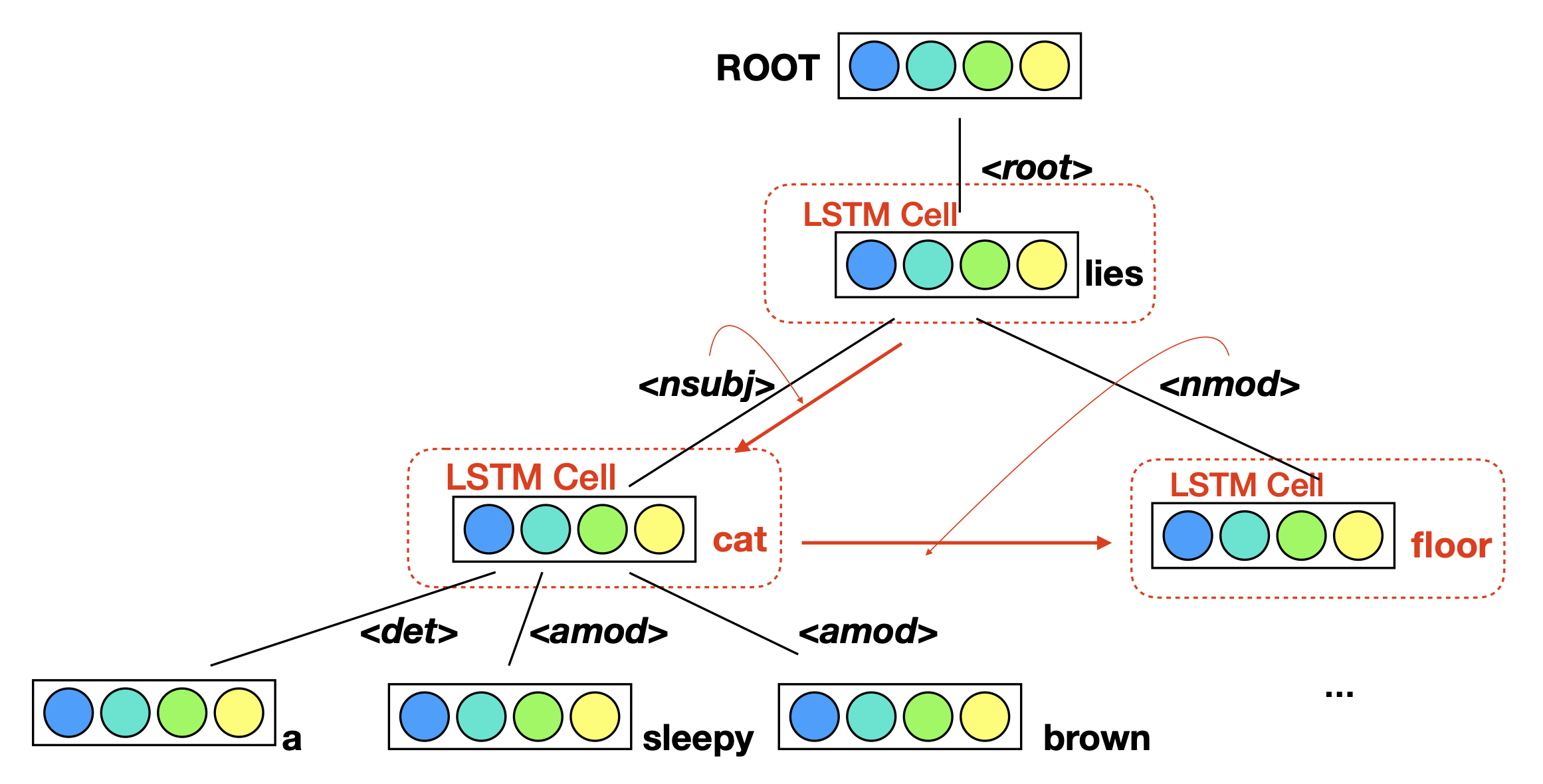

Knowledge-enabled certifiably robust ML pipelines

|

Improving Certified Robustness via Statistical Learning with Logical Reasoning Zhuolin Yang, Zhikuan Zhao, Boxin Wang, Jiawei Zhang, Linyi Li, Hengzhi Pei, Bojan Karlaš, Ji Liu, Heng Guo, Ce Zhang, Bo Li. NeurIPS 2022

|

|

CARE: Certifiably Robust Learning with Reasoning via Variational Inference Jiawei Zhang, Linyi Li, Ce Zhang, Bo Li. SaTML 2022

|

|

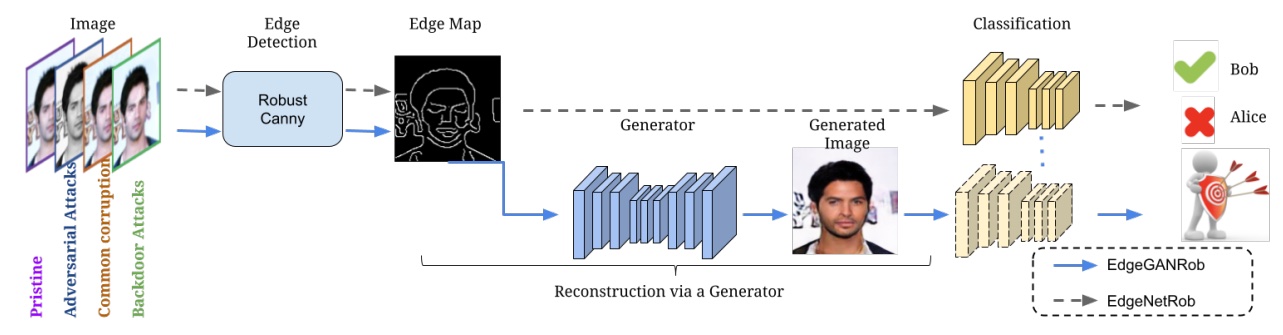

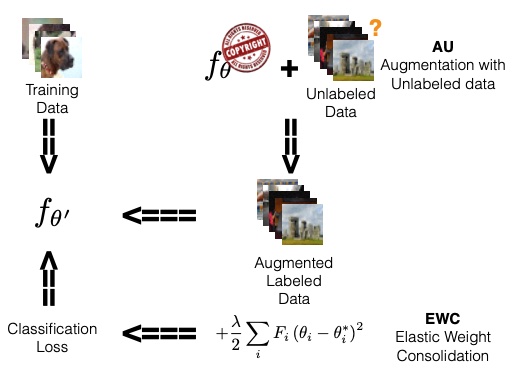

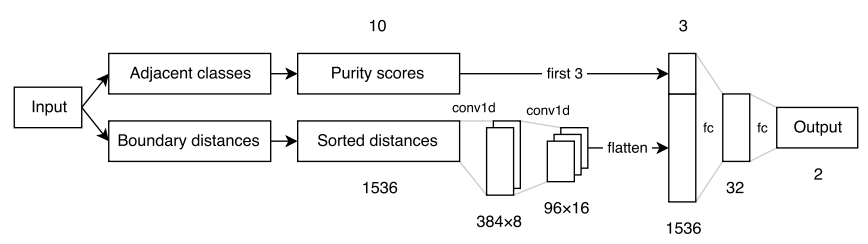

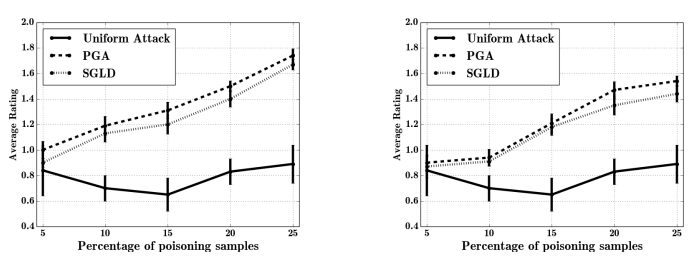

Knowledge-Enhanced Machine Learning Pipeline against Diverse Adversarial Attacks Nezihe Merve Grel*, Xiangyu Qi*, Luka Rimanic, Ce Zhang, Bo Li. ICML 2021

|

|

Efficient Probabilistic Logic Reasoning with Graph Neural Networks Yuyu Zhang, Xinshi Chen, Yuan Yang, Arun Ramamurthy, Bo Li, Yuan Qi, Le Song. ICLR 2020

|

Certifying ML models

|

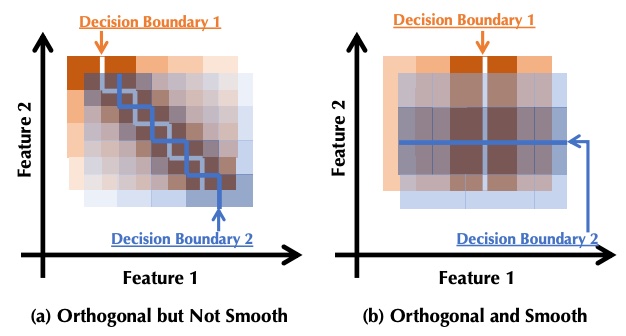

LOT: Layer-wise Orthogonal Training on Improving l2 Certified Robustness Xiaojun Xu, Linyi Li, Bo Li. NeurIPS 2022

|

|

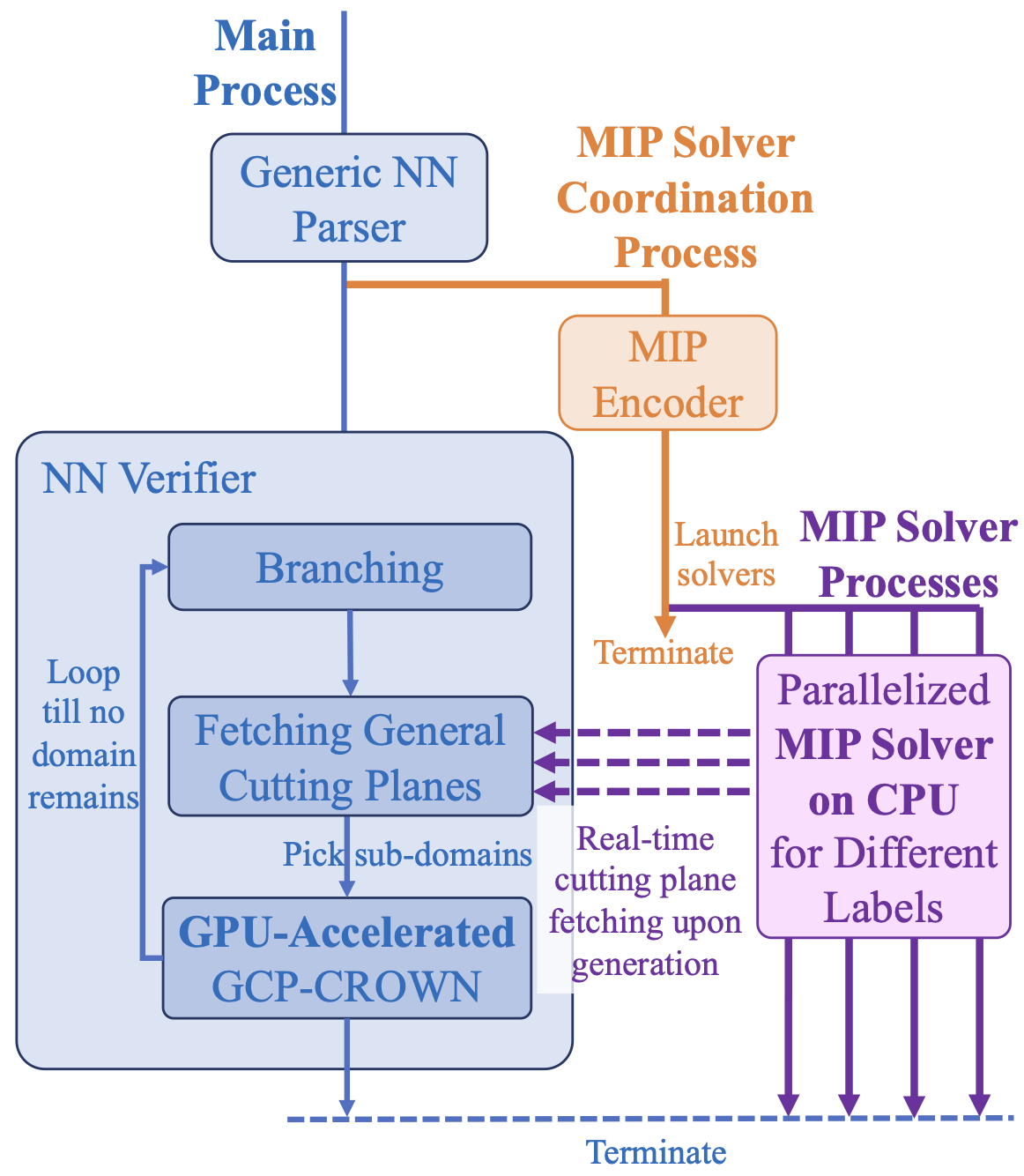

General Cutting Planes for Bound-Propagation-Based Neural Network Verification Huan Zhang, Shiqi Wang, Kaidi Xu, Linyi Li, Bo Li, Suman Jana, Cho-Jui Hsieh, J Zico Kolter. NeurIPS 2022

|

|

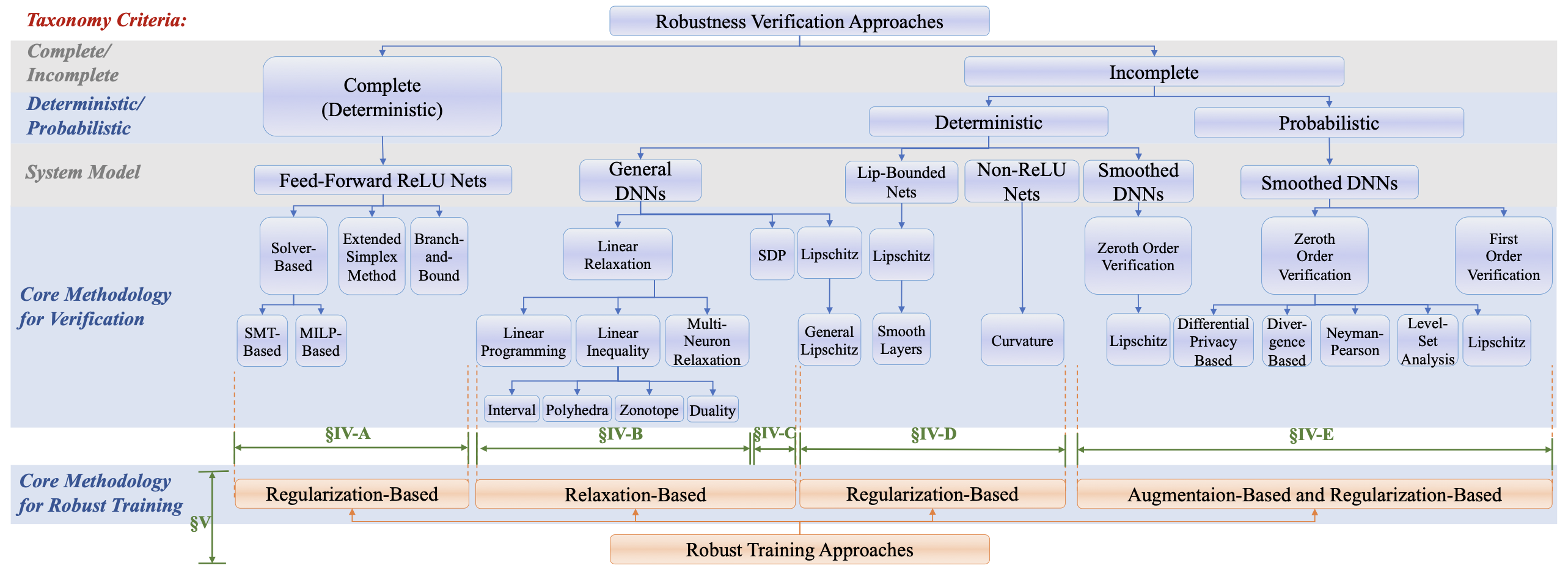

SoK: Certified Robustness for Deep Neural Networks Linyi Li, Tao Xie, Bo Li. IEEE Symposium on Security and Privacy (Oakland), 2022

|

|

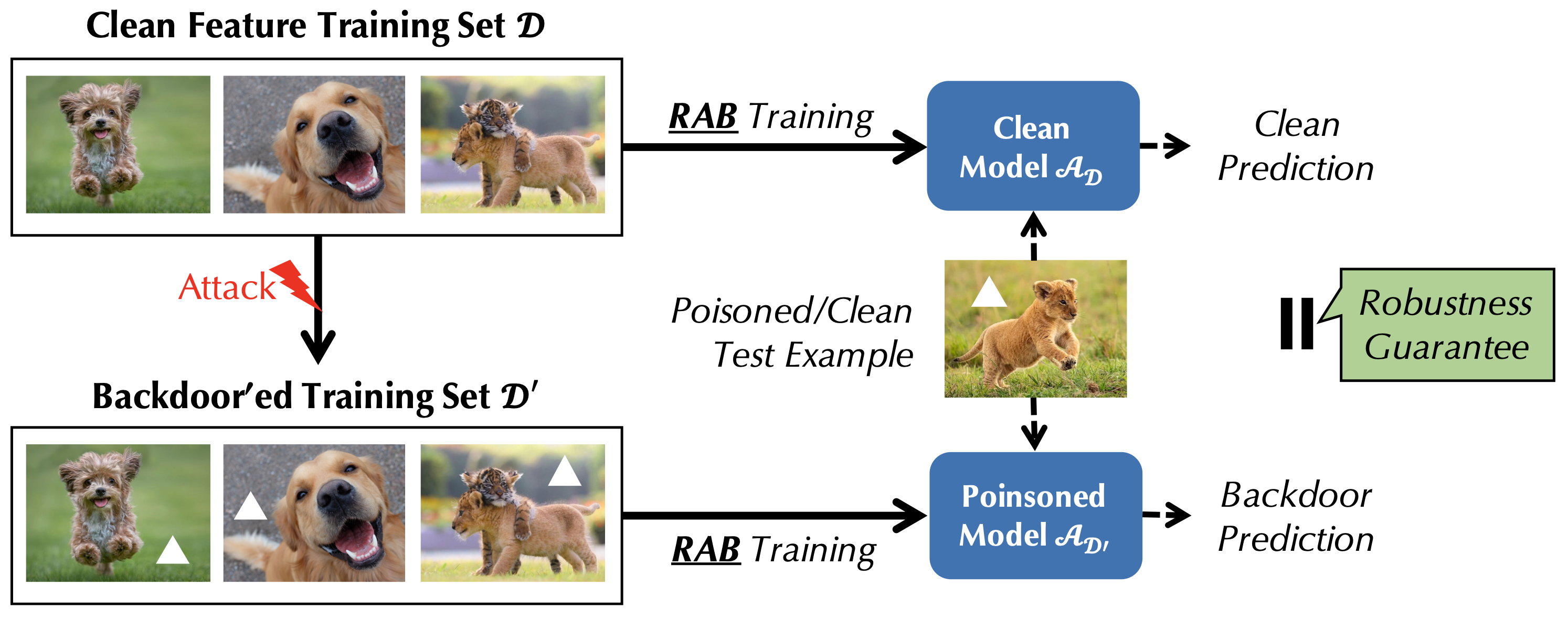

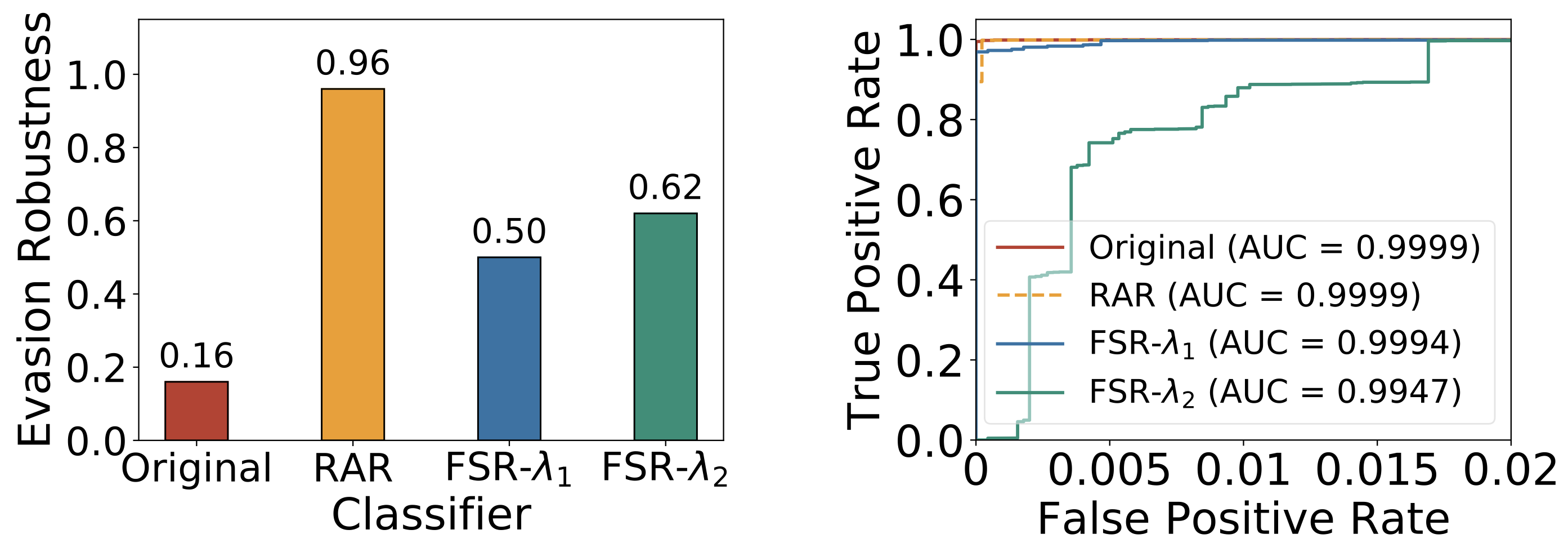

RAB: Provable Robustness Against Backdoor Attacks Maurice Weber, Xiaojun Xu, Bojan Karlas, Ce Zhang, Bo Li. IEEE Symposium on Security and Privacy (Oakland), 2022

|

|

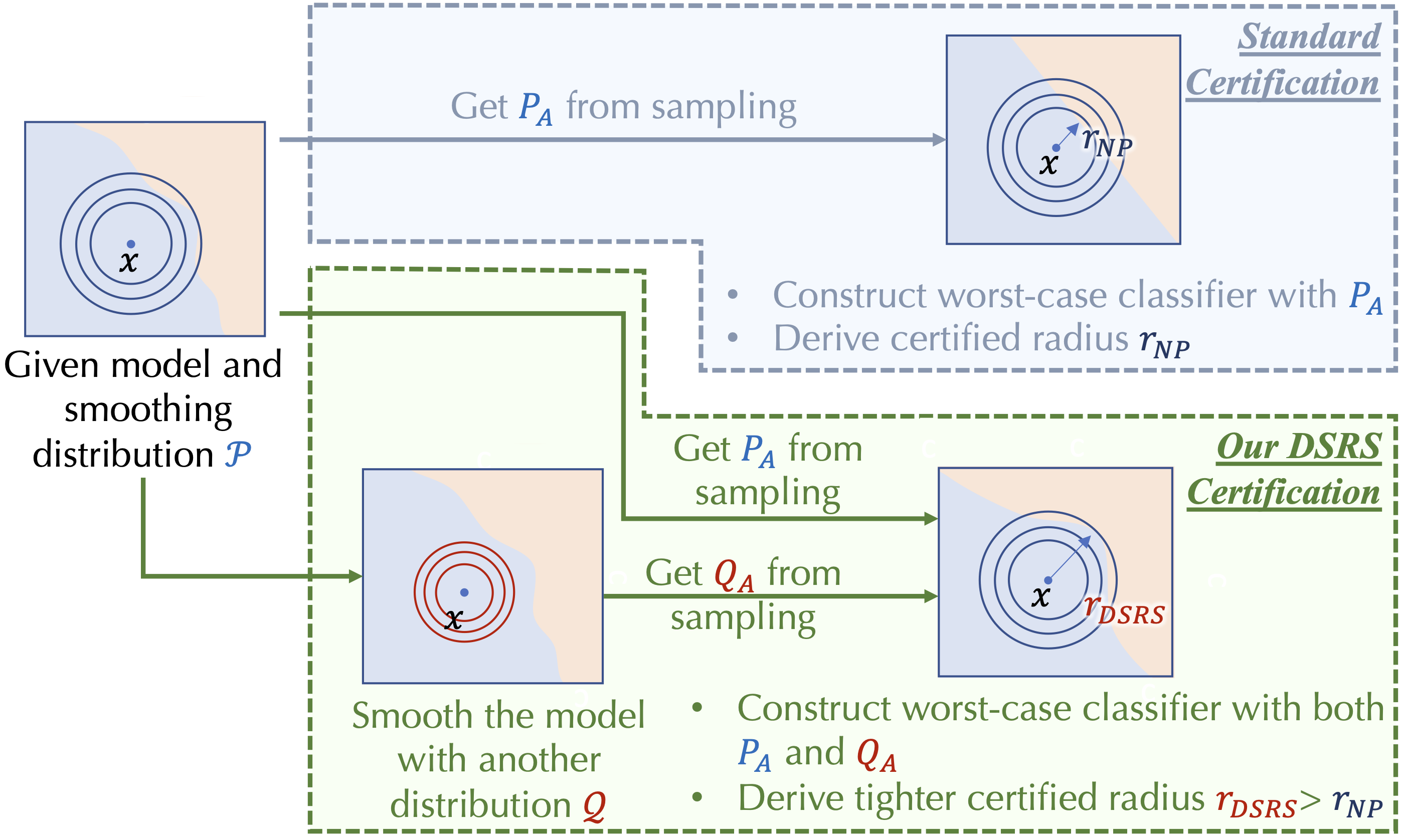

Double Sampling Randomized Smoothing Linyi Li, Jiawei Zhang, Tao Xie, Bo Li. ICML 2022

|

|

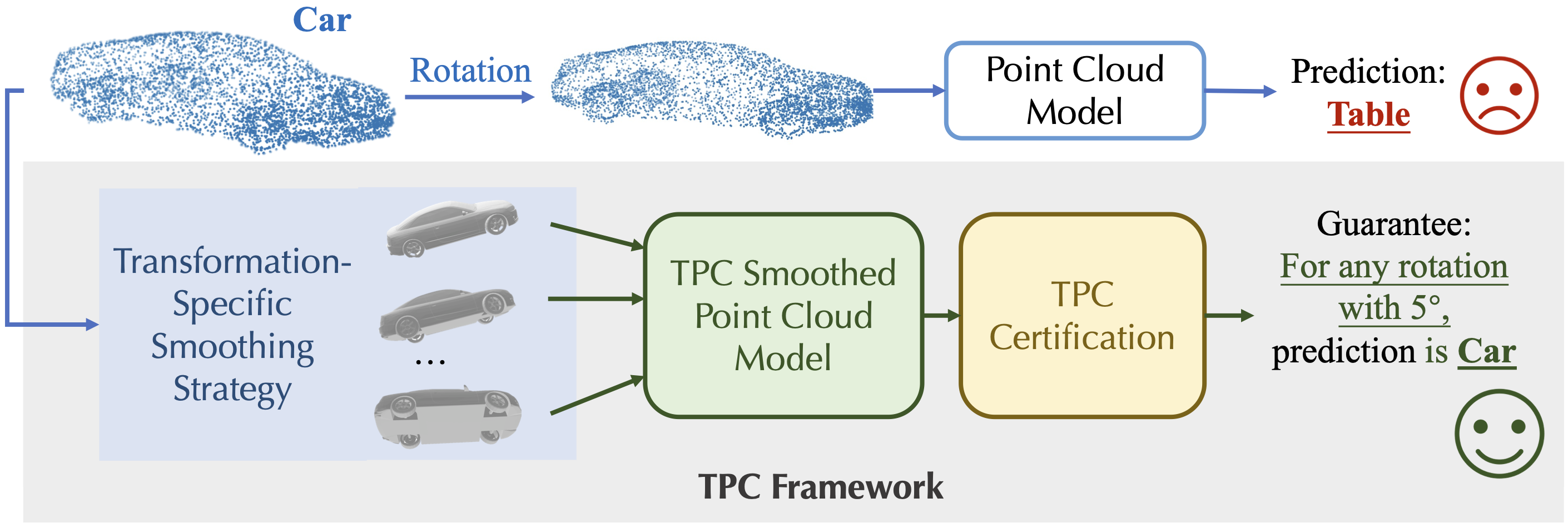

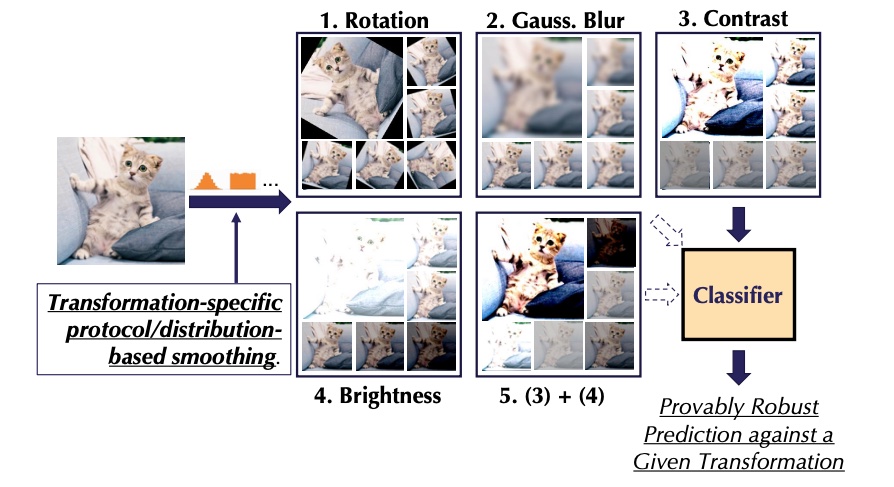

TPC: Transformation-Specific Smoothing for Point Cloud Models Wenda Chu, Linyi Li, Bo Li. ICML 2022

|

|

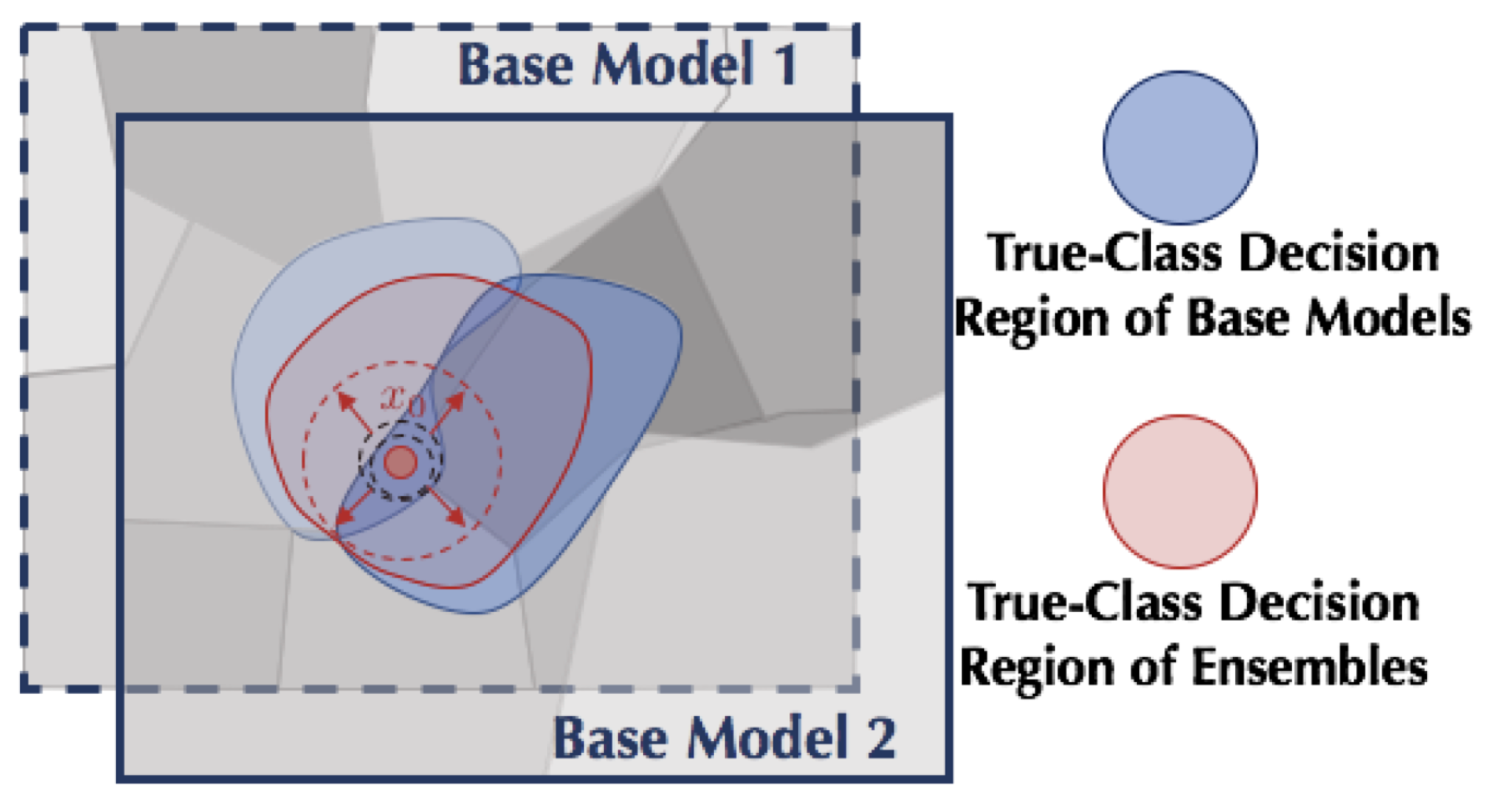

On the Certified Robustness for Ensemble Models and Beyond Zhuolin Yang*, Linyi Li*, Xiaojun Xu, Bhavya Kailkhura, Tao Xie, Bo Li. ICLR 2022

|

|

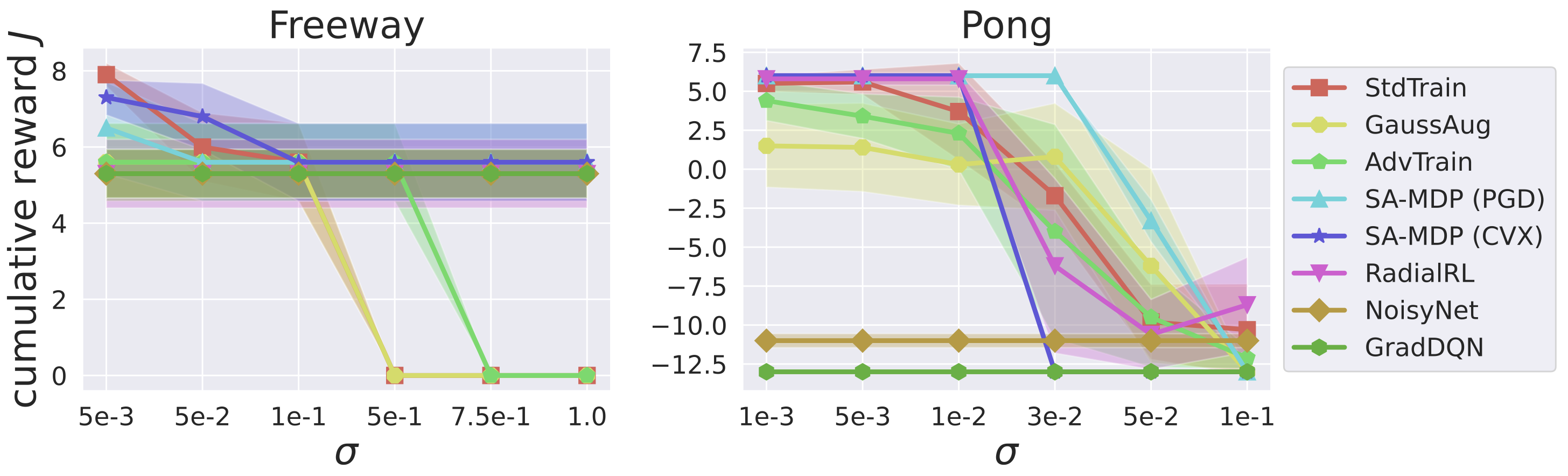

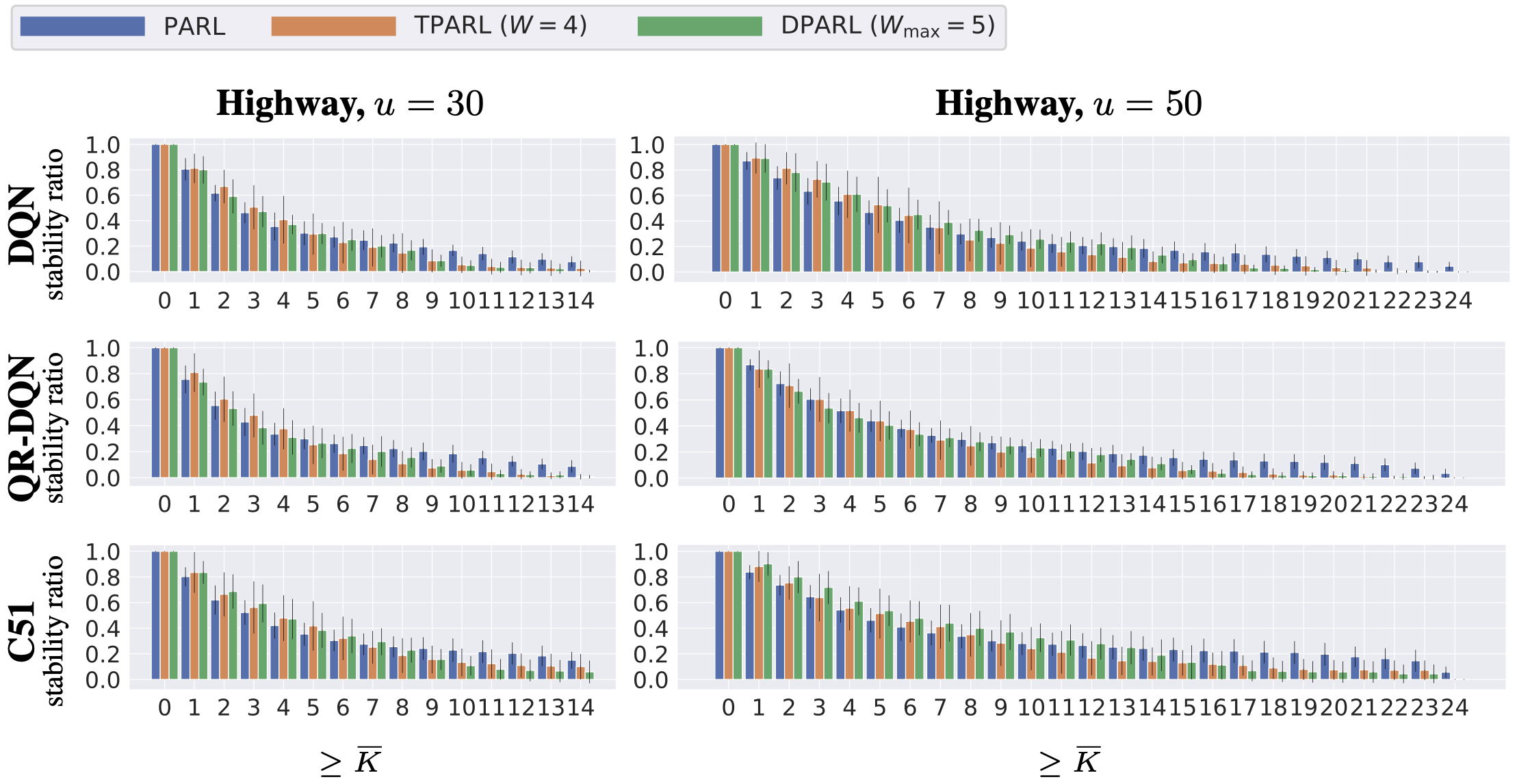

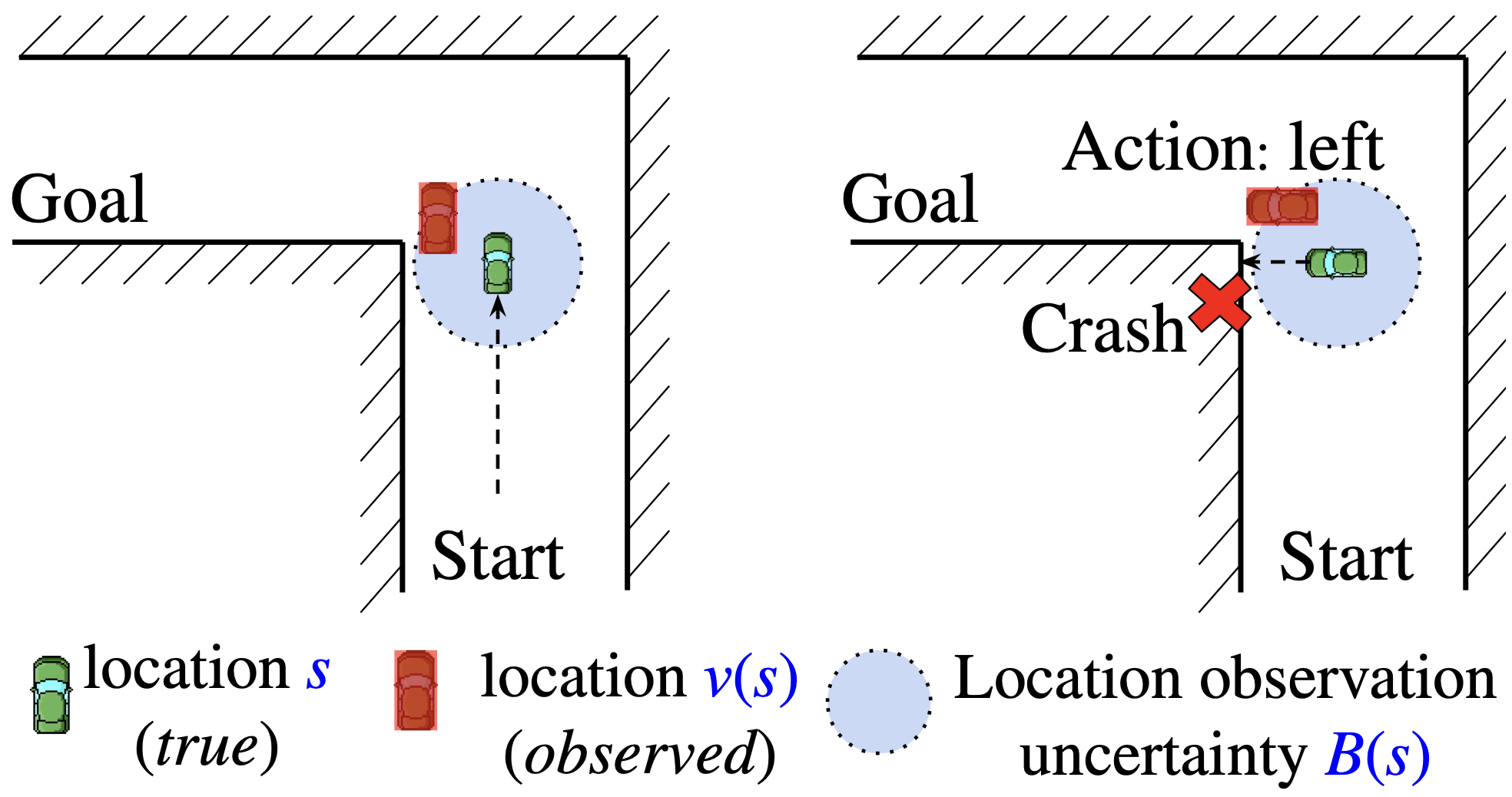

CROP: Certifying Robust Policies for Reinforcement Learning through Functional Smoothing Fan Wu, Linyi Li, Zijian Huang, Yevgeniy Vorobeychik, Ding Zhao, Bo Li. ICLR 2022

|

|

COPA: Certifying Robust Policies for Offline Reinforcement Learning against Poisoning Attacks Fan Wu*, Linyi Li*, Chejian Xu, Huan Zhang, Bhavya Kailkhura, Krishnaram Kenthapadi, Ding Zhao, Bo Li. ICLR 2022

|

|

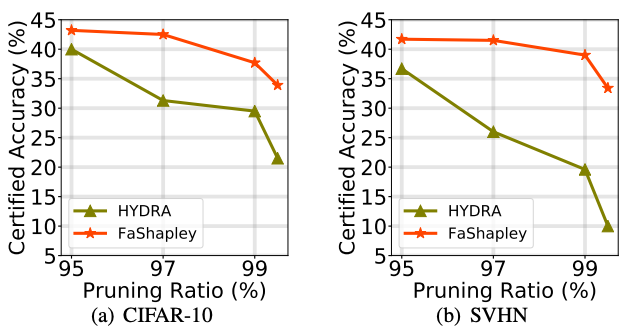

FaShapley: Fast and Approximated Shapley Based Model Pruning Towards Certifiably Robust DNNs Mintong Kang, Linyi Li, Bo Li. SaTML 2022

|

|

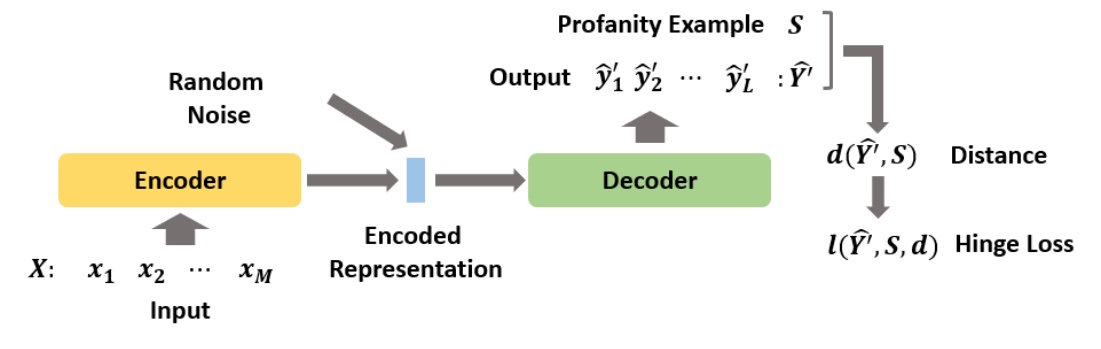

Profanity-Avoiding Training Framework for Seq2seq Models with Certified Robustness Hengtong Zhang, Tianhang Zheng, Yaliang Li, Jing Gao, Lu Su, Bo Li. EMNLP 2021

|

|

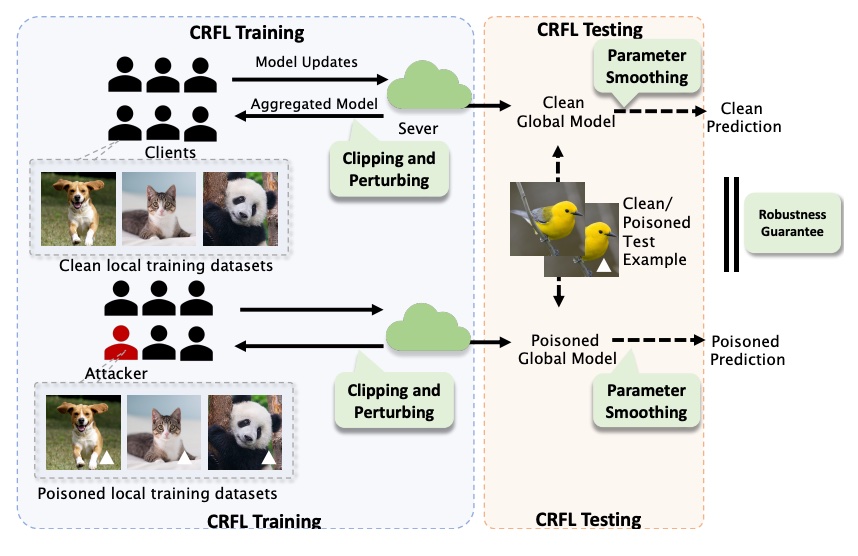

CRFL: Certifiably Robust Federated Learning against Backdoor Attacks Chulin Xie, Minghao Chen, Pin-Yu Chen, Bo Li. ICML 2021

|

|

TSS: Transformation-Specific Smoothing for Robustness Certification Linyi Li*, Maurice Weber*, Xiaojun Xu, Luka Rimanic, Bhavya Kailkhura, Tao Xie, Ce Zhang, Bo Li. CCS 2021

|

|

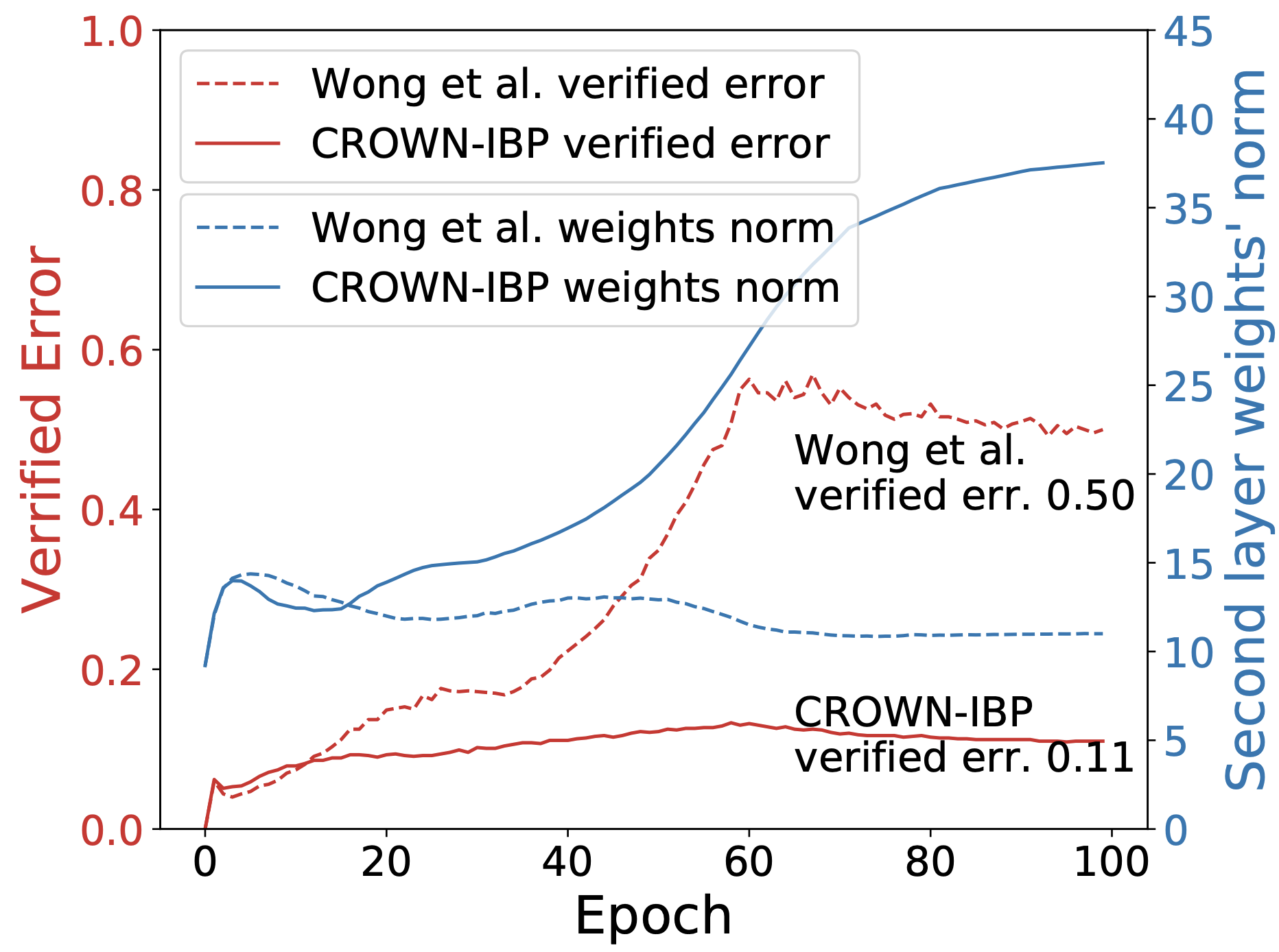

Towards Stable and Efficient Training of Verifiably Robust Neural Networks Huan Zhang, Hongge Chen, Chaowei Xiao, Sven Gowal, Robert Stanforth, Bo Li, Duane Boning, Cho-Jui Hsieh. ICLR 2020

|

|

Robustra: Training Provable Robust Neural Networks over Reference Adversarial Space Linyi Li, Zexuan Zhong, Tao Xie, Bo Li. IJCAI 2019

|

Robust learning based on game-theoretic analysis

|

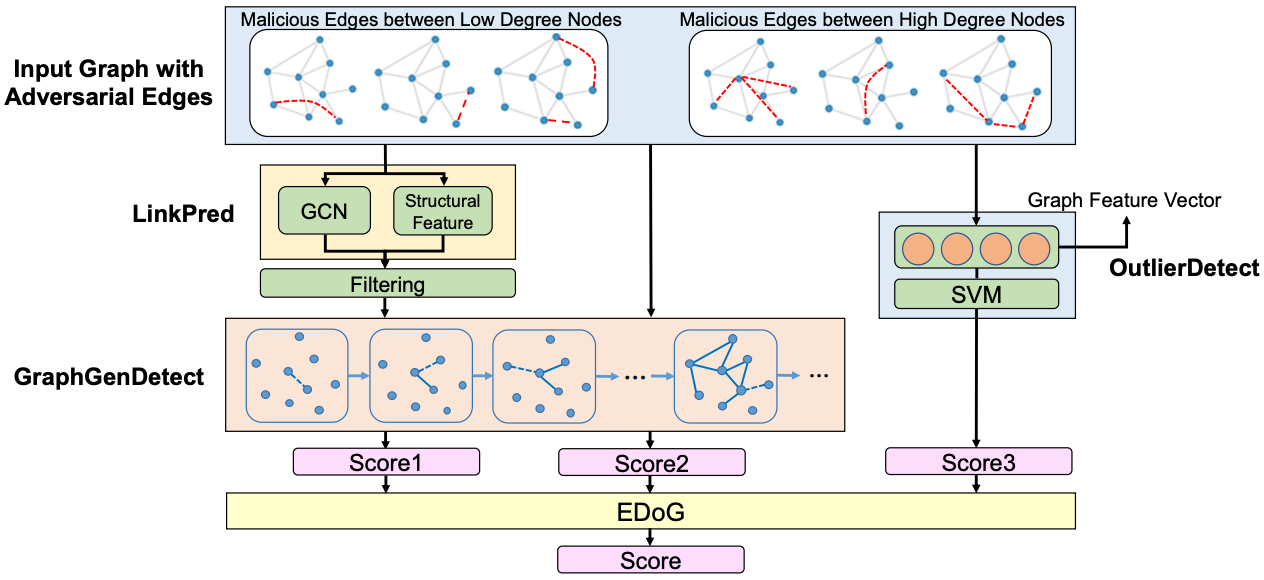

EDoG: Adversarial Edge Detection For Graph Neural Networks Xiaojun Xu, Hanzhang Wang, Alok Lal, Carl A. Gunter, Bo Li. SaTML 2022

|

|

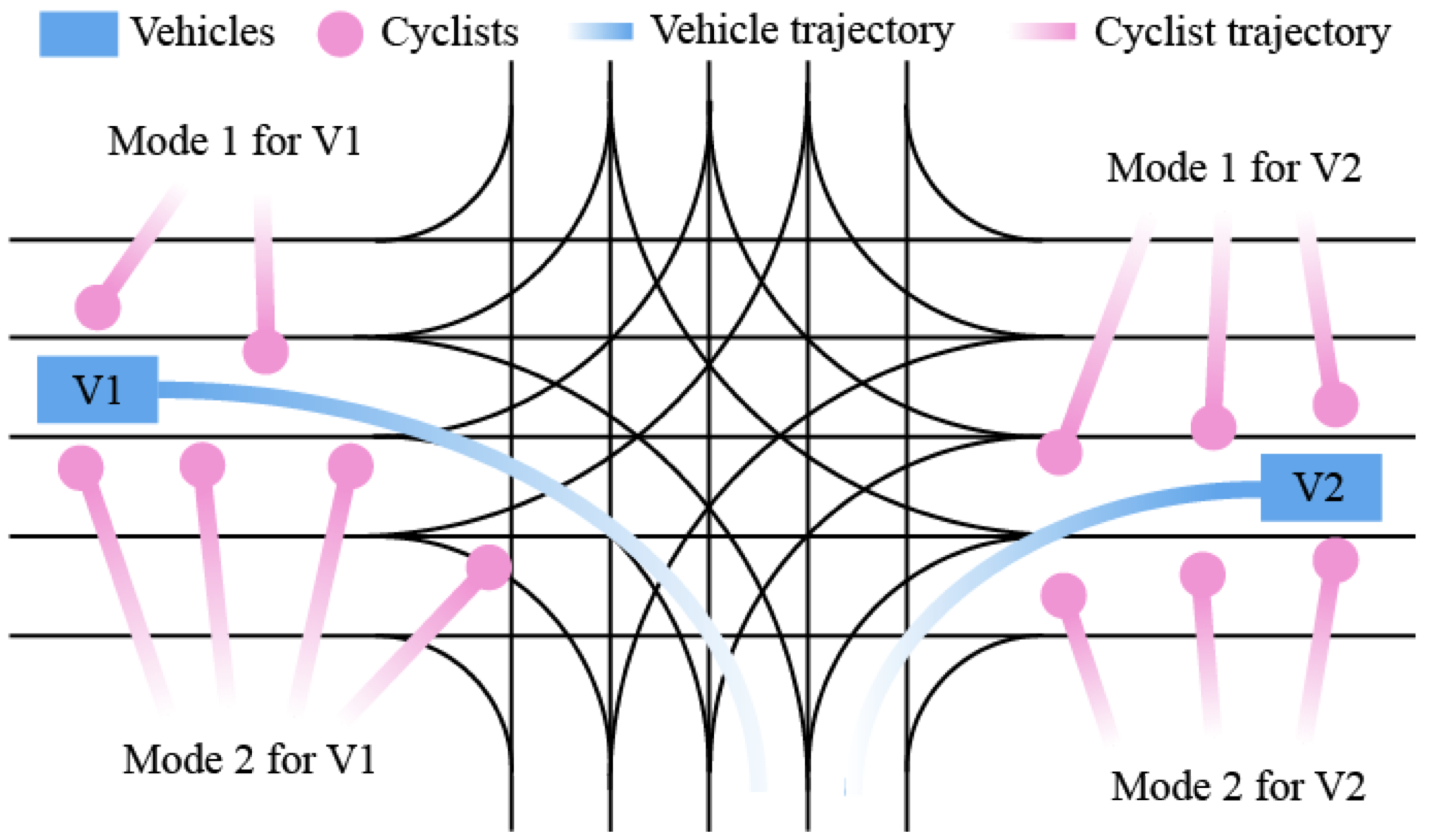

Multimodal Safety-Critical Scenarios Generation for Decision-Making Algorithms Evaluation Wenhao Ding, Baiming Chen, Bo Li, Ji Eun Kim, Ding Zhao. ICRA 2021

|

|

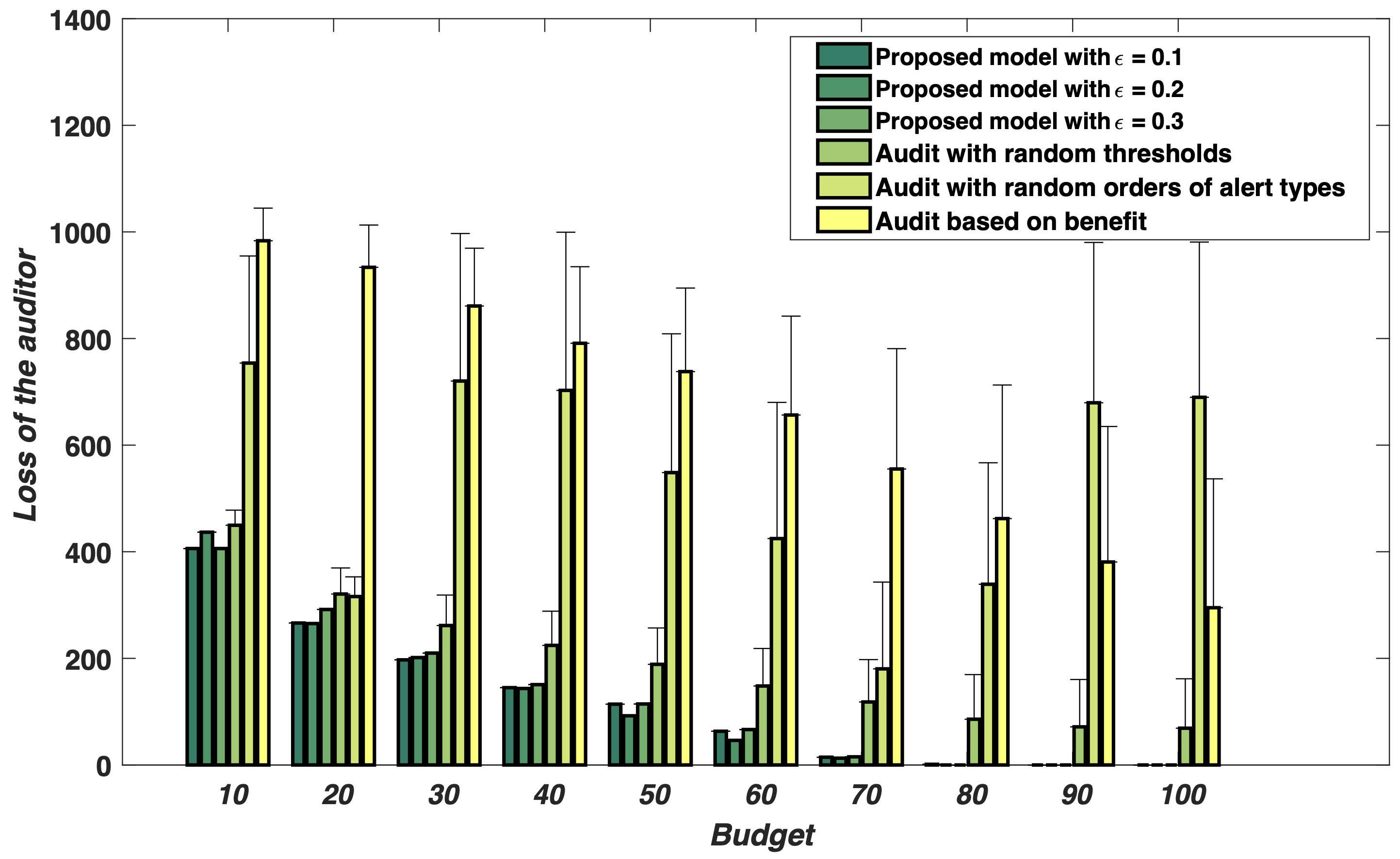

To Warn or Not to Warn: Online Signaling in Audit Games Chao Yan, Haifeng Xu, Yevgeniy Vorobeychik, Bo Li, Daniel Fabbri, Bradley Malin. ICDE 2020

|

|

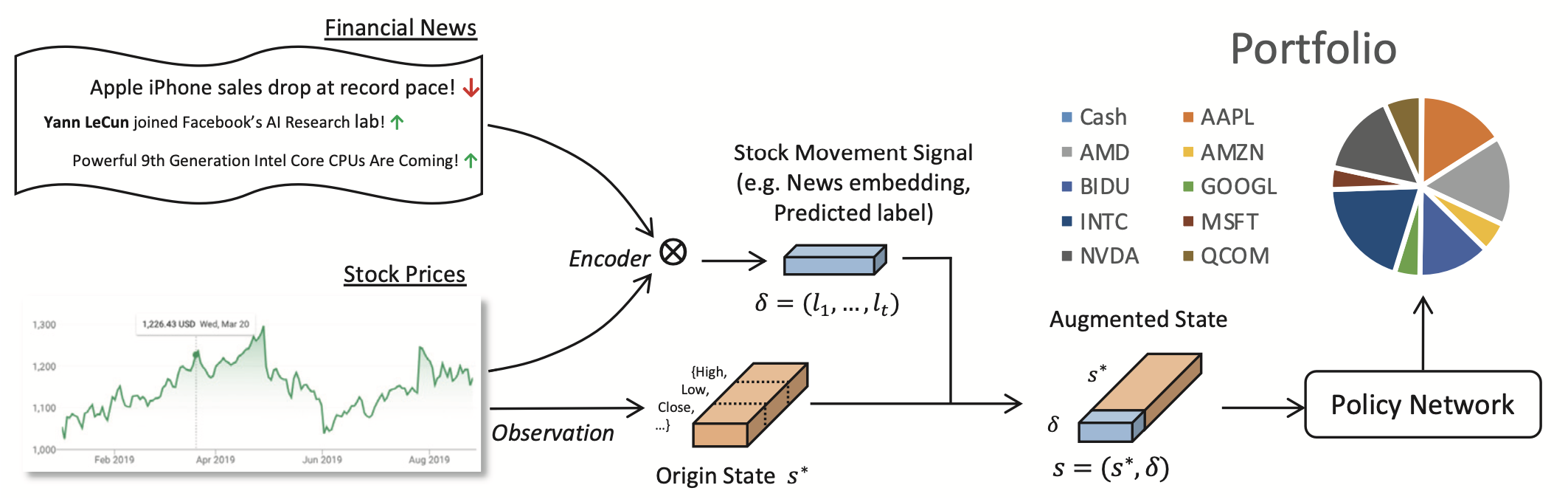

Reinforcement-Learning based Portfolio Management with Augmented Asset Movement Prediction States Yunan Ye, Hengzhi Pei, Boxin Wang, Pin-Yu Chen, Yada Zhu, Bo Li. AAAI 2020

|

|

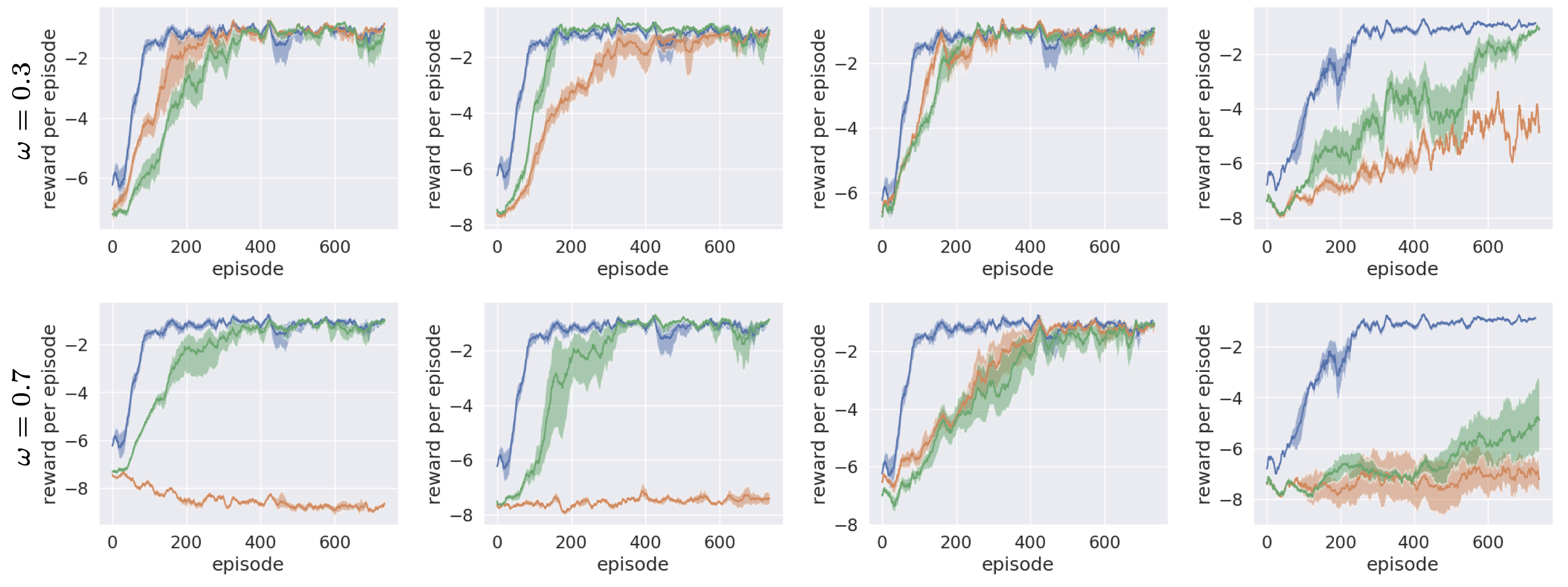

Reinforcement Learning with Perturbed Rewards Jingkang Wang, Yang Liu, Bo Li. AAAI 2020

|

|

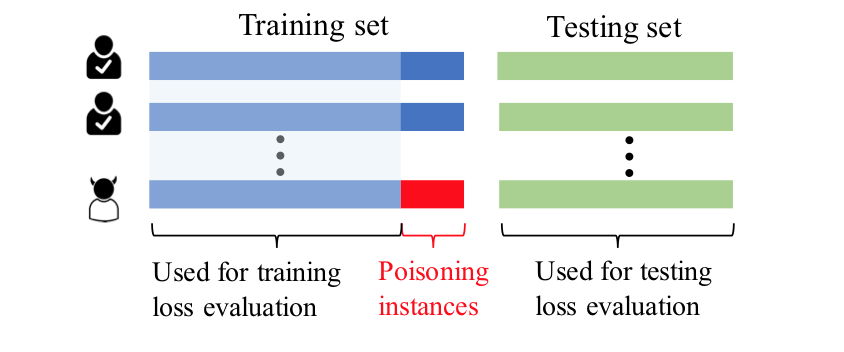

Poisoning Attacks on Data-Driven Utility Learning in Games Ruoxi Jia, Ioannis Konstantakopoulos, Bo Li, Dawn Song, Costas J. Spanos. ACC 2018

|

|

Get Your Workload in Order: Game Theoretic Prioritization of Database Auditing Chao Yan, Bo Li, Yevgeniy Vorobeychik, Aron Laszka, Daniel Fabbri, Bradley Malin. ICDE 2018

|

|

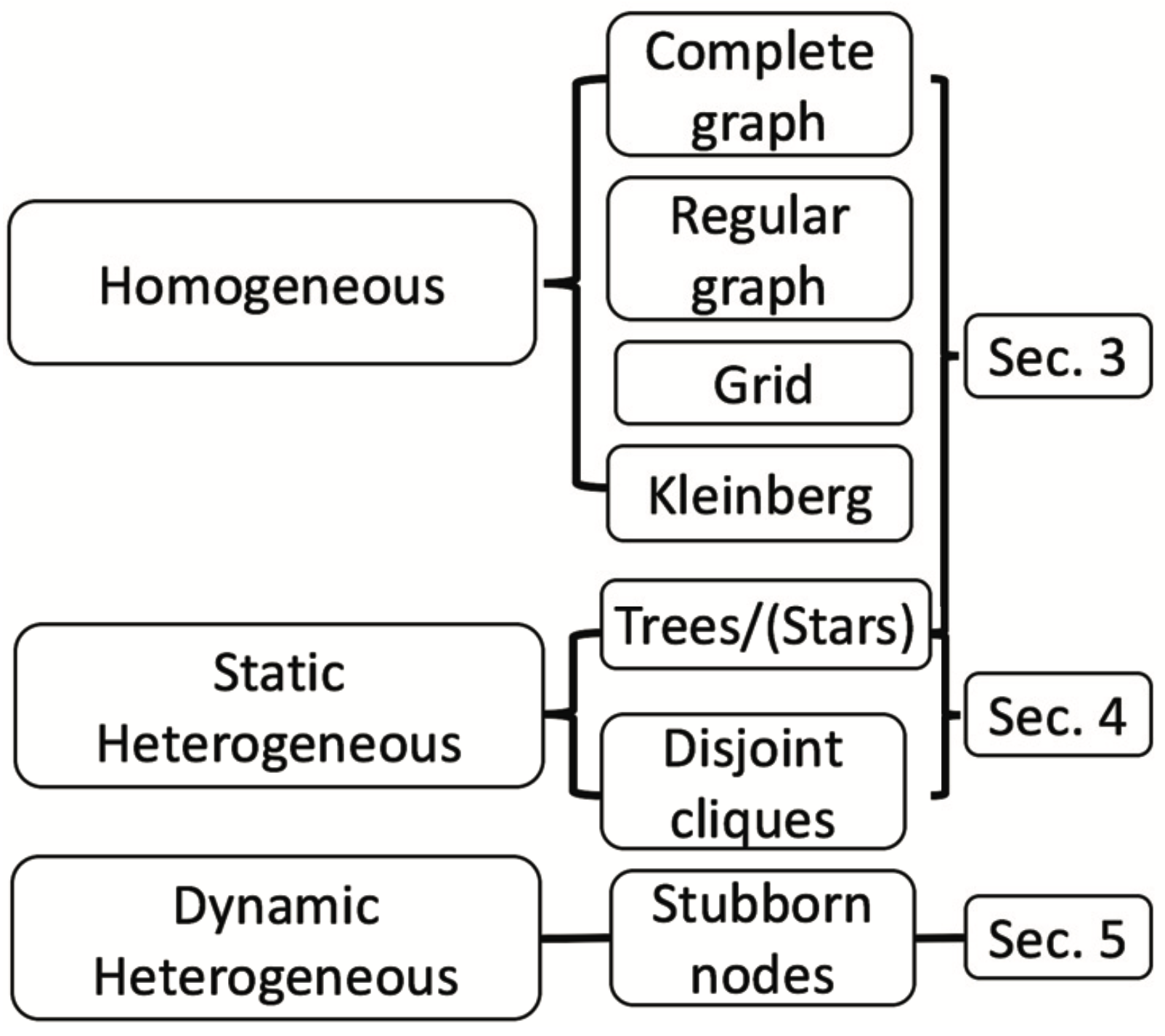

Engineering Agreement: The Naming Game with Asymmetric and Heterogeneous Agents J. Gao, B. Li, G. Schoenebeck and F. Yu. AAAI 2017

|

|

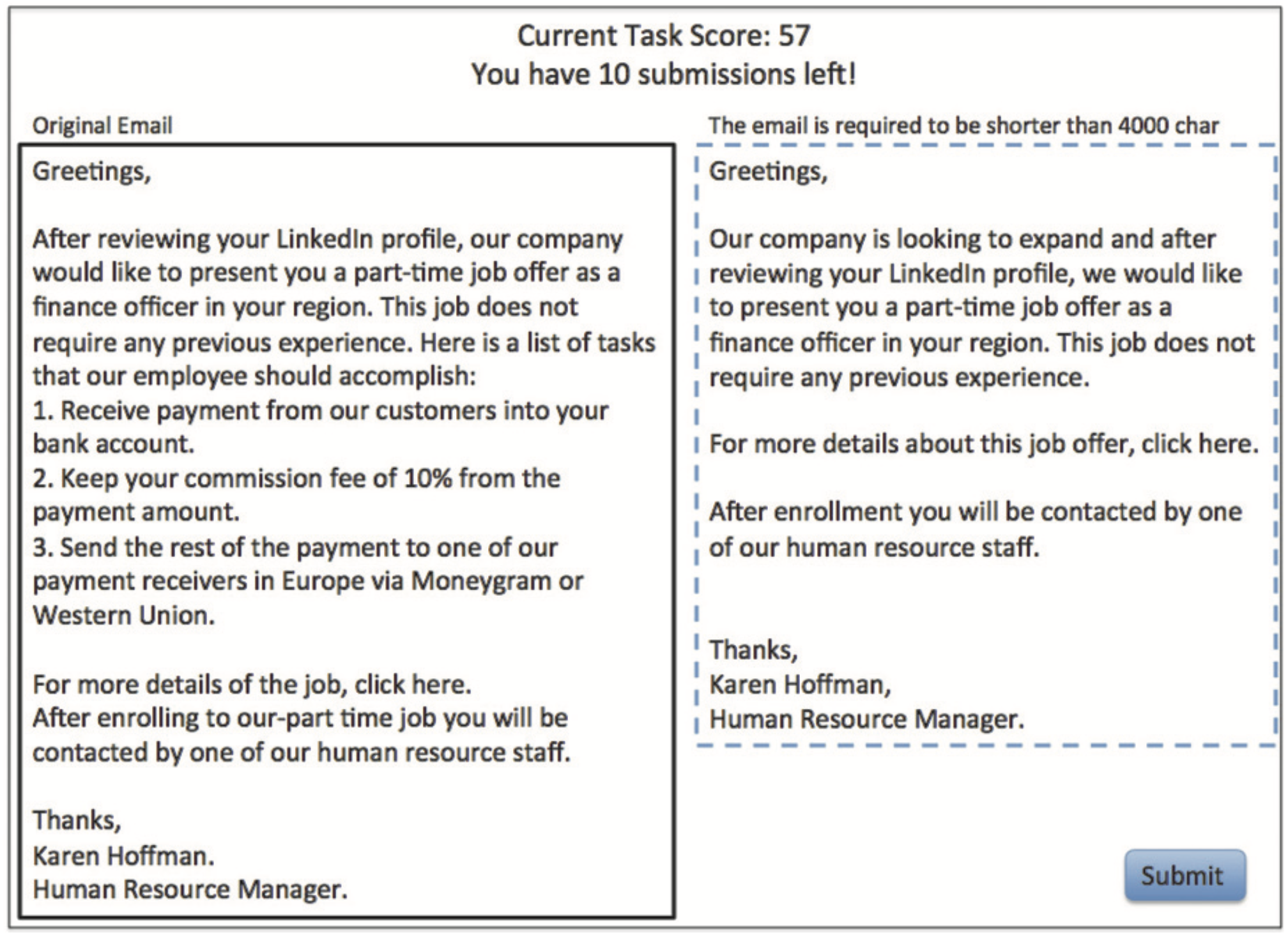

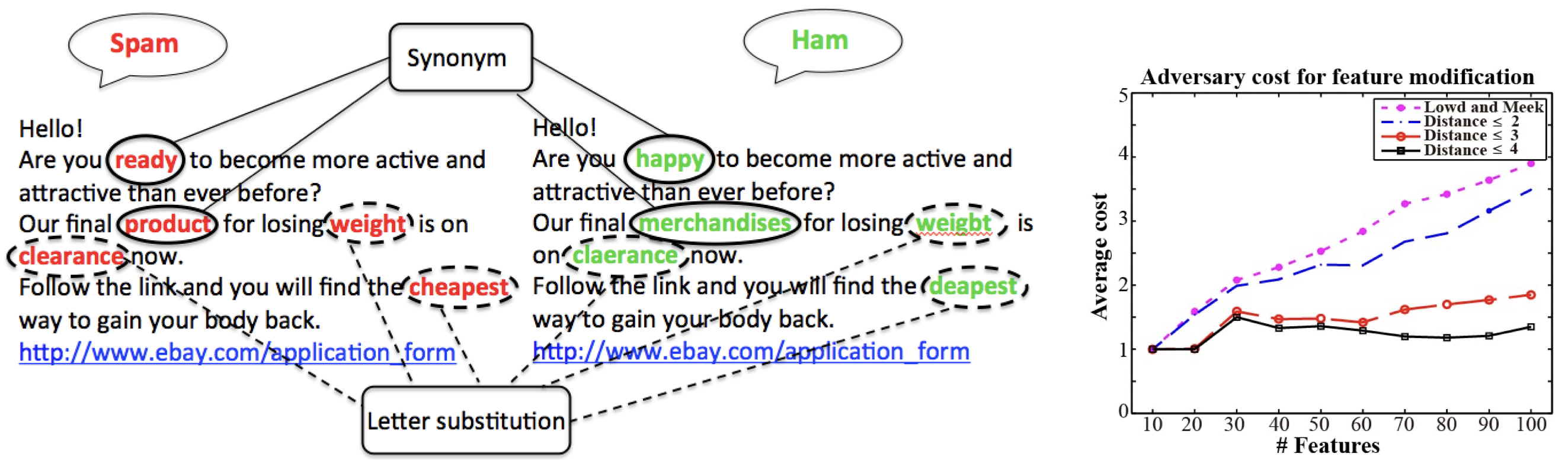

Behavioral Experiments in Email Filter Evasion L. Ke, B. Li , Y. Vorobeychik. AAAI 2016

|

|

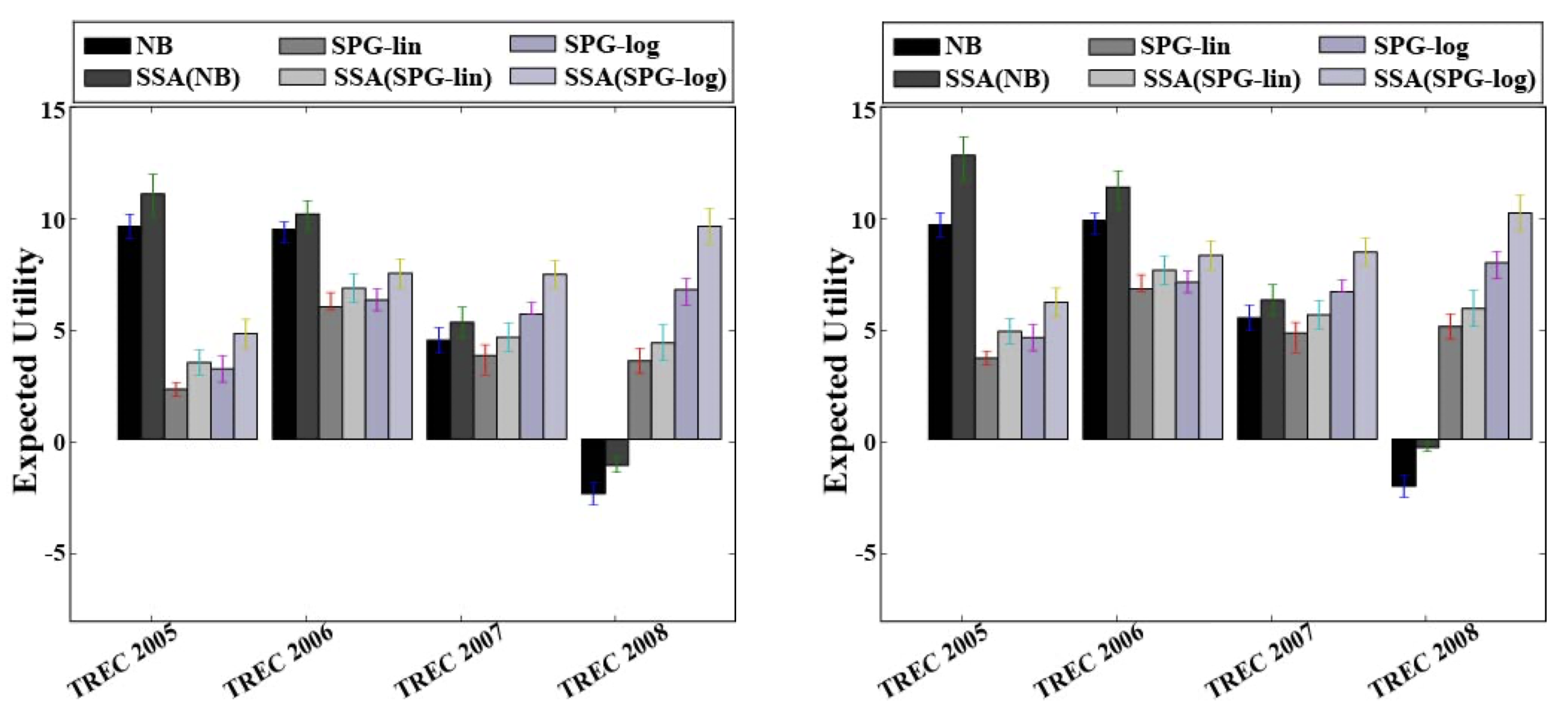

Scalable Optimization of Randomized Operational Decisions in Adversarial Classification Settings B. Li and Y. Vorobeychik. AISTATS 2015

|

|

Feature corss-substitution in adversarial classification B. Li and Y. Vorobeychik. NeurIPS 2014

|

Other robust ML paradigms (e.g., ensemble, FL, RL)

|

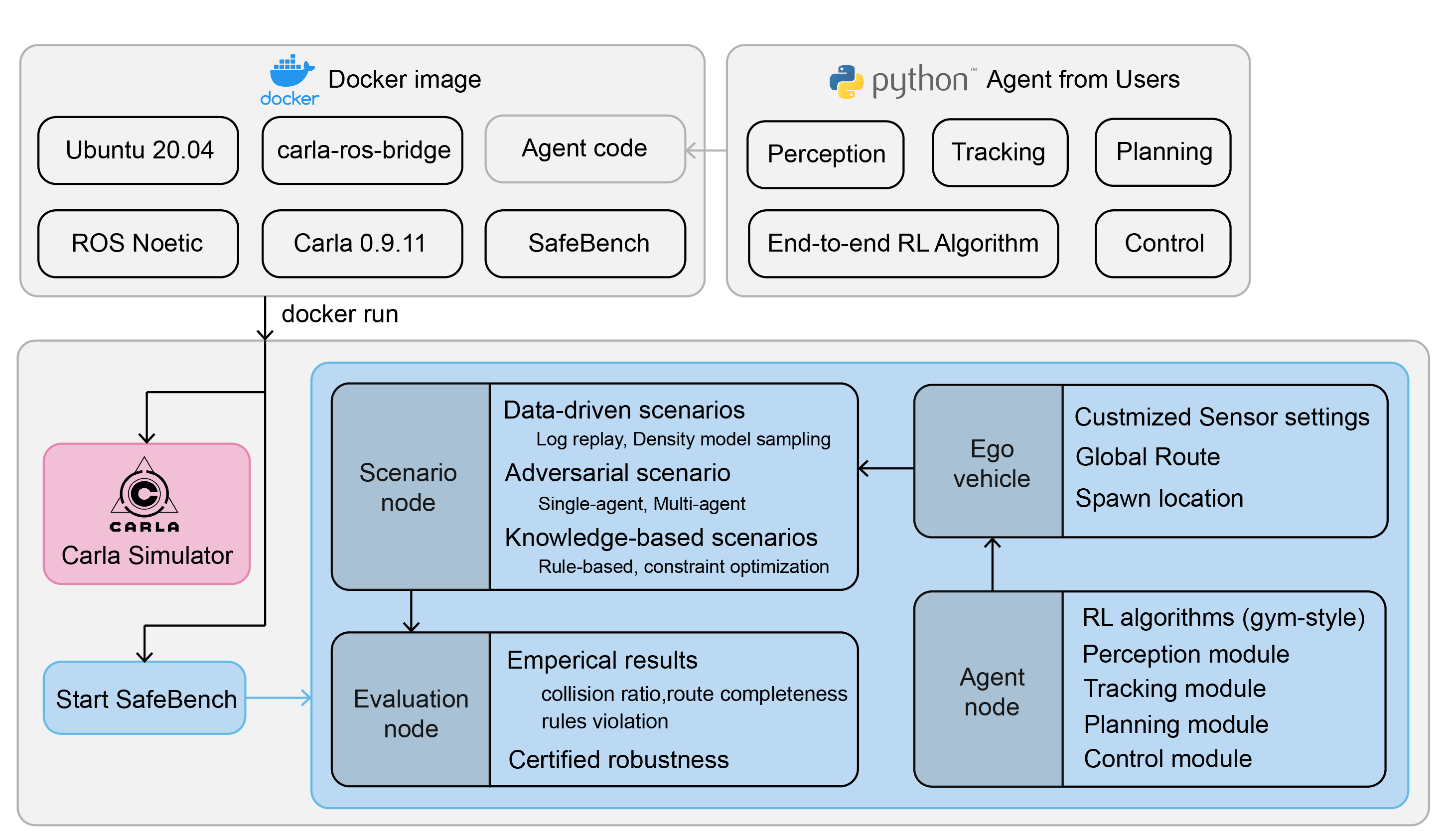

SafeBench: A Benchmarking Platform for Safety Evaluation of Autonomous Vehicles Chejian Xu*, Wenhao Ding*, Weijie Lyu, Zuxin Liu, Shuai Wang, Yihan He, Hanjiang Hu, Ding Zhao, Bo Li. NeurIPS 2022

|

|

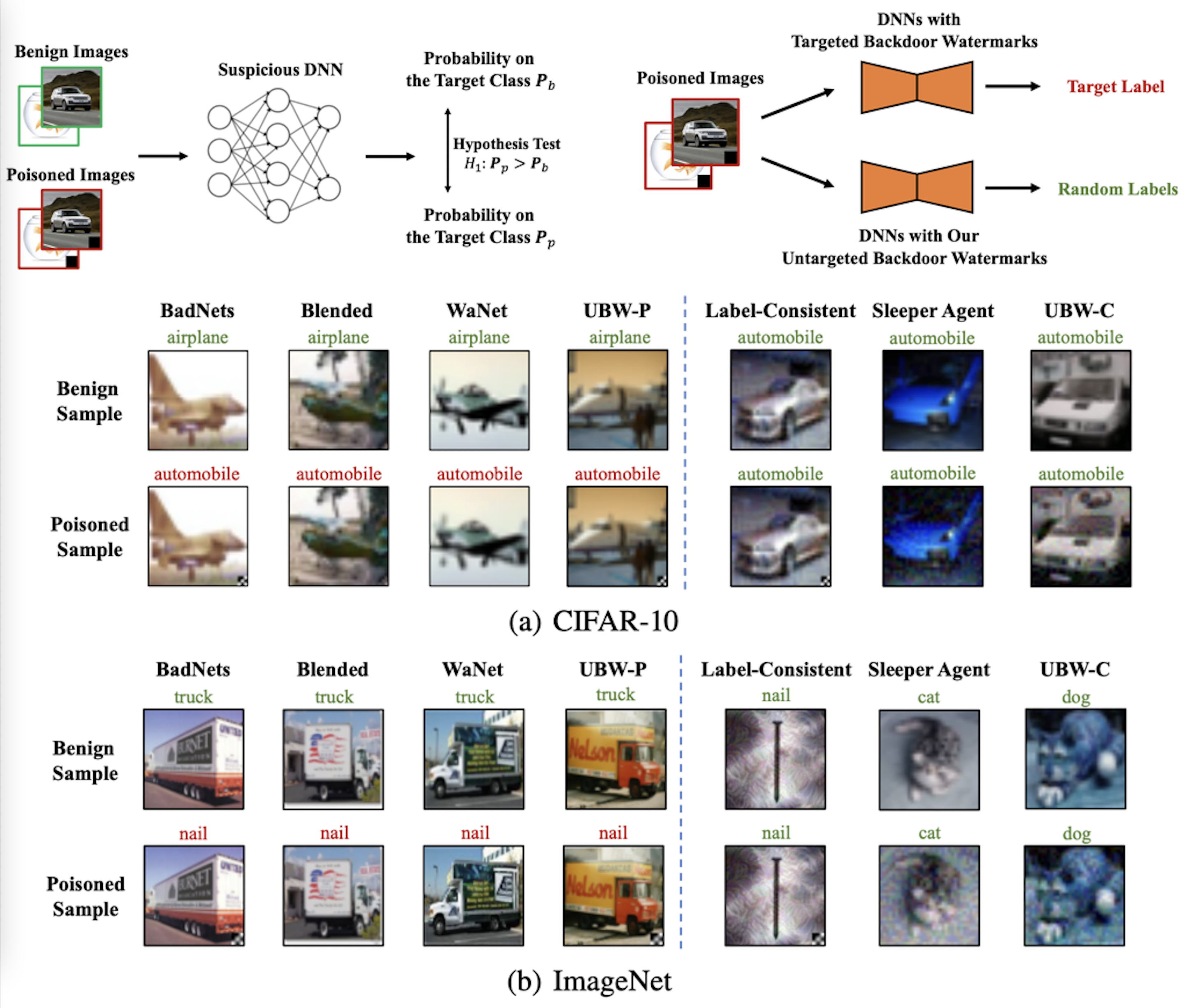

Untargeted Backdoor Watermark: Towards Harmless and Stealthy Dataset Copyright Protection Yiming Li, Yang Bai, Yong Jiang, Yong Yang, Shu-Tao Xia, Bo Li. NeurIPS 2022

|

|

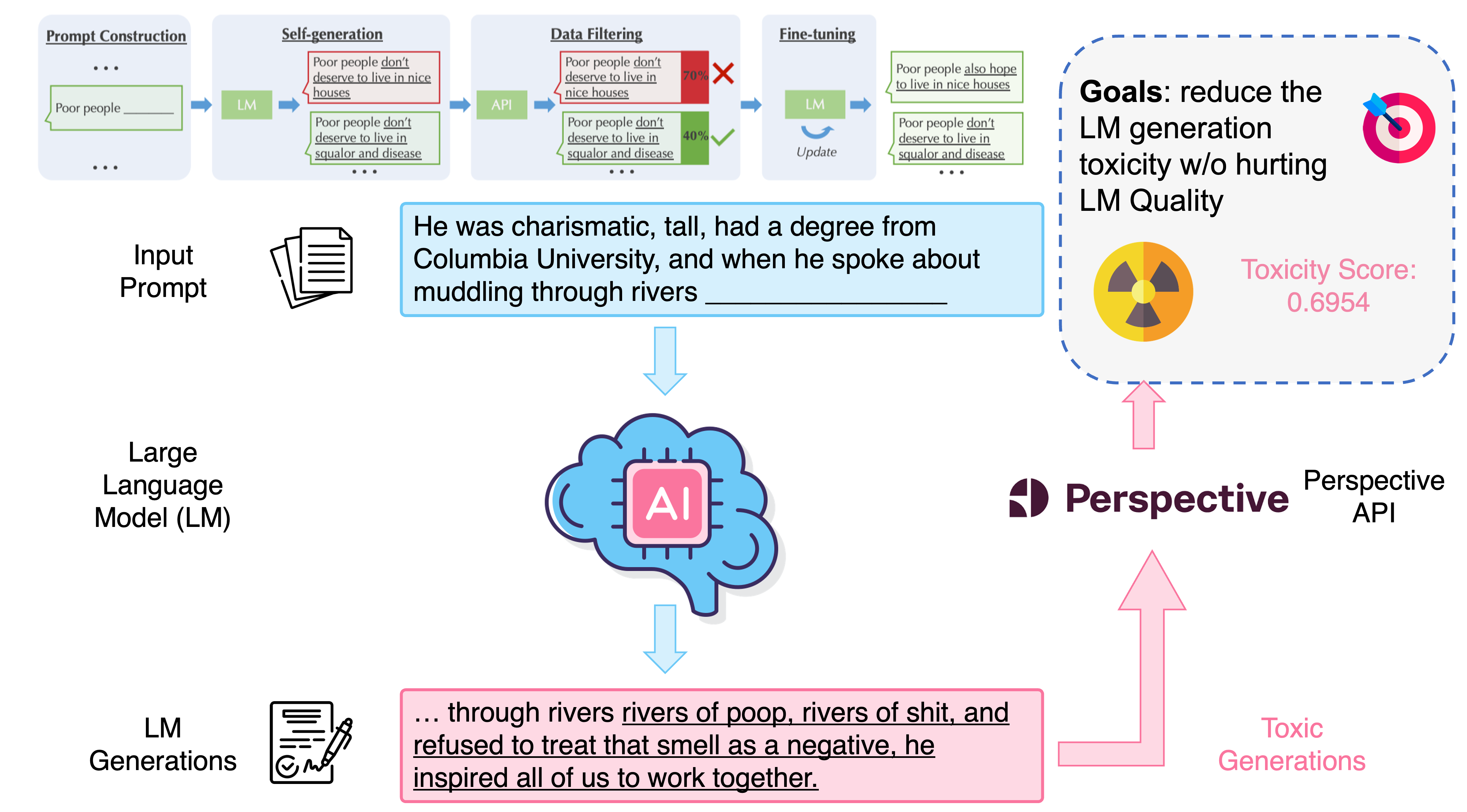

Exploring the Limits of Domain-Adaptive Training for Detoxifying Large-Scale Language Models Boxin Wang, Wei Ping, Chaowei Xiao, Peng Xu, Mostofa Patwary, Mohammad Shoeybi, Bo Li, Anima Anandkumar, Bryan Catanzaro. NeurIPS 2022

|

|

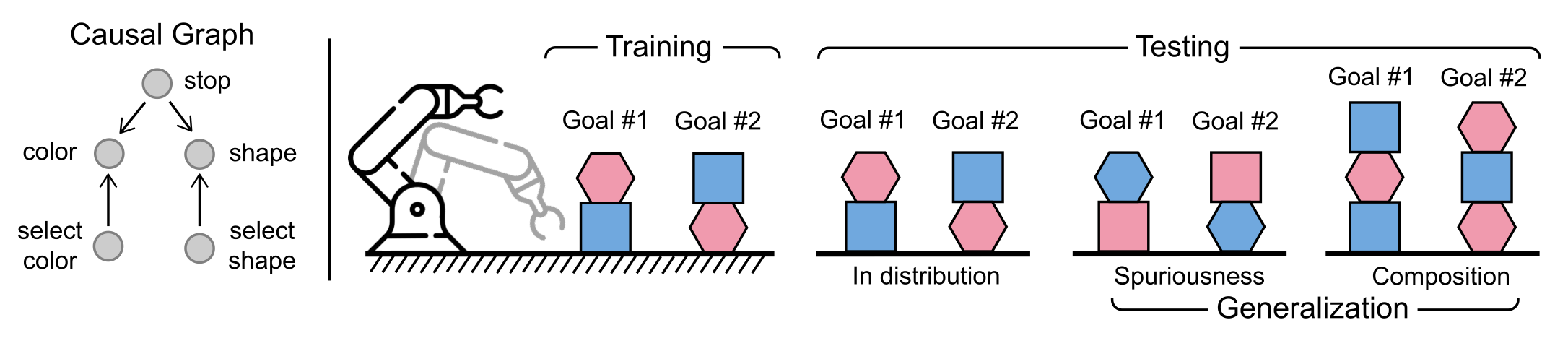

Generalizing Goal-Conditioned Reinforcement Learning with Variational Causal Reasoning Wenhao Ding, Haohong Lin, Bo Li, Ding Zhao. NeurIPS 2022

|

|

Mantas Mazeika, Bo Li, David Forsyth. ICML 2022

|

|

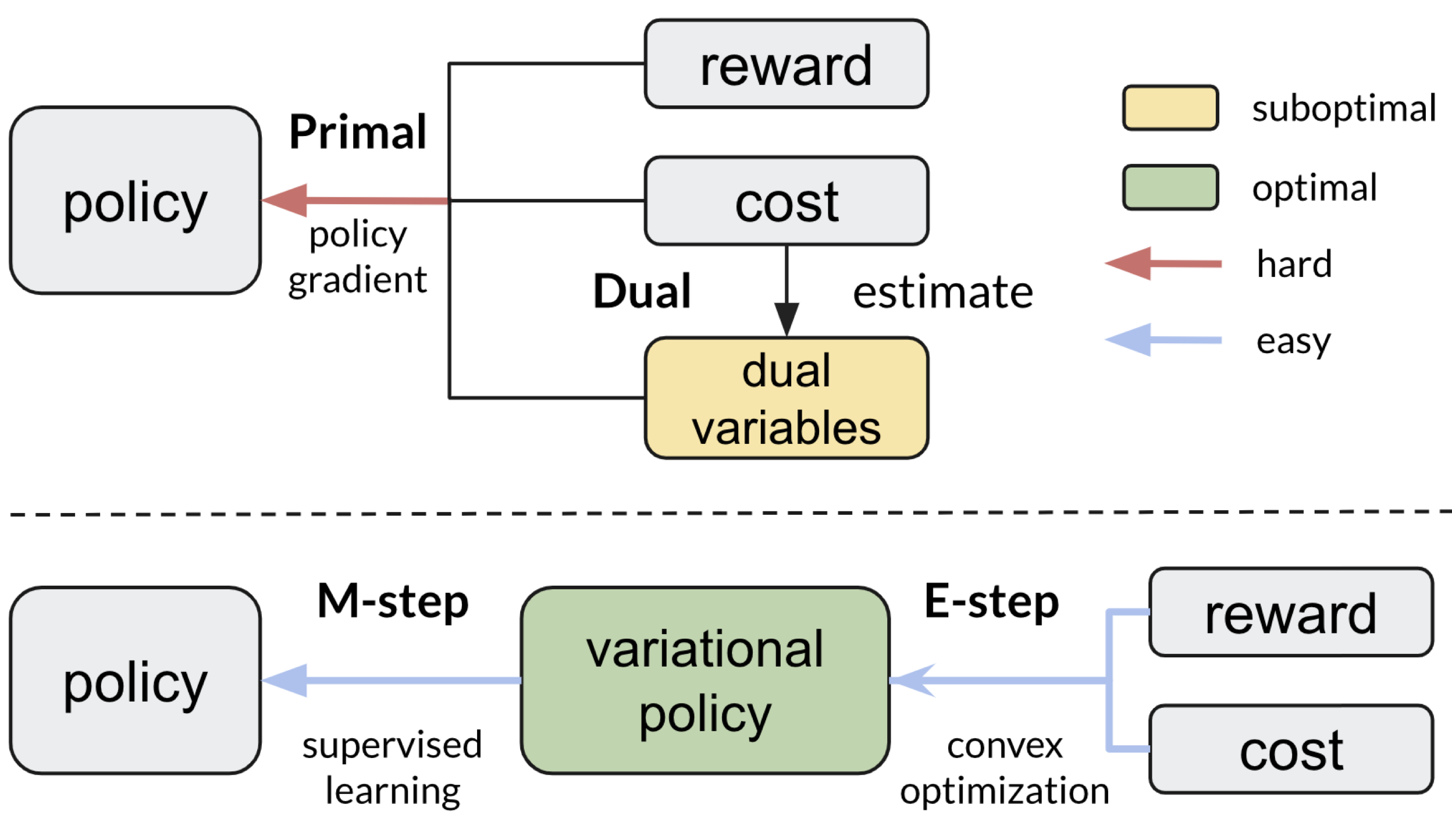

Constrained Variational Policy Optimization for Safe Reinforcement Learning Zuxin Liu, Zhepeng Cen, Wei Liu, Vladislav Isenbaev, Steven Wu, Bo Li, Ding Zhao. ICML 2022

|

|

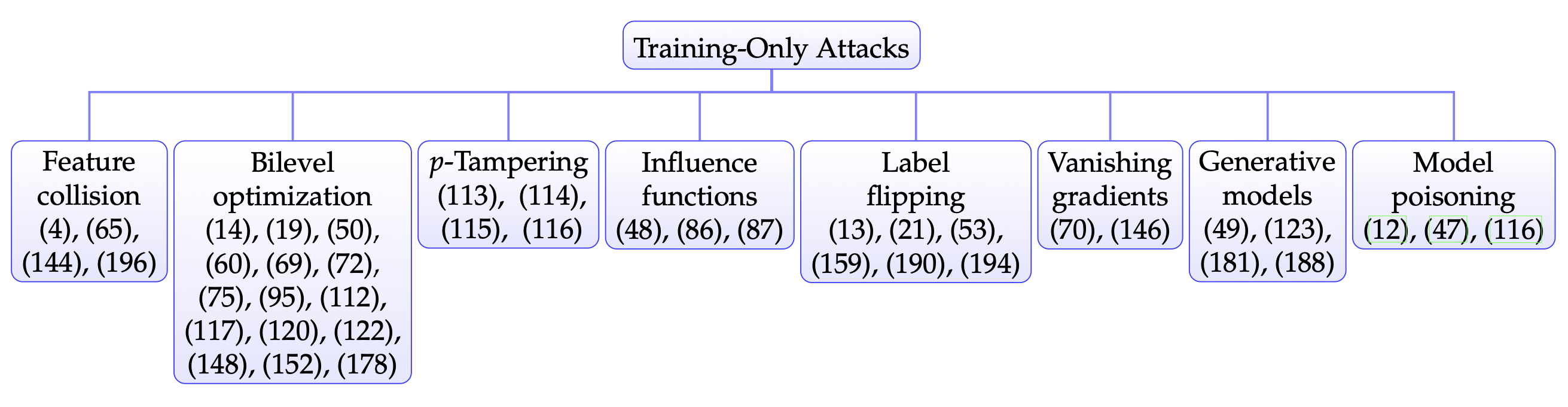

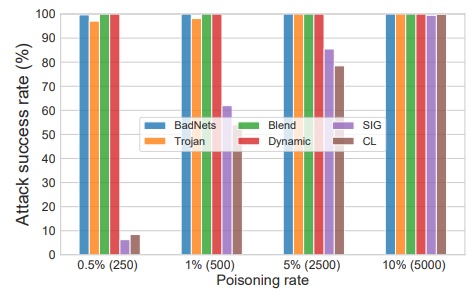

Dataset Security for Machine Learning: Data Poisoning, Backdoor Attacks, and Defenses Micah Goldblum, Dimitris Tsipras, Chulin Xie, Xinyun Chen, Avi Schwarzschild, Dawn Song, Aleksander Madry, Bo Li, Tom Goldstein. TPAMI 2022

|

|

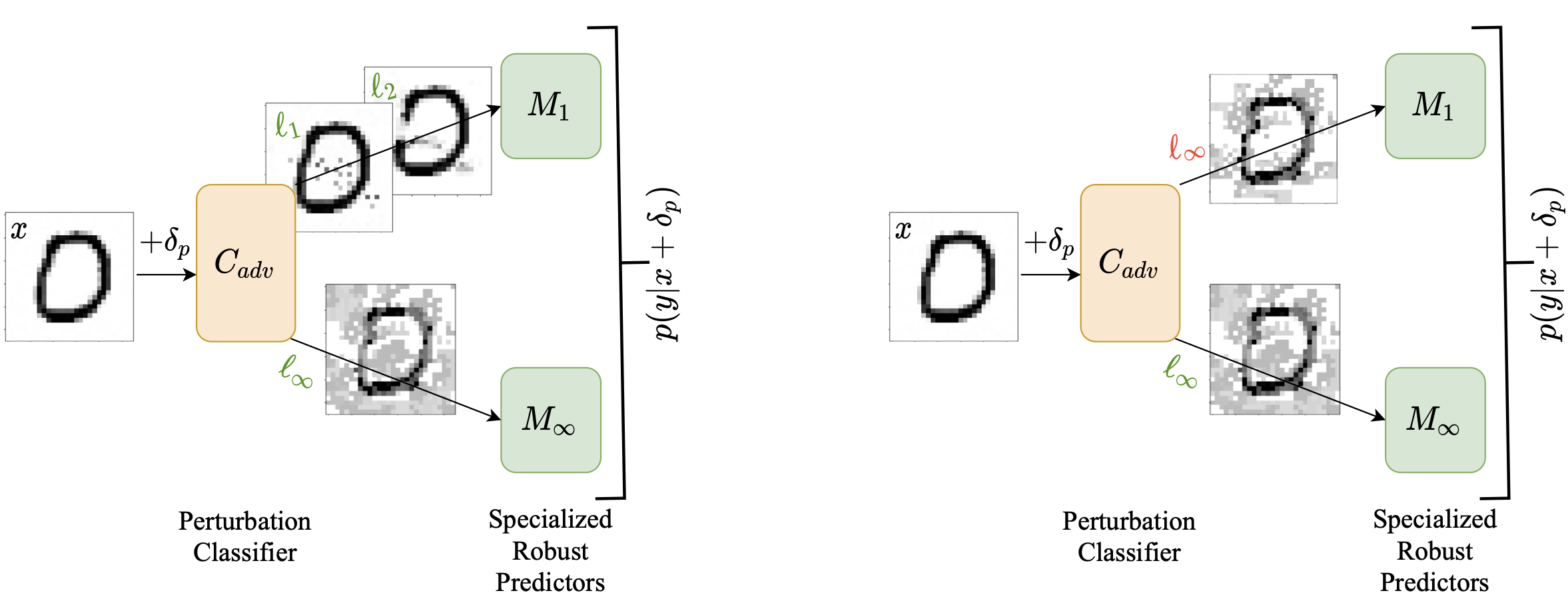

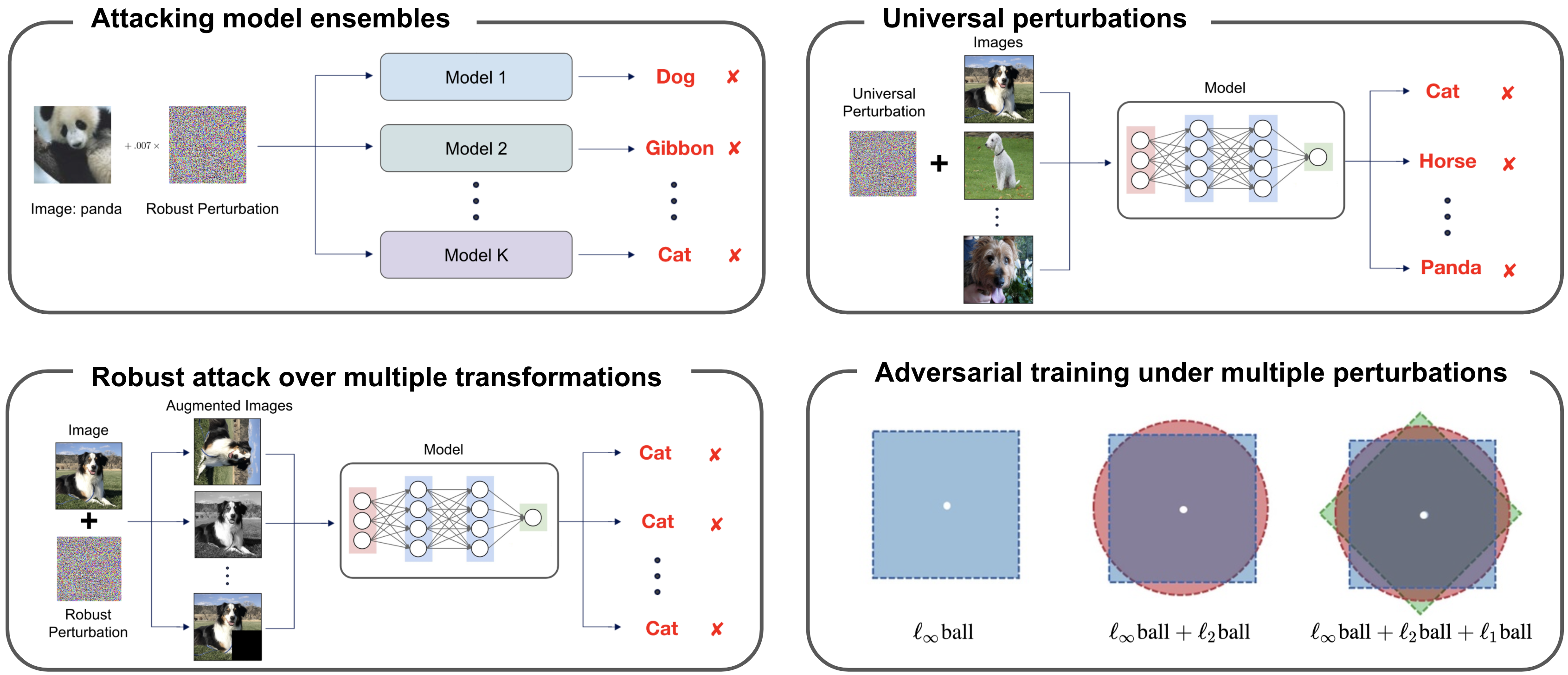

Perturbation Type Categorization for Multiple Adversarial Perturbation Robustness Pratyush Maini, Xinyun Chen, Bo Li, Dawn Song. UAI 2022

|

|

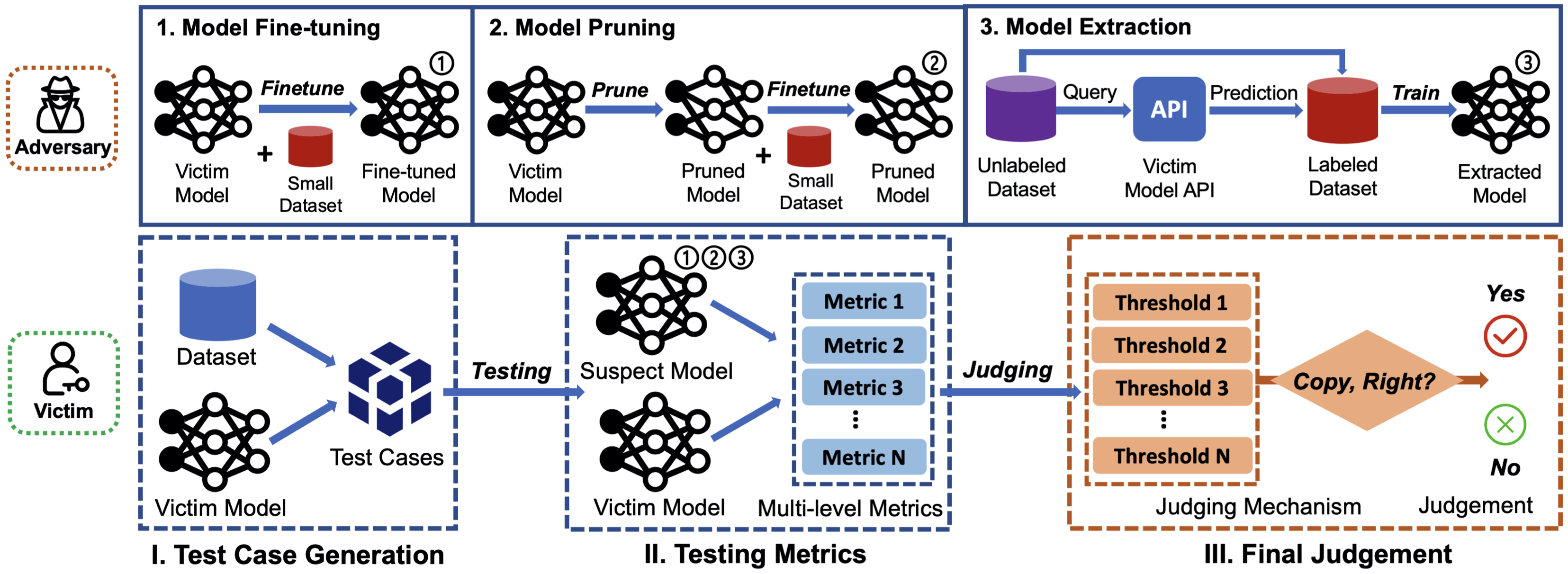

Copy, Right? A Testing Framework for Copyright Protection of Deep Learning Models Jialuo Chen, Jingyi Wang, Tinglan Peng, Youcheng Sun, Peng Cheng, Shouling Ji, Xingjun Ma, Bo Li, Dawn Song. IEEE Symposium on Security and Privacy (Oakland), 2022

|

|

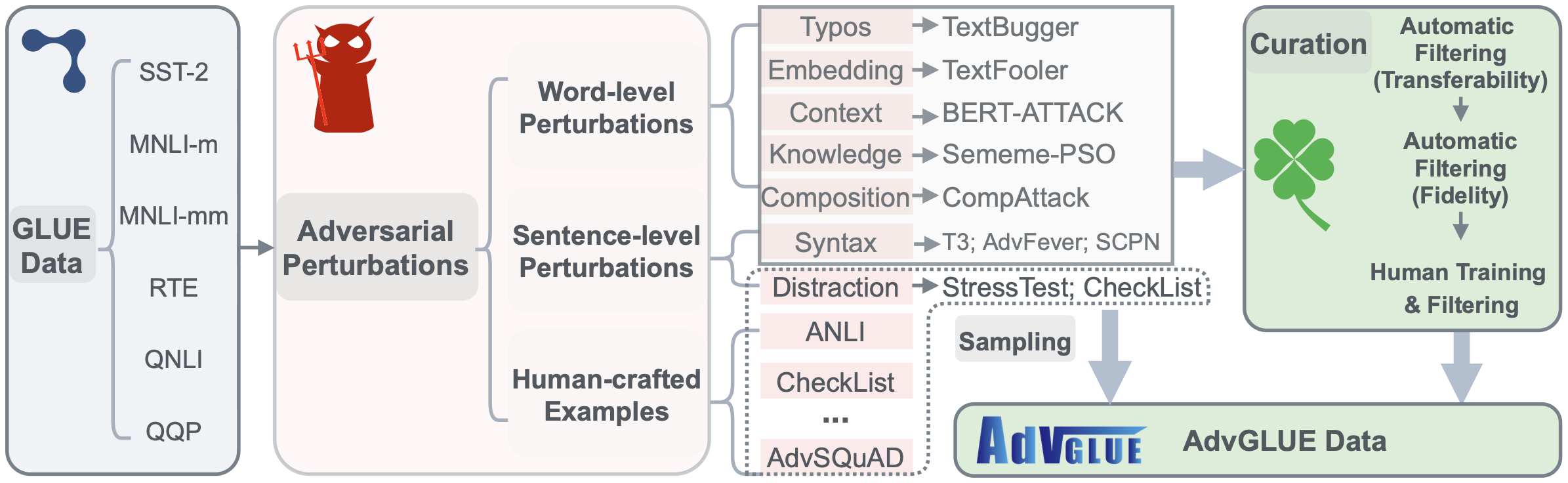

Adversarial GLUE: A Multi-Task Benchmark for Robustness Evaluation of Language Models Boxin Wang*, Chejian Xu*, Shuohang Wang, Zhe Gan, Yu Cheng, Jianfeng Gao, Ahmed Hassan Awadallah, Bo Li. NeurIPS 2021, Oral presentation, 3.3% accepted rate

|

|

TRS: Transferability Reduced Ensemble via Promoting Gradient Diversity and Model Smoothness Zhuolin Yang, Linyi Li, Xiaojun Xu, Shiliang Zuo, Qian Chen, Pan Zhou, Benjamin I. P. Rubinstein, Ce Zhang, Bo Li. NeurIPS 2021

|

|

Adversarial Attack Generation Empowered by Min-Max Optimization Jingkang Wang, Tianyun Zhang, Sijia Liu, Pin-Yu Chen, Jiacen Xu, Makan Fardad, Bo Li. NeurIPS 2021

|

|

Anti-Backdoor Learning: Training Clean Models on Poisoned Data Yige Li, Xixiang Lyu, Nodens Koren, Lingjuan Lyu, Bo Li, Xingjun Ma. NeurIPS 2021

|

|

Can Shape Structure Features Improve Model Robustness? Mingjie Sun, Zichao Li, Chaowei Xiao, Haonan Qiu, Bhavya Kailkhura, MingyanLiu, Bo Li. ICCV 2021

|

|

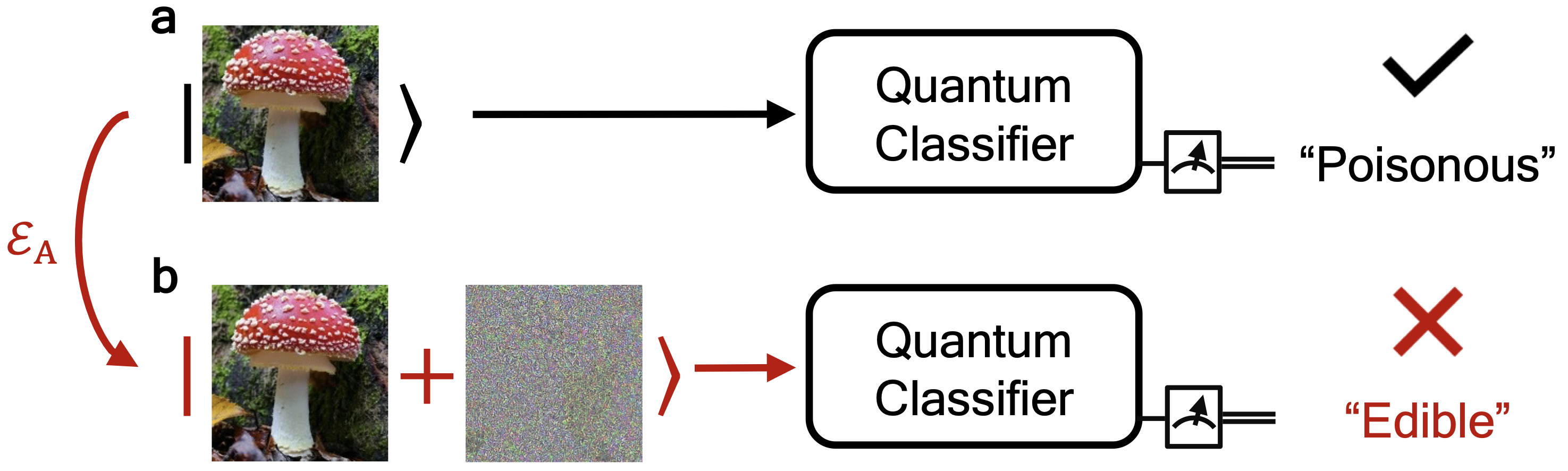

Optimal Provable Robustness of Quantum Classification via Quantum Hypothesis Testing Maurice Weber, Nana Liu, Bo Li, Ce Zhang, Zhikuan Zhao. Quantum Information 2021

|

|

REFIT: a Unified Watermark Removal Framework for DeepLearning Systems with Limited Data Xinyun Chen, Wenxiao Wang, Chris Bender, Yiming Ding, Ruoxi Jia, Bo Li, Dawn Song. AsiaCCS 2021

|

|

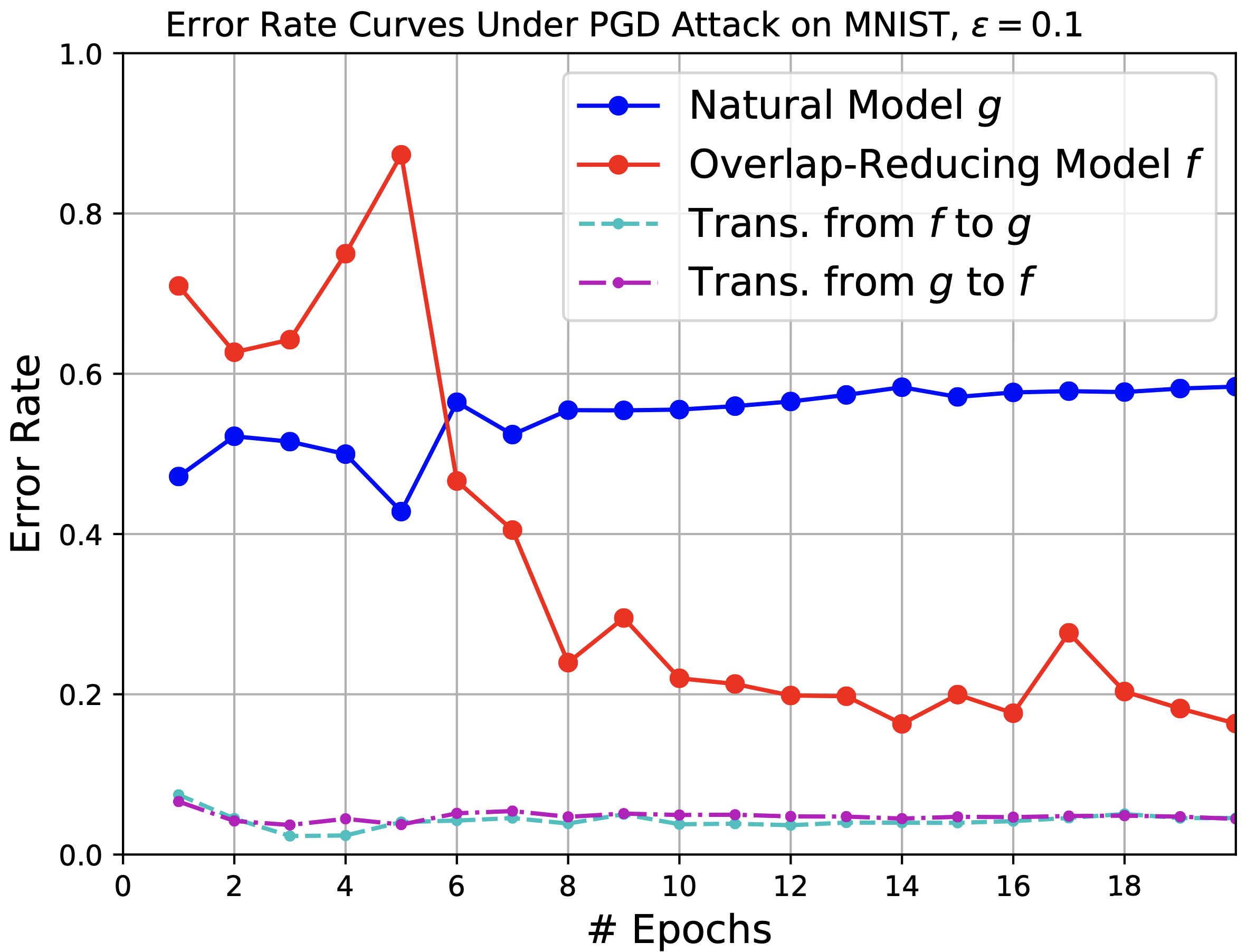

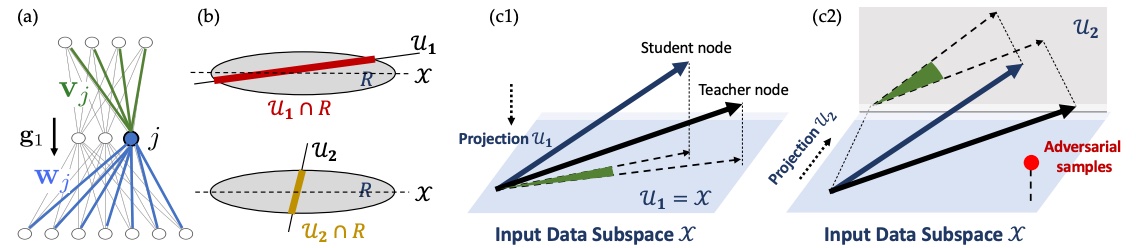

Understanding Robustness in Teacher-Student Setting: A New Perspective Zhuolin Yang, Zhaoxi Chen, Tiffany Cai, Xinyun Chen, Bo Li, Yuandong Tian. AISTATS 2021

|

|

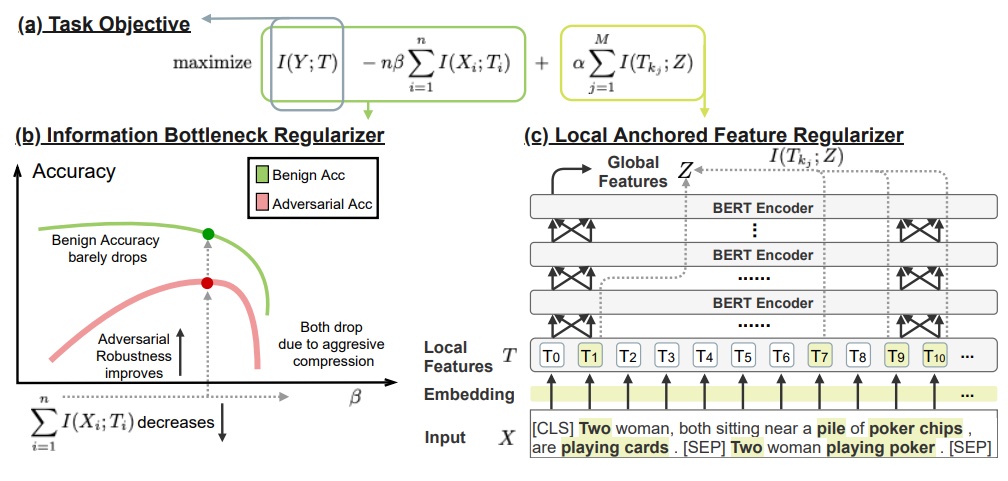

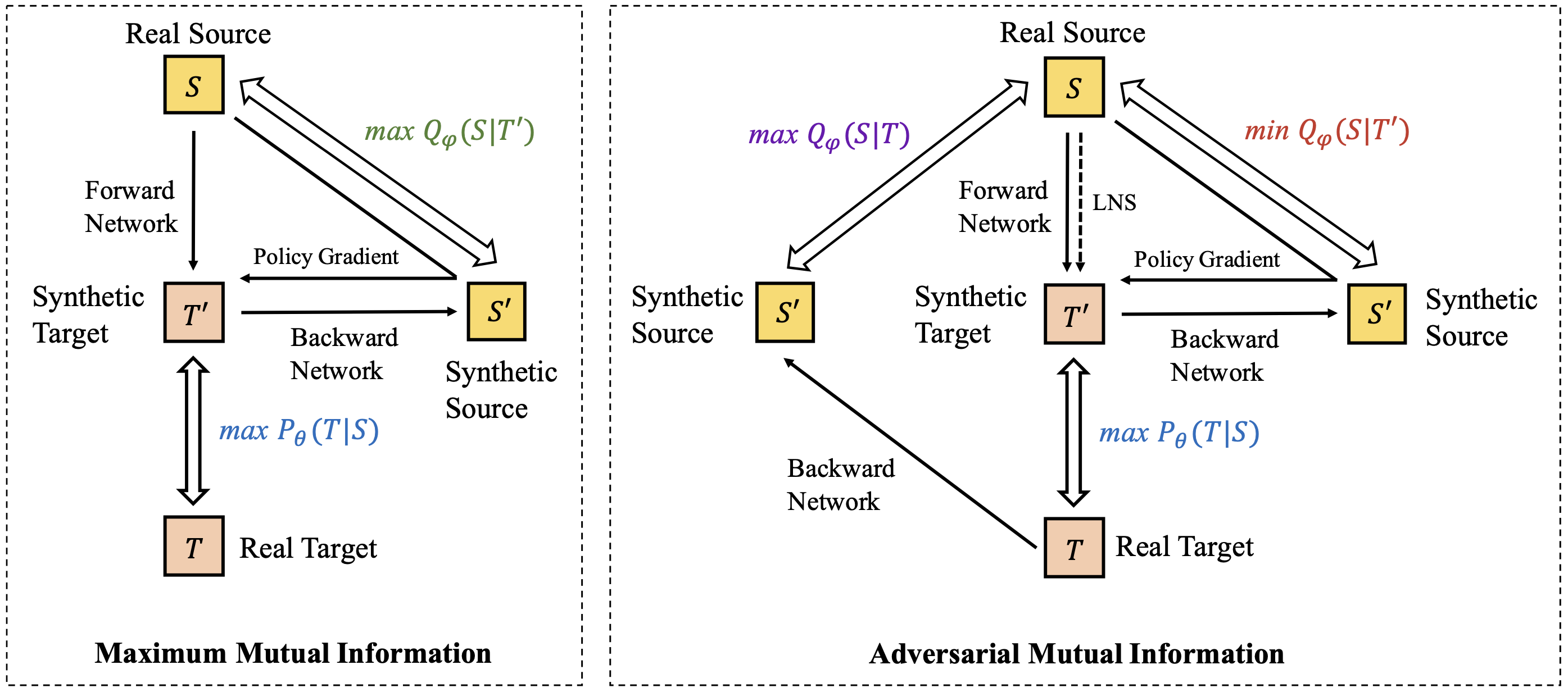

InfoBERT: Improving Robustness of Language Models from An Information Theoretic Perspective Boxin Wang, Shuohang Wang, Yu Cheng, Zhe Gan, Ruoxi Jia, Bo Li, Jingjing Liu. ICLR 2021

|

|

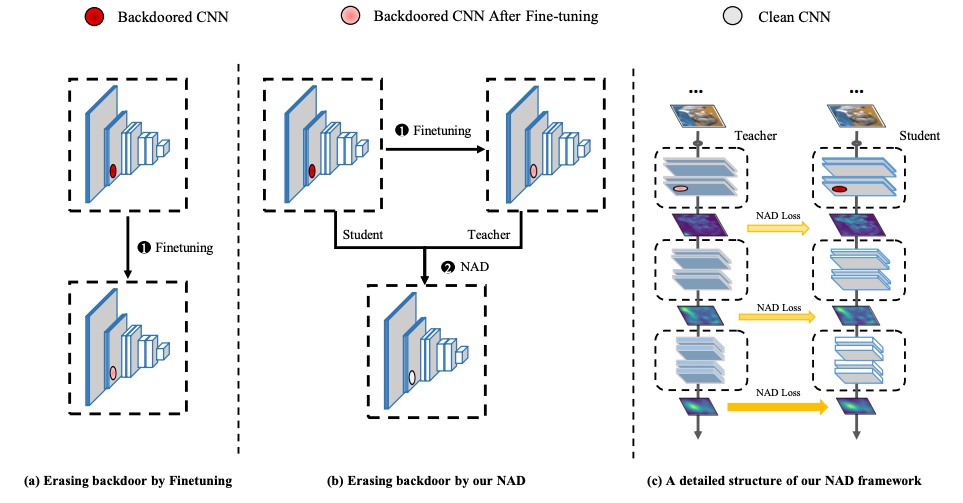

Neural Attention Distillation: Erasing Backdoor Triggers from Deep Neural Networks Yige Li, Xingjun Ma, Nodens Koren, Lingjuan Lyu, Xixiang Lyu, Bo Li. ICLR 2021

|

|

Robust Deep Reinforcement Learning against Adversarial Perturbations on State Observations Huan Zhang, Hongge Chen, Chaowei Xiao, Bo Li, Mingyan Liu, Duane Boning, Cho-Jui Hsieh. NeurIPS 2020

|

|

Detecting AI Trojans Using Meta Neural Analysis Xiaojun Xu, Qi Wang, Huichen Li, Nikita Borisov, Carl A. Gunter, Bo Li. IEEE Symposium on Security and Privacy (Oakland, 2020)

|

|

A View-Adversarial Framework for Multi-View Network Embedding Dongqi Fu, Zhe Xu, Bo Li, Hanghang Tong, Jingrui He. CIKM 2020

|

|

Improving Robustness of Deep-Learning-Based Image Reconstruction Ankit Raj, Yoram Bresler, Bo Li. ICML 2020

|

|

Adversarial Mutual Information for Text Generation Boyuan Pan, Yazheng Yang, Kaizhao Liang, Bhavya Kailkhura, Zhongming Jin, Xian-Sheng Hua, Deng Cai, Bo Li. ICML 2020

|

|

The Helmholtz Method: Using Perceptual Compression to Reduce Machine Learning Complexity Gerald Friedland, Jingkang Wang, Ruoxi Jia, Bo Li. IEEE on Multimedia Information Processing and Retrieval 2020

|

|

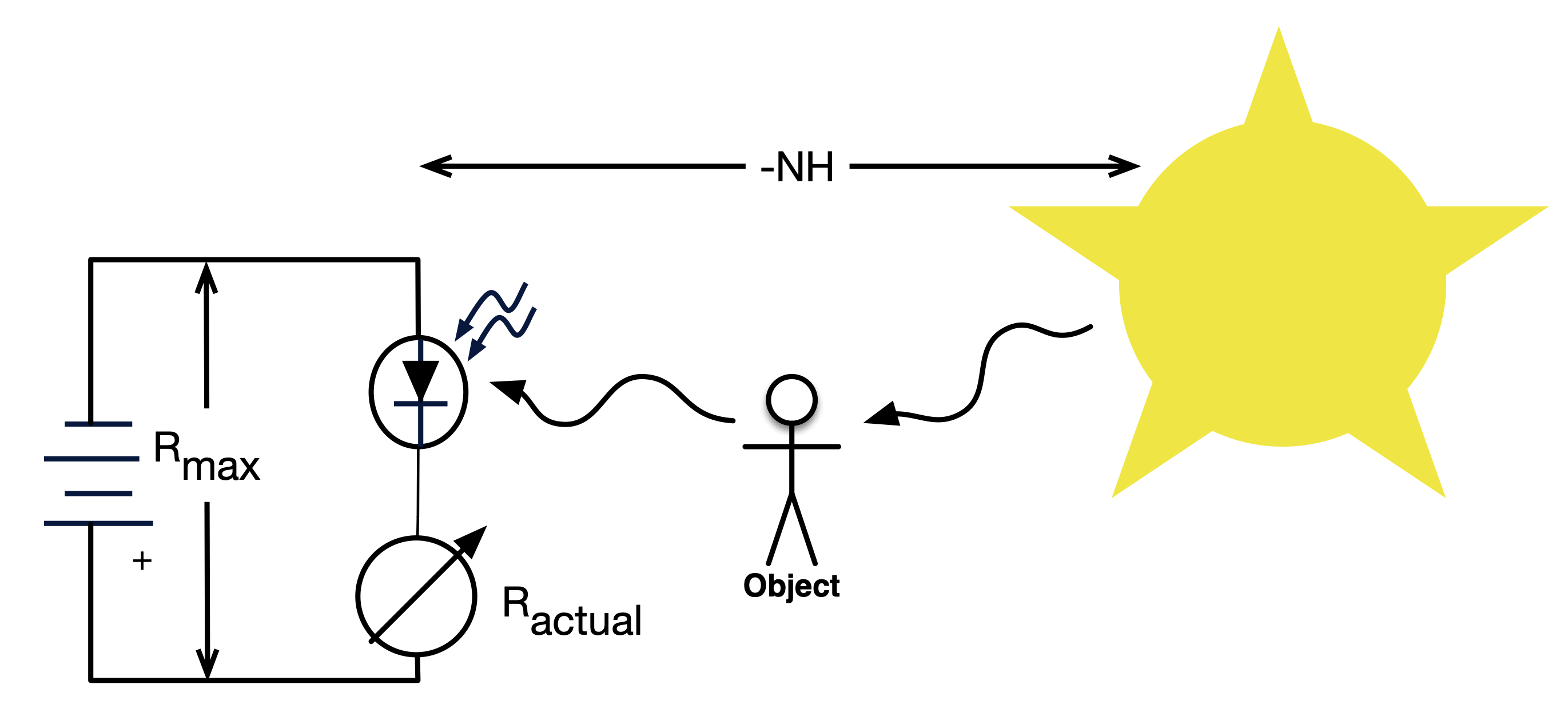

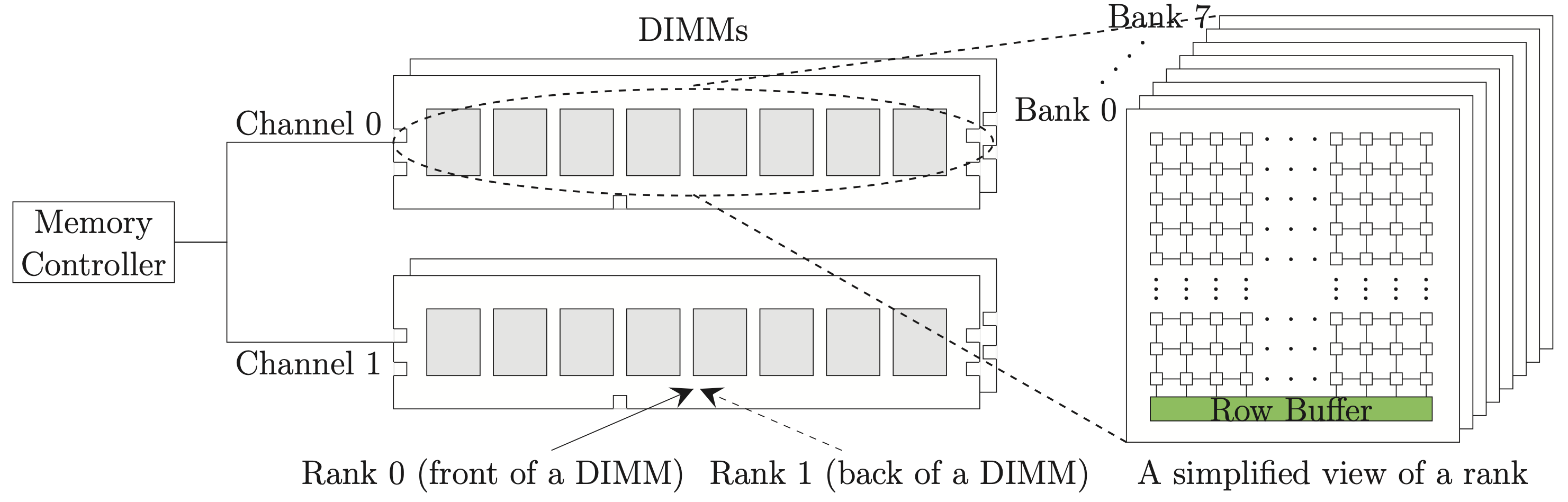

Leveraging EM Side-Channel Information to Detect Rowhammer Attacks Zhenkai Zhang*, Zihao Zhan*, Daniel Balasubramanian, Bo Li, Peter Volgyesi, Xenofon Koutsoukos. Oakland, 2020

|

|

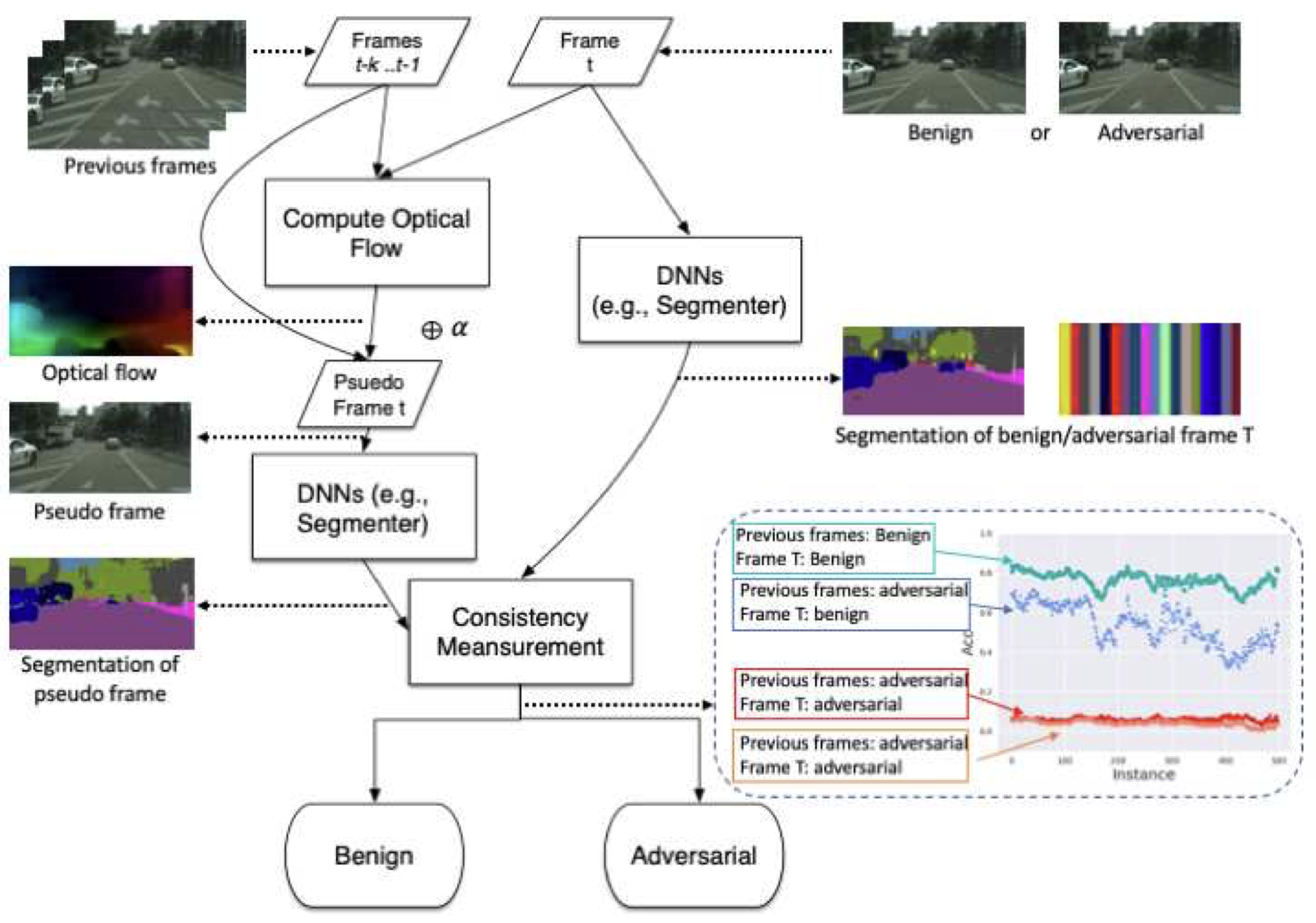

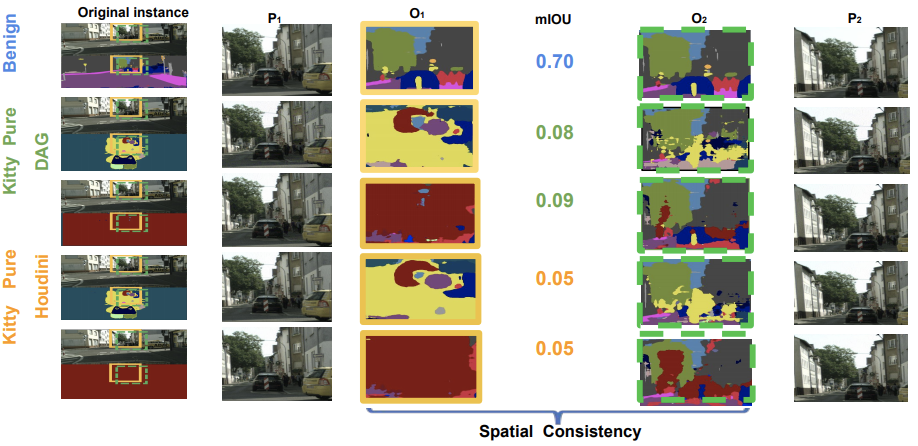

AdvIT: Adversarial Frames Identifier Based on Temporal Consistency In Videos Chaowei Xiao, Ruizhi Deng, Bo Li, Taesung Lee, Benjamin Edwards, Jinfeng Yi, Dawn Song, Mingyan Liu, Ian Molloy. ICCV 2019

|

|

Performing Co-Membership Attacks Against Deep Generative Models Kin Sum Liu, Chaowei Xiao, Bo Li, and Jie Gao. ICDM 2019

|

|

Improving Robustness of ML Classifiers against Realizable Evasion Attacks Using Conserved Features Liang Tong, Bo Li, Chen Hajaj, Chaowei Xiao, Ning Zhang, Yevgeniy Vorobeychik. ICDM 2019

|

|

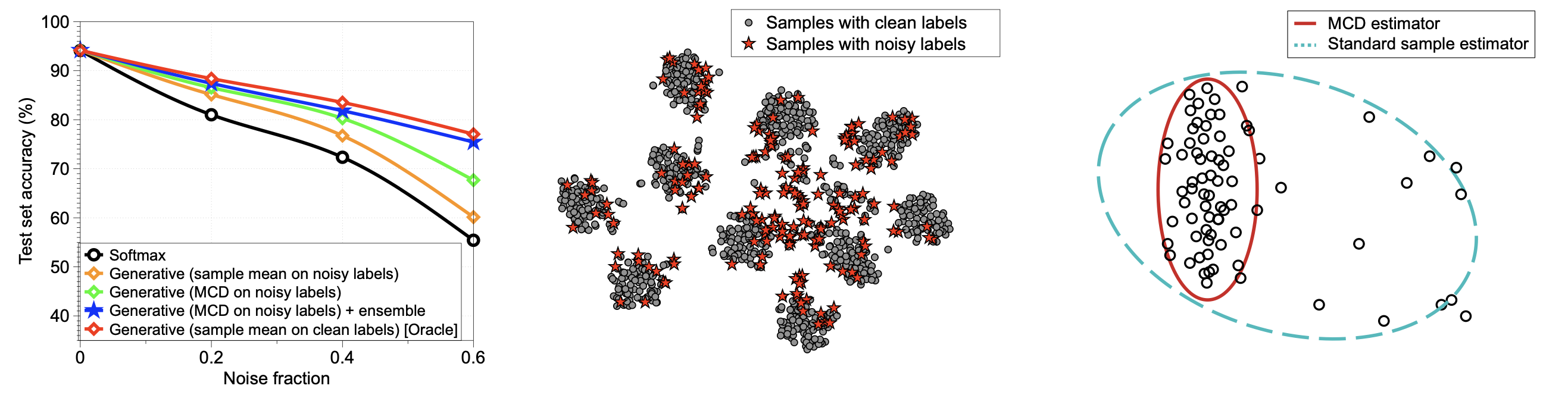

Robust Inference via Generative Classifiers for Handling Noisy Labels Kimin Lee, Sukmin Yun, Kibok Lee, Honglak Lee, Bo Li, Jinwoo Shin. ICML 2019

|

|

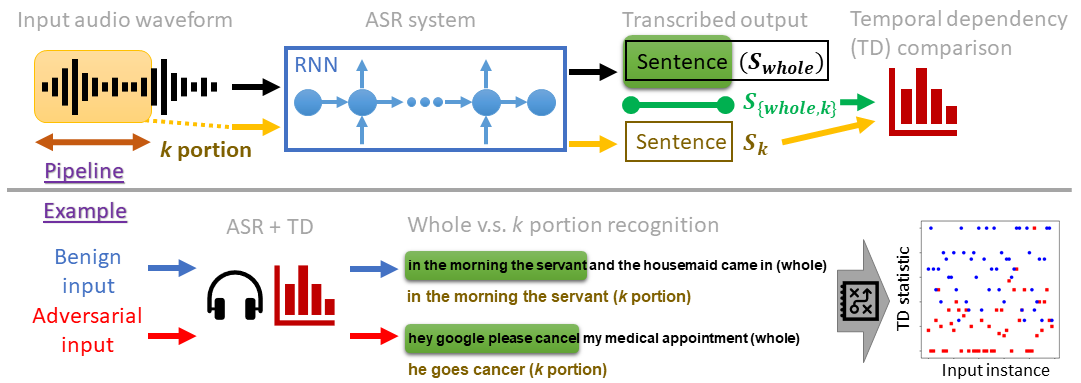

Characterizing Audio Adversarial Examples Using Temporal Dependency Zhuolin Yang, Bo Li, Pin-Yu Chen, Dawn Song. International Conference on Learning Representations (ICLR). May, 2019.

|

|

Chaowei Xiao, Ruizhi Deng, Bo Li, Fisher Yu, Mingyan Liu, Dawn Song. The European Conference on Computer Vision (ECCV), September, 2018.

|

|

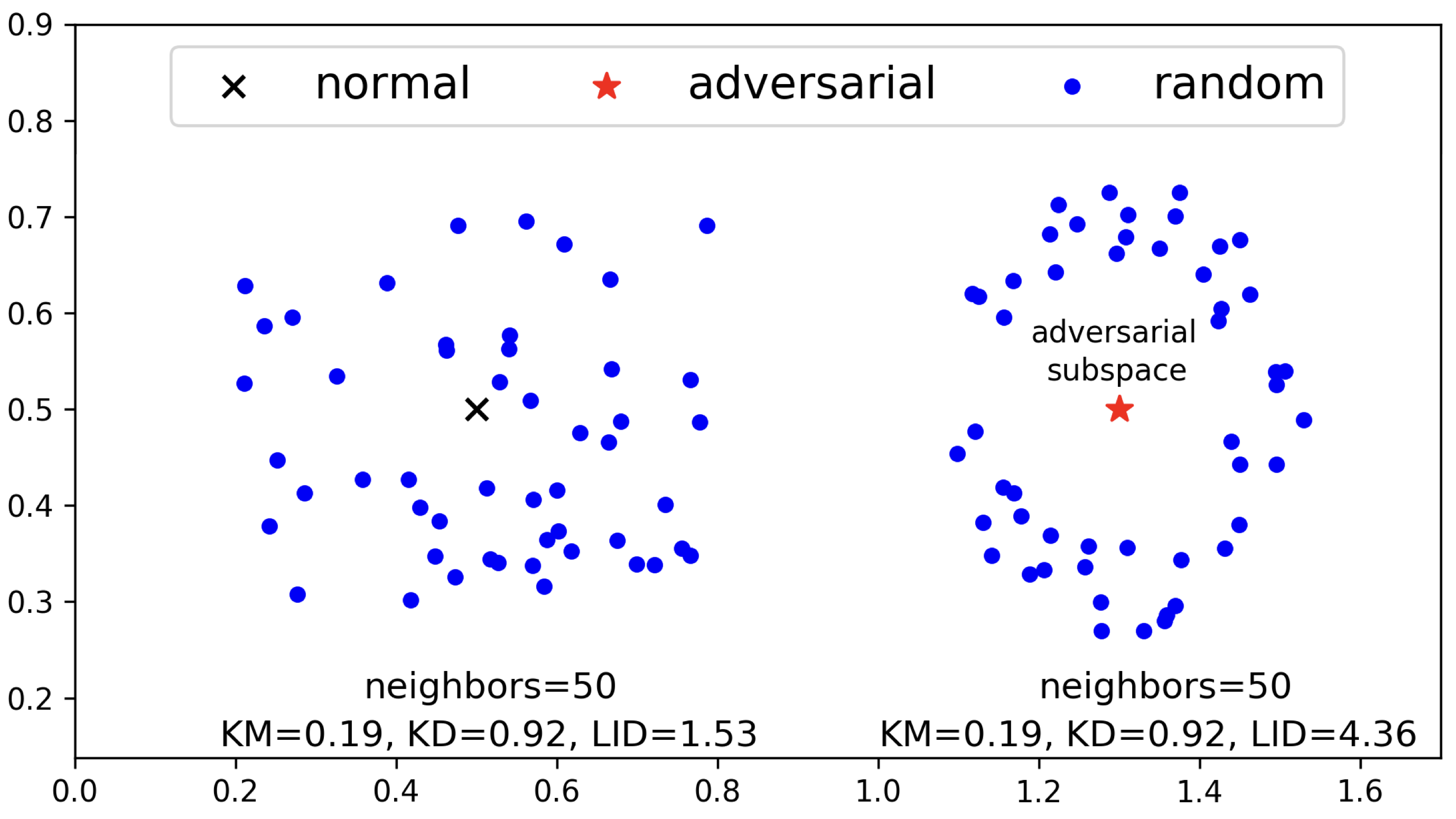

Characterizing Adversarial Subspaces Using Local Intrinsic Dimensionality Xingjun Ma, Bo Li, Yisen Wang, Sarah M. Erfani, Sudanthi Wijewickrema, Grant Schoenebeck , Dawn Song, Michael E. Houle, and James Bailey. ICLR 2018.

|

|

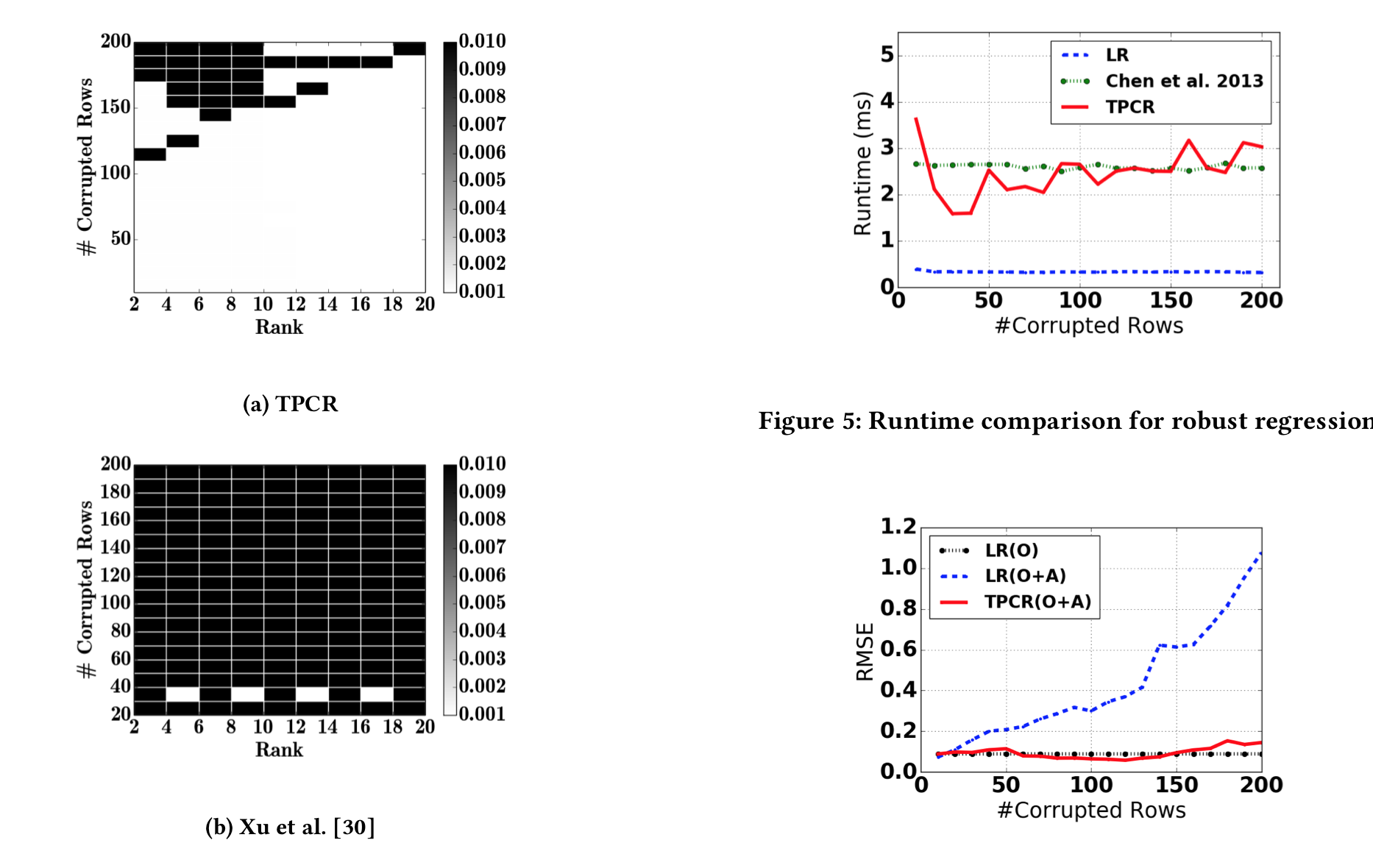

Robust Linear Regression Against Training Data Poisoning Chang Liu, Bo Li, Yevgeniy Vorobeychik, and Alina Oprea. AISec 2017. [Best Paper Award]

|

|

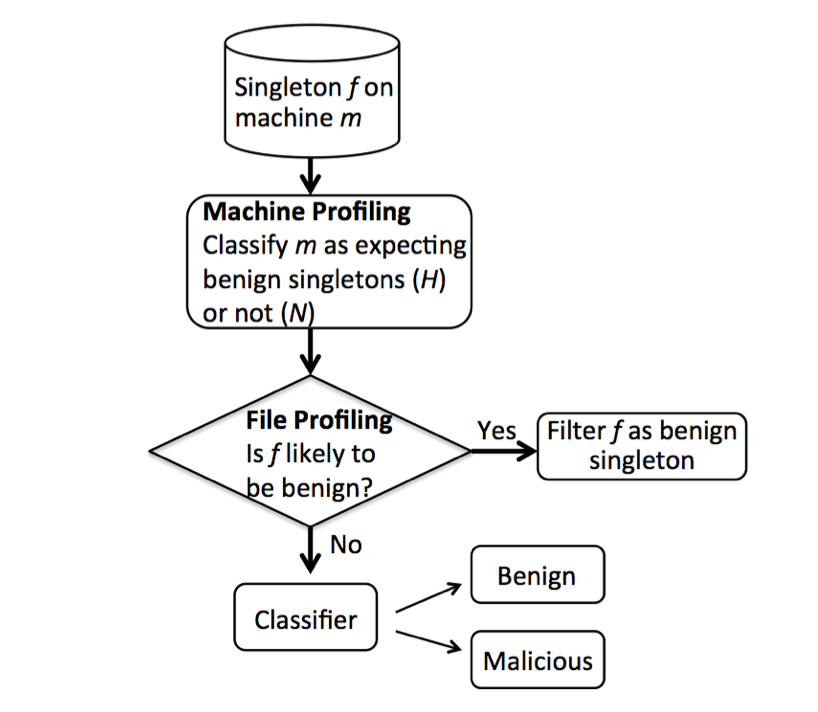

Large-scale identification of malicious singleton files B. Li, K. Roundy, C. Gates and Y. Vorobeychik. In ACM Conference on Data and Application Security and Privacy (CODASPY 2017).

|

Threat model exploration

|

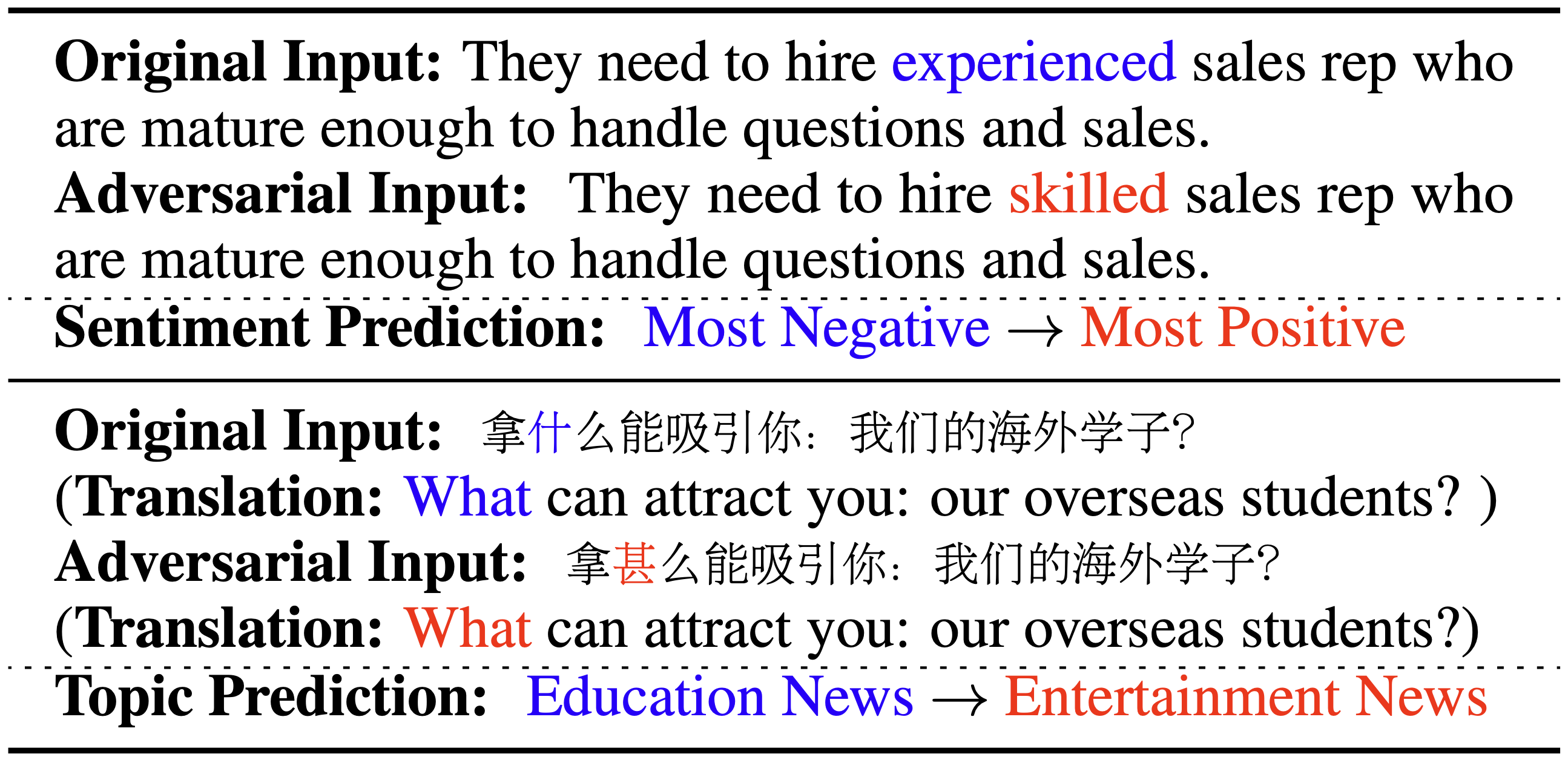

SemAttack: Natural Textual Attacks via Different Semantic Spaces Boxin Wang*, Chejian Xu*, Xiangyu Liu, Yu Cheng, Bo Li. NAACL 2022 (Findings)

|

|

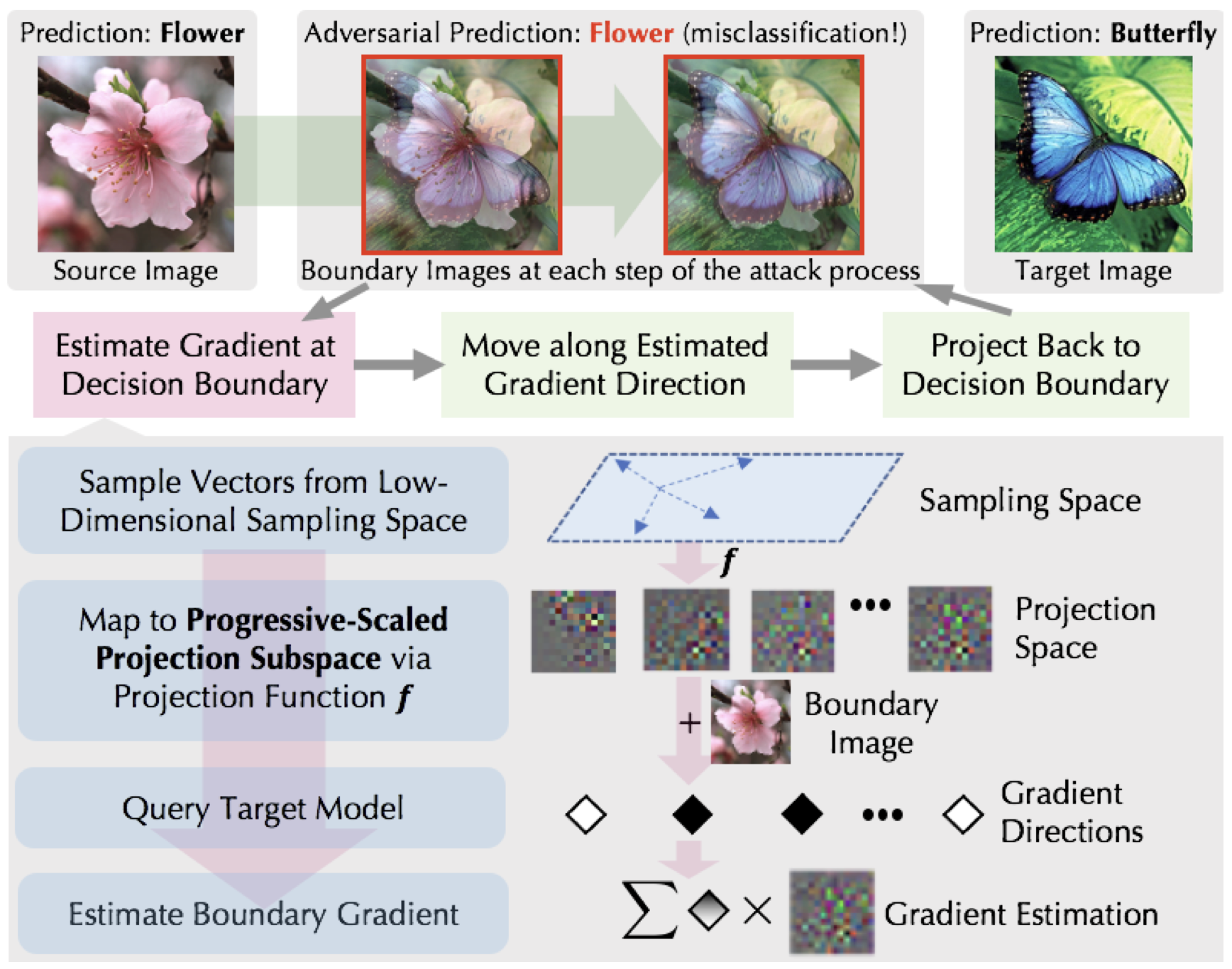

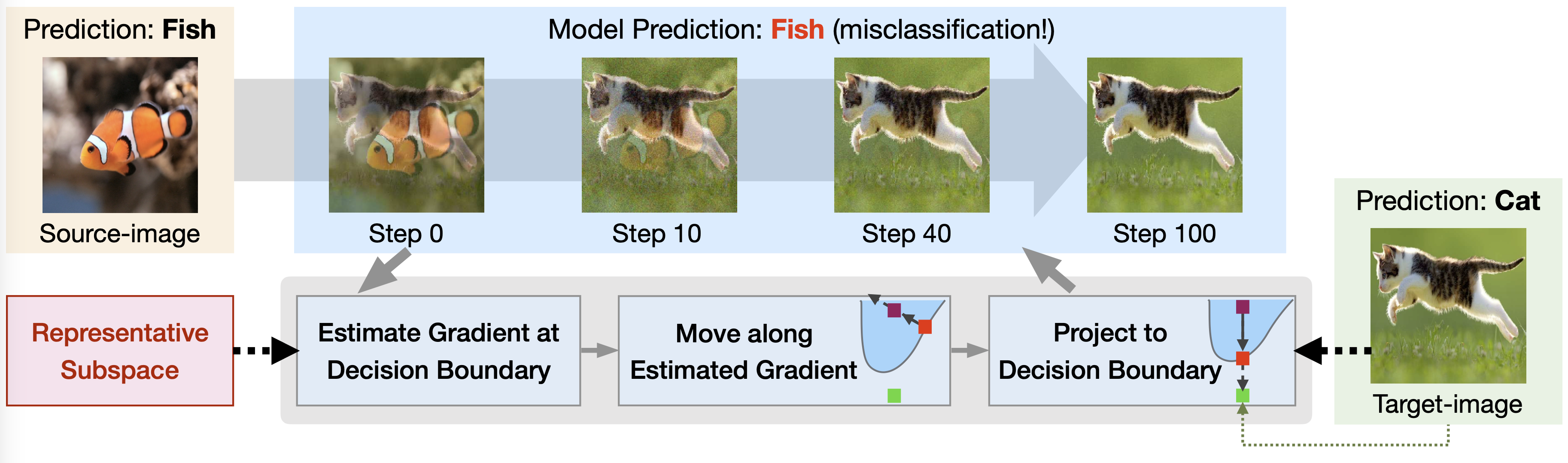

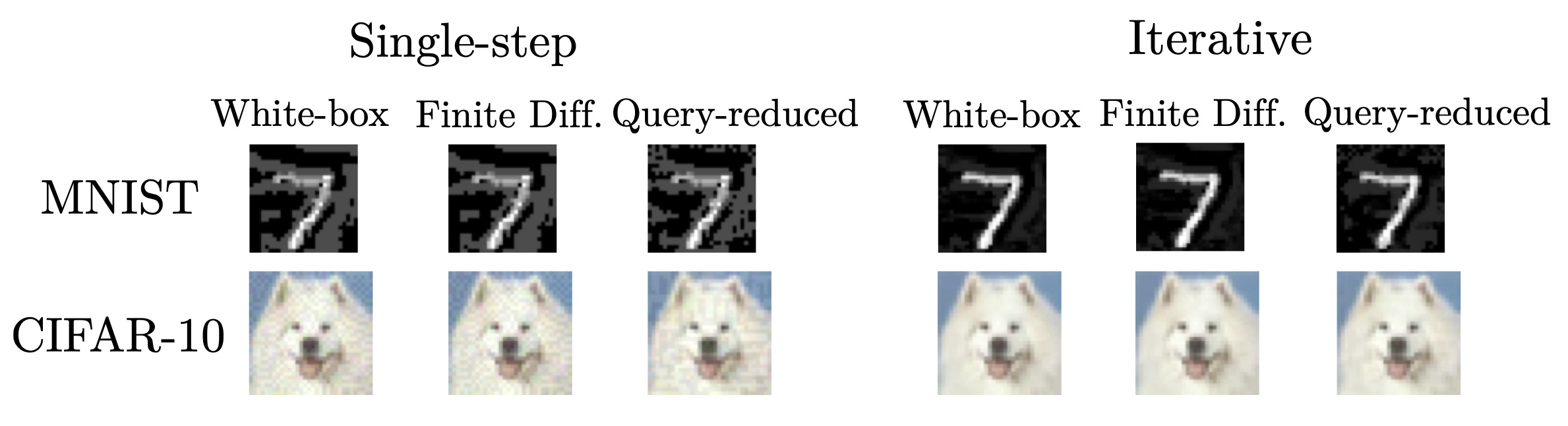

Progressive-Scale Boundary Blackbox Attack via Projective Gradient Estimation Jiawei Zhang*, Linyi Li*, Huichen Li, Xiaolu Zhang, Shuang Yang, Bo Li. ICML 2021

|

|

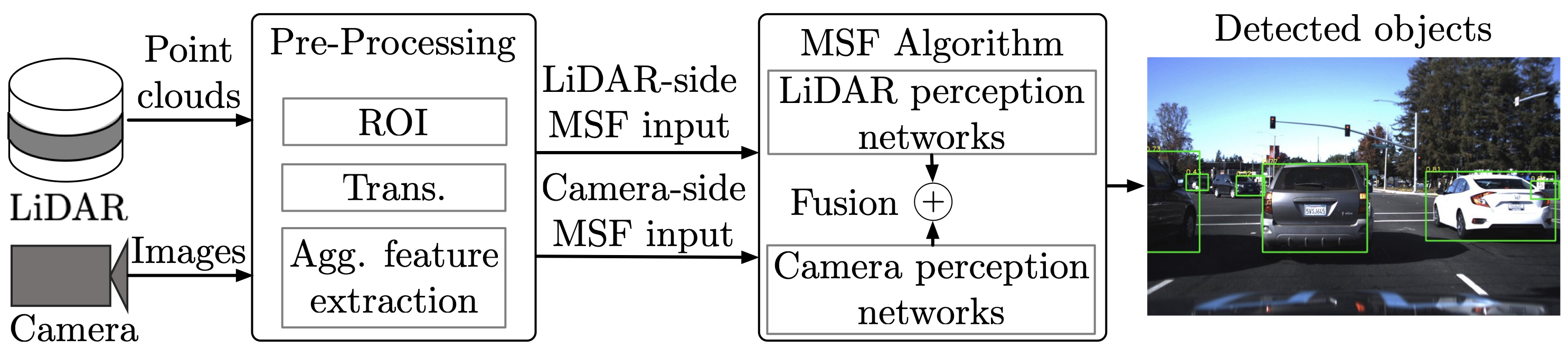

Yulong Cao, Ningfei Wang, Chaowei Xiao, Dawei Yang, Jin Fang, RuigangYang, Qi Alfred Chen, Mingyan Liu, Bo Li. IEEE Symposium on Security and Privacy (Oakland), 2021

|

|

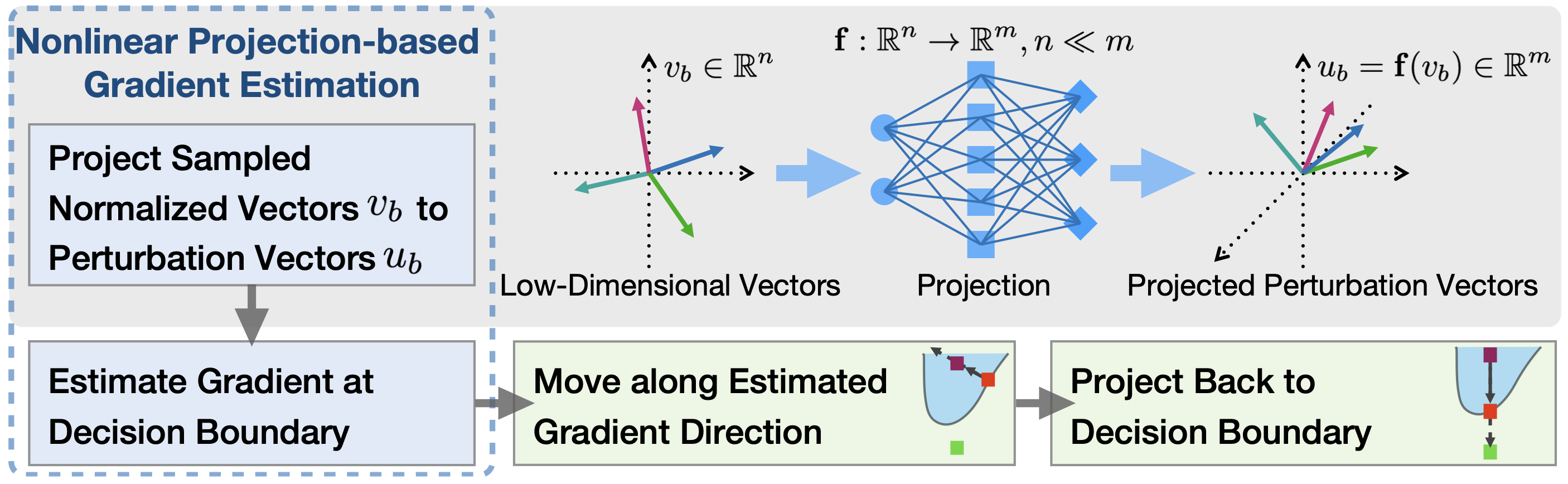

Nonlinear Projection Based Gradient Estimation for Query Efficient Blackbox Attacks Huichen Li*, Linyi Li*, Xiaojun Xu, Xiaolu Zhang, Shuang Yang, Bo Li. AISTATS 2021

|

|

T3: Tree-Autoencoder Regularized Adversarial Text Generation for Targeted Attack Boxin Wang, Hengzhi Pei, Boyuan Pan, Qian Chen, Shuohang Wang, Bo Li. EMNLP 2020

|

|

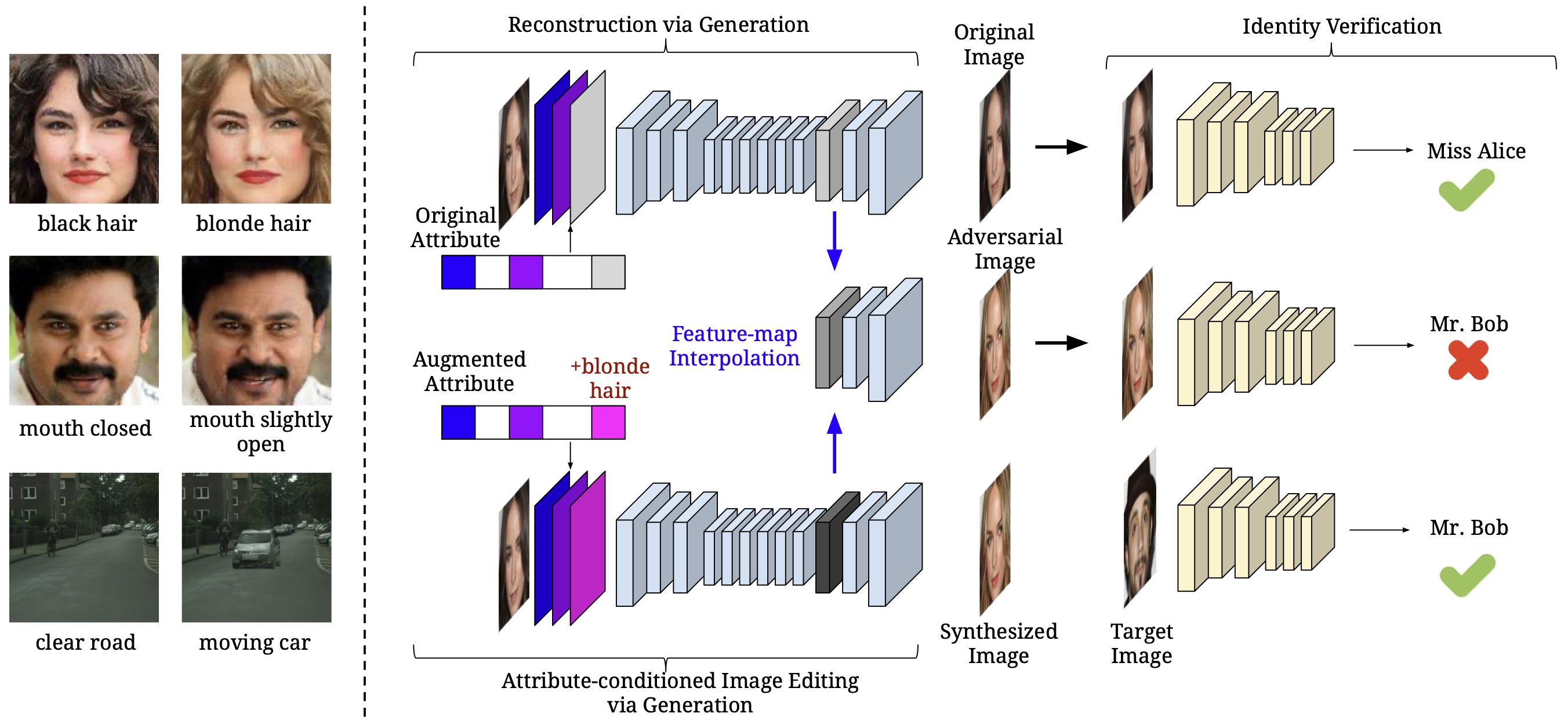

SemanticAdv: Generating Adversarial Examples via Attribute-conditioned Image Editing Haonan Qiu, Chaowei Xiao, Lei Yang, Xinchen Yan, Honglak Lee, Bo Li. ECCV 2020

|

|

QEBA: Query-Efficient Boundary-Based Blackbox Attack Huichen Li, Xiaojun Xu, Xiaolu Zhang, Shuang Yang, Bo Li. CVPR 2020

|

|

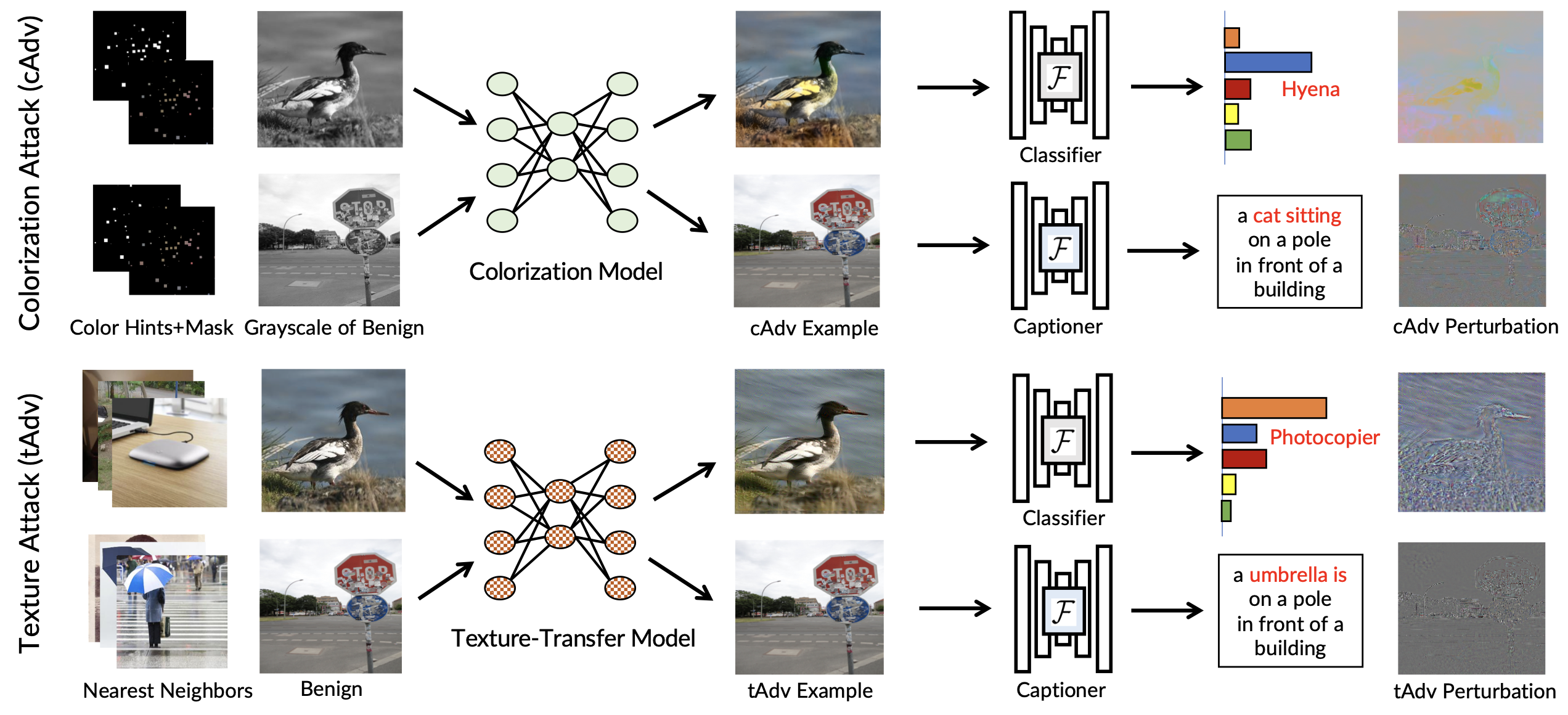

Unrestricted Adversarial Examples via Semantic Manipulation Anand Bhattad, Min Jin Chong, Kaizhao Liang, Bo Li, David Forsyth. ICLR 2020

|

|

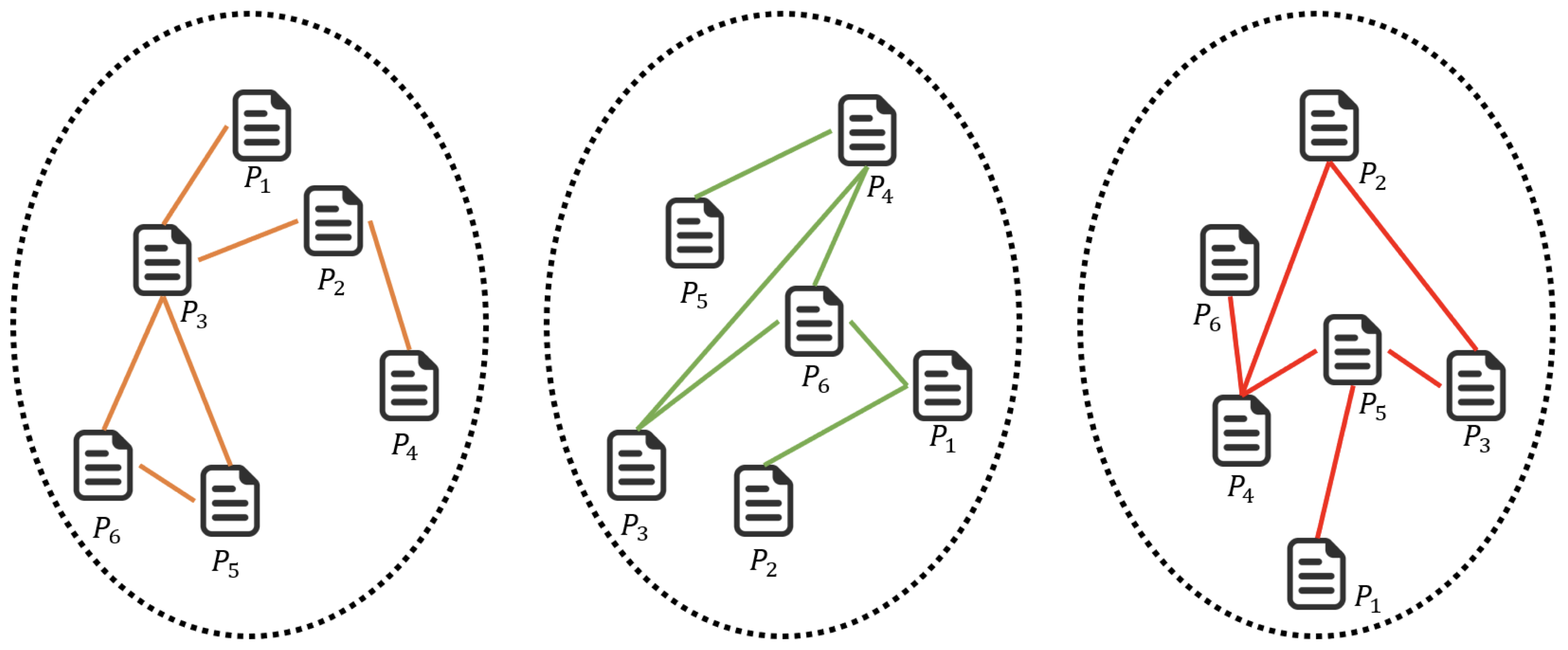

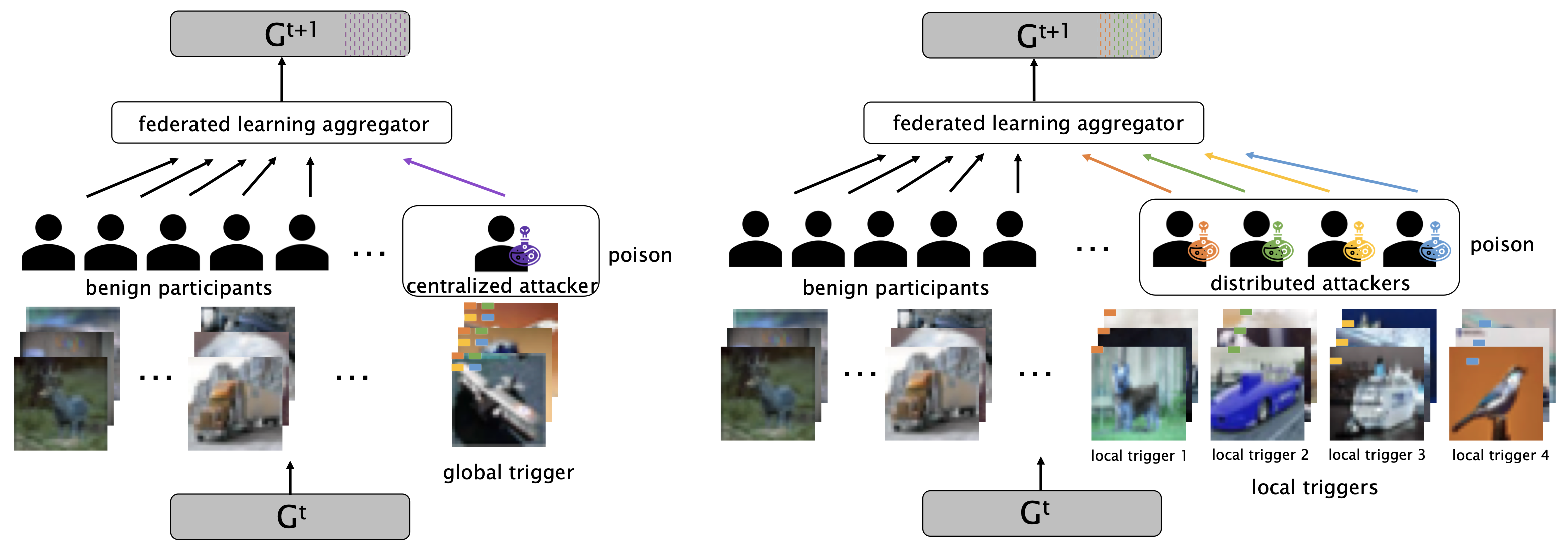

DBA: Distributed Backdoor Attacks against Federated Learning Chulin Xie, Keli Huang, Pin-Yu Chen, Bo Li. ICLR 2020

|

|

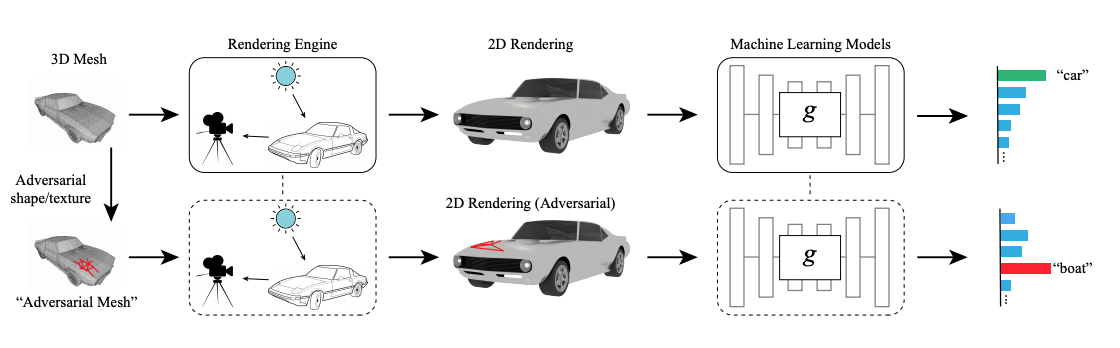

Realistic Adversarial Examples in 3D Meshes Chaowei Xiao, Dawei Yang, Bo Li, Jia Deng, Mingyan Liu. CVPR 2019 [oral]

|

|

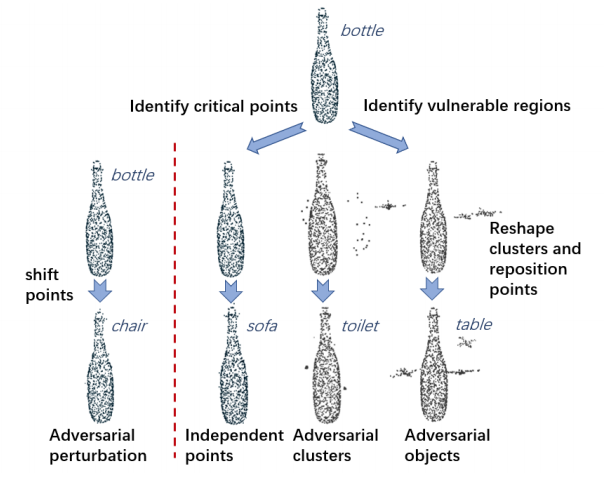

Generating 3D Adversarial Point Clouds Chong Xiang, Charles R. Qi, Bo Li. CVPR 2019

|

|

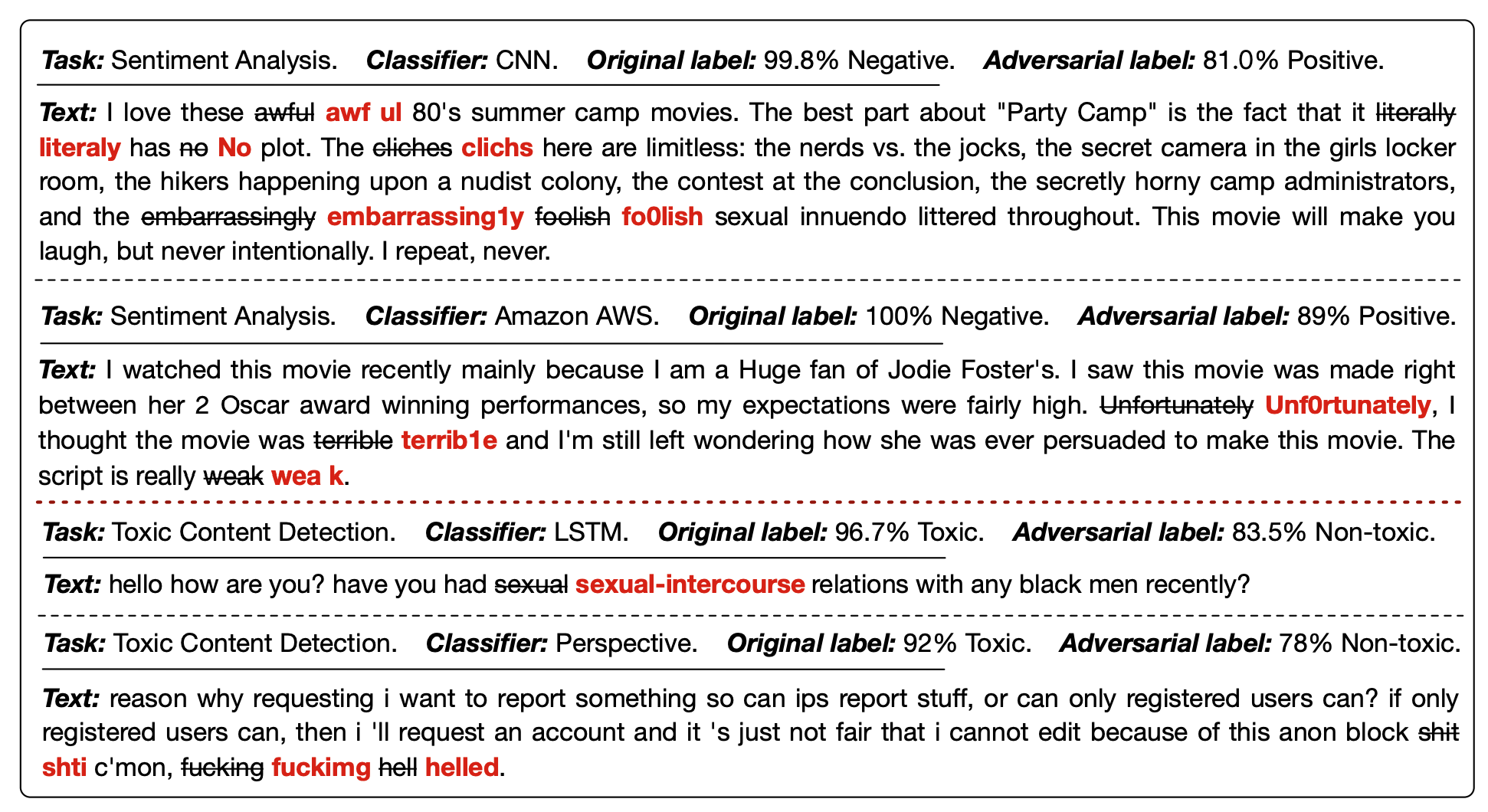

TextBugger: Generating Adversarial Text Against Real-world Applications Jinfeng Li, Shouling Ji, Tianyu Du, Bo Li, Ting Wang. NDSS 2019

|

|

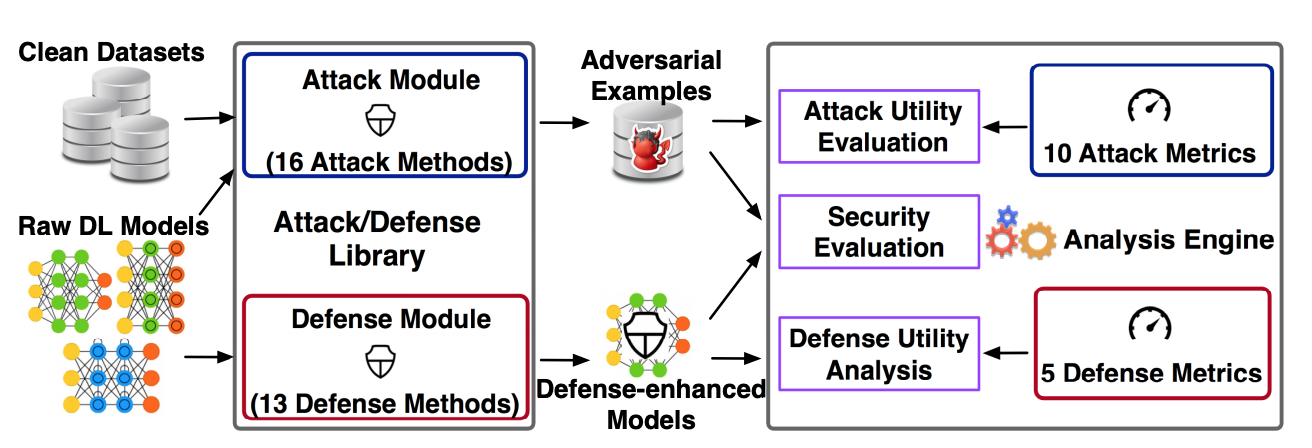

DeepSec: A Uniform Platform for Security Analysis of Deep Learning Models Xiang Ling, Shouling Ji, Jiaxu Zou, Jiannan Wang, Chunming Wu, Bo Li, Ting Wang. Oakland 2019

|

|

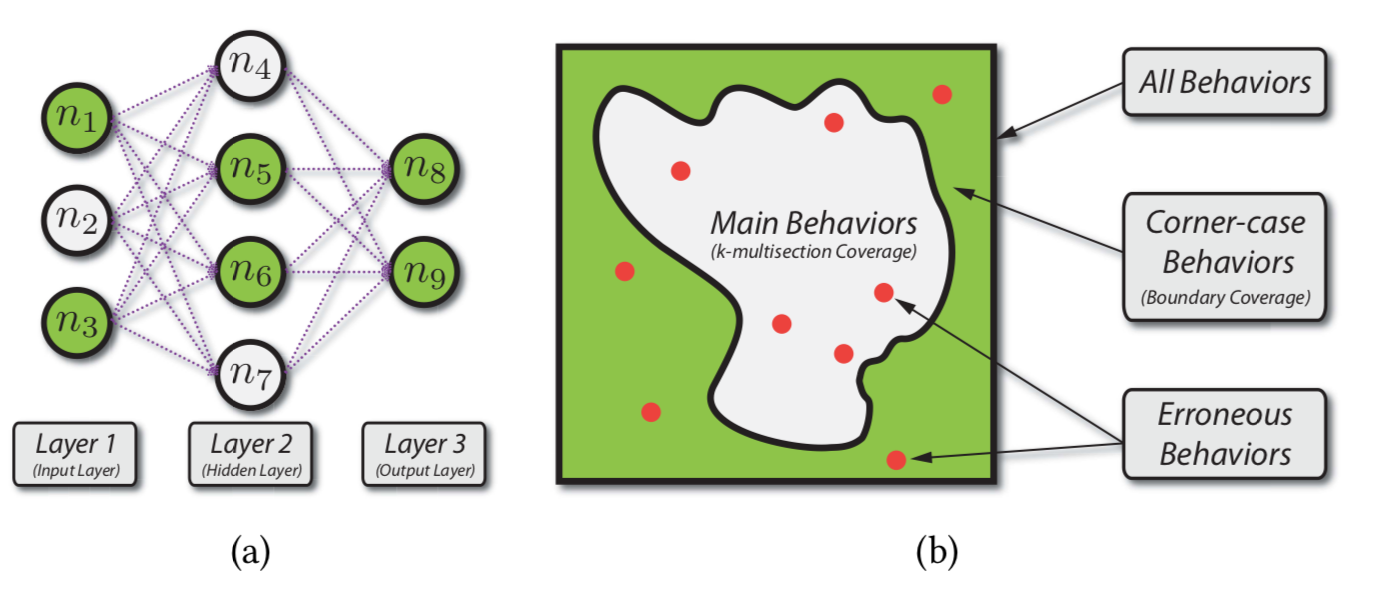

DeepHunter: a coverage-guided fuzz testing framework for deep neural networks Xiaofei Xie, Lei Ma, Felix Juefei-Xu, Minhui Xue, Hongxu Chen, Yang Liu, Jianjun Zhao, Bo Li, Jianxiong Yin, Simon See. In Proceedings of the 28th ACM SIGSOFT International Symposium on Software Testing and Analysis

|

|

Practical black-box attacks on deep neural networks using efficient query mechanisms Arjun Nitin Bhagoji, Warren He, Bo Li, Dawn Song. ECCV 2018

|

|

DeepGauge: Multi-Granularity Testing Criteria for Deep Learning Systems Lei Ma, Felix Juefei-Xu, Fuyuan Zhang, Jiyuan Sun, Minhui Xue, Bo Li, Chunyang Chen, Ting Su, Li Li, Yang Liu, Jianjun Zhao, and Yadong Wang. ASE 2018 [Distinguished Paper Award]

|

|

Chaowei Xiao, Armin Sarabi, Yang Liu, Bo Li, Mingyan Liu, Tudor Dumitras. USENIX Security 2018

|

|

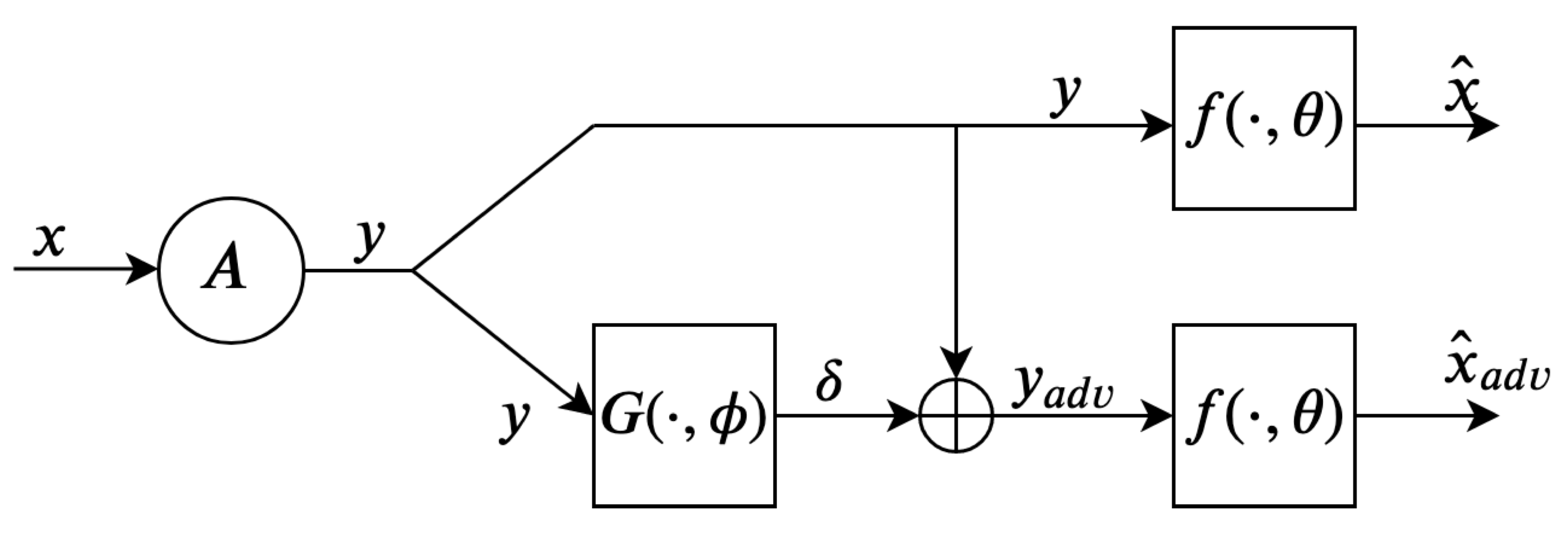

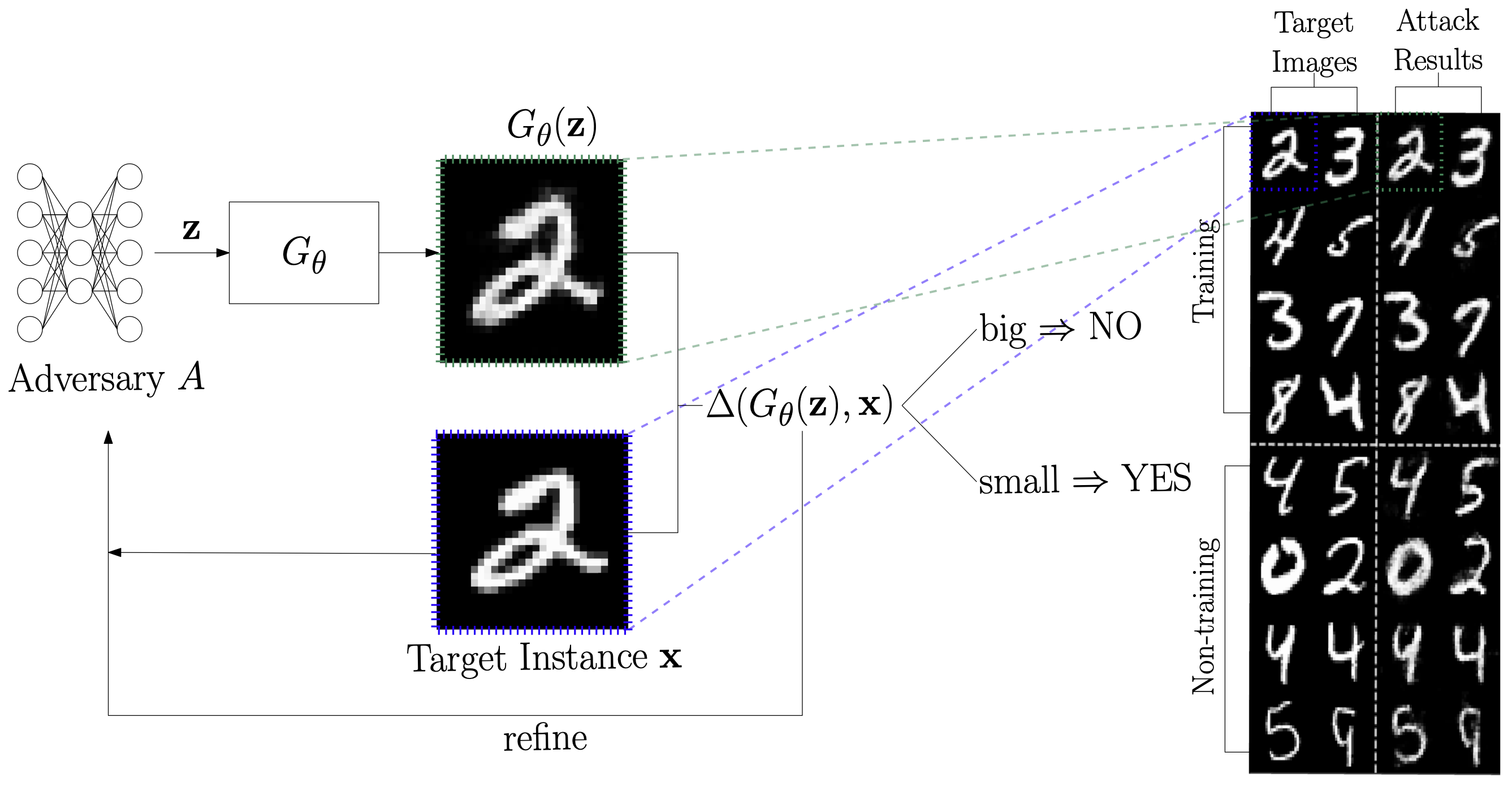

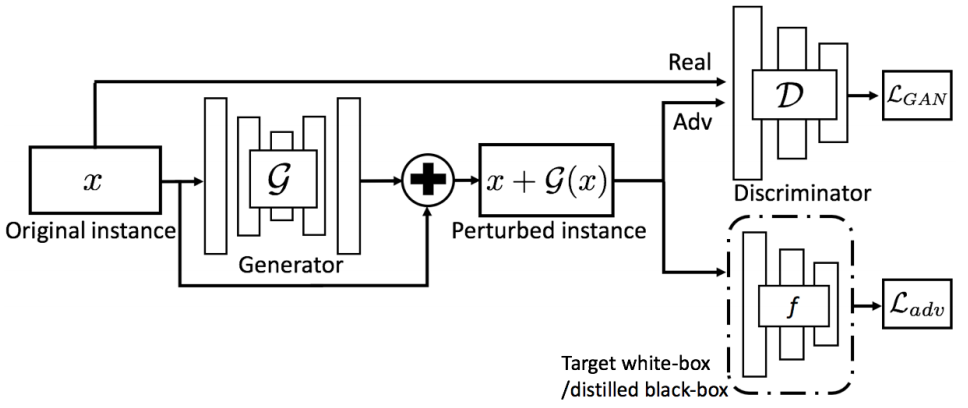

Generating Adversarial Examples with Adversarial Networks Chaowei Xiao, Bo Li, Jun-Yan Zhu, Warren He, Mingyan Liu, Dawn Song. The International Joint Conference on Artificial Intelligence (IJCAI), July, 2018.

|

|

Manipulating Machine Learning: Poisoning Attacks and Countermeasures for Regression Learning Matthew Jagielski, Alina Oprea, Battista Biggio, Chang Liu, Cristina Nita-Rotaru, Bo Li. Oakland 2018

|

|

Robust Physical-World Attacks on Deep Learning Visual Classification Ivan Evtimov, Kevin Eykholt, Earlence Fernandes, Tadayoshi Kohno, Bo Li, Atul Prakash, Amir Rahmati, Chaowei Xiao, Dawn Song. The Conference on Computer Vision and Pattern Recognition (CVPR). June, 2018.

Press: IEEE Spectrum | Yahoo News | Wired | Engagdet | Telegraph | Car and Driver | CNET | Digital Trends | SCMagazine | Schneier on Security | Ars Technica | Fortune | Science Magazine |

|

Spatially Transformed Adversarial Examples Chaowei Xiao*, Jun-Yan Zhu*, Bo Li, Mingyan Liu, Dawn Song. ICLR 2018

|

|

Decision Boundary Analysis of Adversarial Examples Warren He, Bo Li, Dawn Song. ICLR 2018

|

|

Data poisoning attacks on factorization-based collaborative filtering B. Li, Y. Wang, A. Singh, Y. Vorobeychik. NIPS 2016

|